1 The Basics of Probability and Statistical Inference

1.1 Data and Statistical Populations

Data surround us, in the form of numbers, texts, images, and more that are collected and analyzed across disciplines. Tweets contain data about user sentiments. Receipts contain data about people’s buying habits. Pictures help us differentiate between, e.g., goldfish and dogs. These data\(-\)tweets, receipts, pictures\(-\)are unstructured data, so-called because we generally cannot visualize or analyze them directly. So what can we do? We can provide structure: to determine if a tweet indicates that a film was liked or disliked, we can extract counts of words indicating sentiments (e.g., “good” and “bad”). To determine the whether an image is that of a goldfish or a dog, it can be passed through appropriate filters that break down the images to a series of analyzable numbers. Etc.

The result of all this “pre-processing” is generation of structured data, data in the form of a table in which the columns represent particular measurements (e.g., the number of instances of the word “good”) and the rows representing the objects of study (e.g., individual films). Let’s focus on a single table column. Perhaps its data look like this:

34.1 28.6 37.7 52.1 26.6 28.9 ...Such data are dubbed quantitative data. Quantitative data are numbers that might be discretely valued (e.g., 1, 2, and 3) or continuously valued and measured to arbitrary precision (e.g., 15.4959735). Data may also look like this:

Heads Heads Tails Heads ...These data are categorical data; each outcome is one element from a set of categories. Here that set is {Heads,Tails}.

An experiment is the act of measuring and recording a datum (like when after each flip of a coin we record \(H\) for heads and \(T\) for tails). The data we generate from experiments are drawn from populations, the sets of all possible experimental outcomes. A population can be an existing group of objects (e.g., 52 cards in a deck, eight socks of different colors in a drawer), but it can also be hypothetical (e.g., a mathematical function, like a bell curve, which indicates the relative rates at which we would draw samples with particular values). To boil down the discipline of statistics to its essence, our goal is to use the data we have drawn from a population to say something (i.e., to infer something) about the population itself. If we record the heights of 100 people, we would like to say something about the average height of humans. If we record the ice-cream flavor preferences of 500 people, we would like to infer the proportion of humans that prefer chocolate to vanilla. Etc. Data surround us and the possibilities for inference are plentiful.

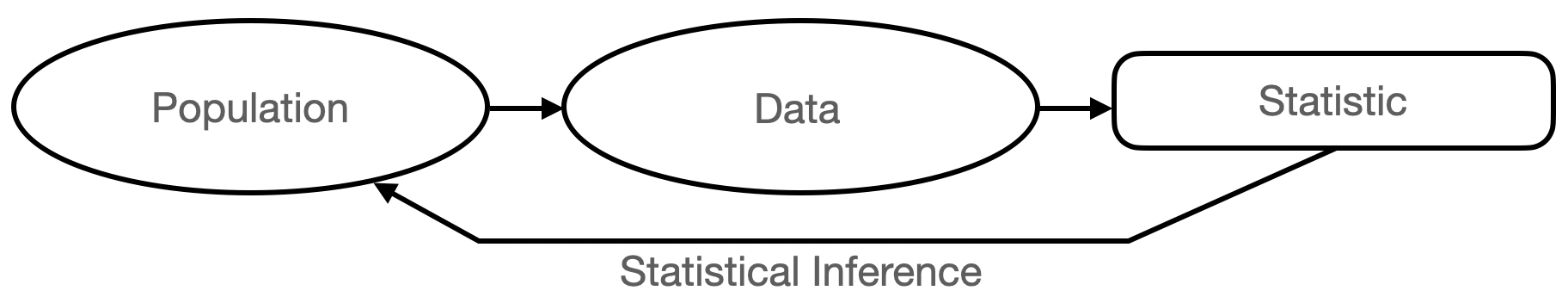

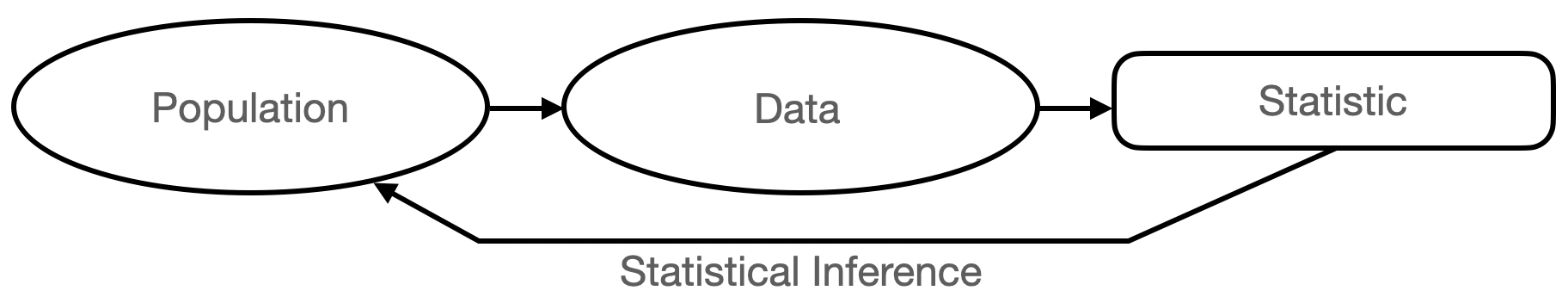

We pictorially summarize what we write above in Figure 1.1. One might immediately notice the word “statistic,” which we have yet to define. As we see later in this chapter, a statistic is simply a function of data (such as their average value) that helps reduce data volume while (hopefully!) retaining sufficient information to allow useful inferences to happen. Defining and understanding useful statistics is a major part of this course!

Figure 1.1: The canonical experiment-and-infer cycle. We gather data sampled from an unknown population, and use statistics, or functions of the data, to infer population properties.

But, the reader says: the course is called Modern Probability and Statistical Inference. Where is probability in all of this? Probability is the so-called “language of statistics,” and it provides the mathematical framework upon which we can build statistical inference. Remember how above we say that a population might be a mathematical function indicating the relative rates of observing experimental outcomes? Those relative rates are probabilities (or at least probability densities). Thus the structure of this chapter (and “mathemtical statistics” courses as a whole): we discuss probability first, and then use our newfound knowledge to show how the enterprise of statistical inference works, both algorithmically and mathematically.

1.2 Sample Spaces and the Axioms of Probability

Probability is the long-term frequency of the occurrence of an event. For instance, what is the probability of flipping a coin and observing heads? (Intuitively, this probability is 1/2, if the coin is fair.) Or: what is the probability that a student finishes a particular test in between 30 and 40 minutes? Etc.

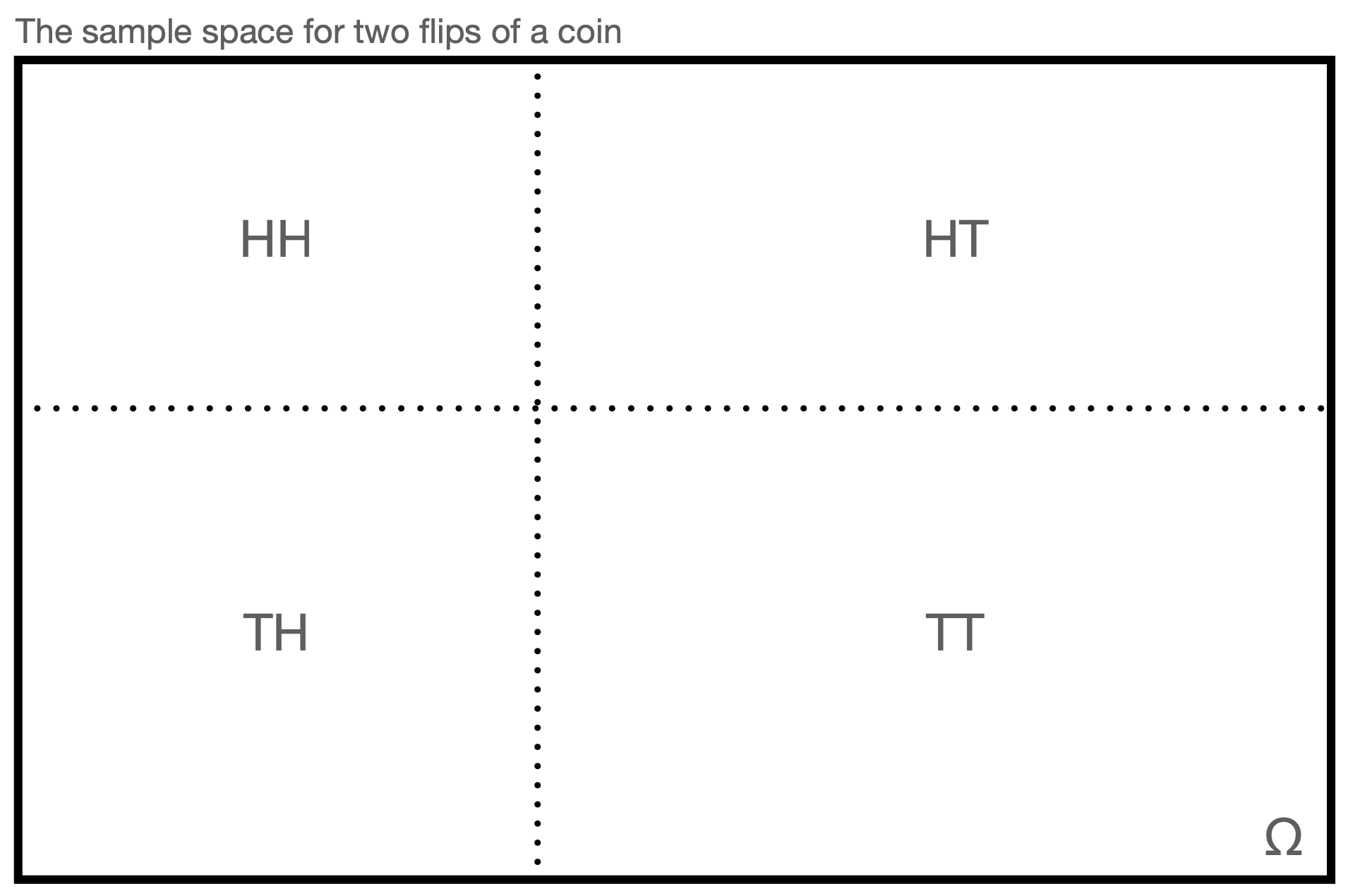

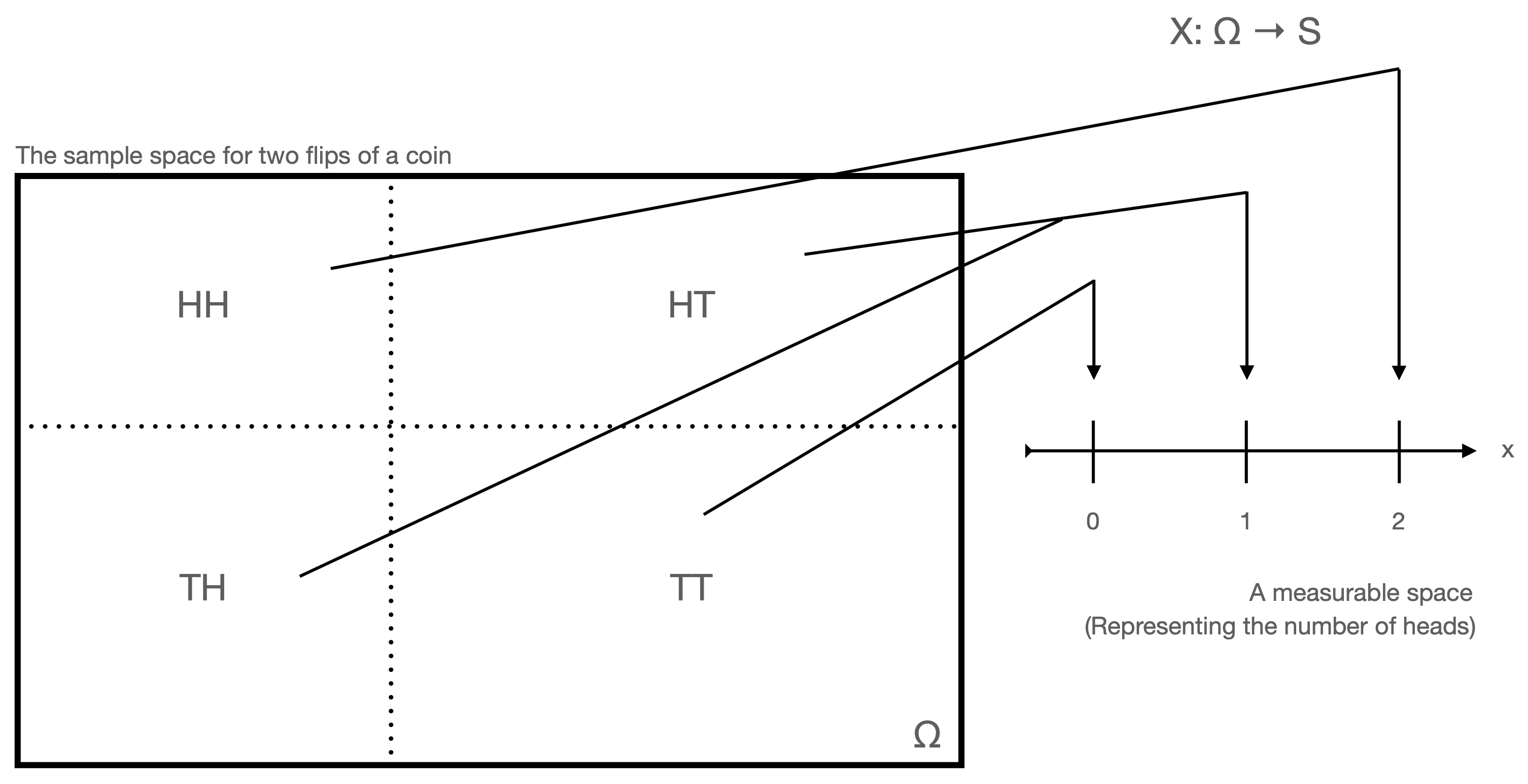

To build up an understanding of probability, it is conventional to start with the concept of a sample space. A sample space is the set of all possible outcomes of an “experiment” (or “trial”), which is simply some process that can, in theory, be repeated an infinite number of times. (For instance, the flipping of a coin.) For instance, if our experiment is to flip a single coin twice, the sample space would be \[ \Omega = \{HH,HT,TH,TT\} \,, \] where \(H\) and \(T\) represent observing heads and tails, respectively. (The Greek letter \(\Omega\) is a capital “omega,” or “oh-MAY-gah.”) See Figure 1.2.

Figure 1.2: This is an example of a sample space \(\Omega\), representing the experimental outcomes of flipping a single coin twice and recording the observed side of the coin. For purposes of intuition, it is common to associate the area shown for each outcome with that outcome’s probability of occurrence, so here we may view the coin as an unfair one that favors tails.

The members of the set \(\Omega\) are dubbed events and they come in two varieties:

- simple events: specific experimental outcomes (e.g., \(HH\)); any two simple events in \(\Omega\) are mutually exclusive, or disjoint, as they cannot be observed simultaneously in a single experiment.

- compound events: sets of two or more simple events (e.g., \(\{HH,HT,TH\}\), which represents the set of outcomes where heads was observed at least once).

As stated above, a sample space is a set of possible experimental outcomes; thus we can apply set notation to, e.g., define specific events as functions of others:

| term | notation | intuitive terminology |

|---|---|---|

| superset | \(A \supset B\) | “encompasses” |

| subset | \(A \subset B\) | “within” |

| union | \(A \cup B\) | “or” |

| intersection | \(A \cap B\) | “and” |

| complement | \(\bar{A}\) | “not” |

We show examples of how we use set notation in the context of samples spaces below.

Here are a few more things to keep in mind regarding sample spaces:

- The number of simple events in \(\Omega\) (i.e., the set’s cardinality) may be finite (as in the example above) or either countably or uncountably infinite (e.g., the set of all non-negative integers versus the set of real numbers). (For instance, the simple events in the experiment of repeating a task until one fails are \(\{F,SF,SSF,SSSF,\ldots\}\), where \(S\) denotes success and \(F\) denotes failure.)

- The definition of a sample space can depend upon whether the order of outcomes matters. For instance, if the order of outcomes does not matter, we could rewrite our two-coin-flip sample space as \(\Omega = \{HH,HT,TT\}\) (or \(\{HH,TH,TT\}\)).

- At no point thus far have we indicated the probability of observing any simple event. It is not the case in general that each experimental outcome is equally likely!

Regarding the last point above: while we may not know the probability of observing any simple event, there are some things we can say about its long-term relative frequency of occurrence:

- it must be \(> 0\) and \(\leq 1\);

- the relative frequencies of all simple events in \(\Omega\) must sum to 1; and

- the relative frequency of a compound event must equal the sum of the relative frequencies of its component simple events.

These statements appear to be self-evident, and as thus may be dubbed axiomatic. (A mathematical axiom is a statement accepted without proof.) In probability theory, these statements were recast as the so-called Kolmogorov axioms, introduced by Andrey Kolmogorov in 1933. Let \(A\) denote an event within \(\Omega\) (i.e., \(A \subset S\)), either simple or compound. A probability measure on \(\Omega\) is a function \(P\) from subsets of \(\Omega\) to \(\mathbb{R}^n\) that satisfies the following:

- \(P(A) \in [0,1]\);

- \(P(\Omega) = 1\); and

- if \(\{B_1,\ldots,B_k\}\) is a set of mutually exclusive simple or compound events, then \(P(\bigcup_{i=1}^k B_i) = \sum_{i=1}^k P(B_i)\), where the symbol \(\bigcup\) refers to the union of the set of events, i.e., the combination of all the events in the set into a single compound event.

1.2.1 Utilizing Set Notation

Let’s suppose that we have in our hand one six-sided die, with faces numbered 1 through 6. We roll it once, and observe the value on the uppermost face. The sample space is \[ \Omega = \{1,2,3,4,5,6\} \,. \] Let the event \(A\) be all odd-numbered outcomes, and let the event \(B\) be all outcomes less than 4. Thus \(A = \{1,3,5\}\) and \(B = \{1,2,3\}\).

- What is \(\bar{A}\), the complement of \(A\)? It is the set of all outcomes not in \(A\), i.e., the set of all even-numbered faces: \(\bar{A} = \{2,4,6\}\).

- What is \(A \cup B\), the union of \(A\) and \(B\)? It is the set of all outcomes observed in either \(A\) or \(B\), without double counting: \(A \cup B = \{1,2,3,5\}\) (and not \(A \cup B = \{1,1,2,3,3,5\}\)…it is meaningless to write out the same experimental outcome twice).

- What is \(A \cap B\), the intersection of \(A\) and \(B\)? It is the set of all outcomes observed in both \(A\) and \(B\), again without double counting: \(A \cap B = \{1,3\}\).

We note that we can combine unions, intersections, and complements in, e.g., the distributive law, \[ A \cap (B \cup C) = (A \cap B) \cup (A \cap C) \,, \] the associative law, \[ A \cup (B \cap C) = (A \cup B) \cap (A \cup C) \,, \] and De Morgan’s laws, \[ \overline{A \cup B} = \bar{A} \cap \bar{B} ~~\mbox{and}~~ \overline{A \cap B} = \bar{A} \cup \bar{B} \,. \]

1.2.2 Working With Contingency Tables

Let’s assume that we are given the following information about two events \(A\) and \(B\):

| \(A\) | \(\bar{A}\) | |

|---|---|---|

| \(B\) | 0.45 | 0.12 |

| \(\bar{B}\) | 0.21 | 0.22 |

This is dubbed a contingency table (or, more specifically here, a two-by-two contigency table). The numbers in each cell represent probabilities; for instance, \(P(A \cap B) = 0.45\). A contingency table is appropriate to work with if the probabilities associated with each event do not change from experiment to experiment.

- What is \(P(A)\)? We can determine this by summing down the \(A\) column: \(P(A) = P[A \cap \Omega] = P[A \cap (B \cup \bar{B})] = P[(A \cap B) \cup (A \cap \bar{B})]\); since \(A \cap B\) and \(A \cap \bar{B}\) are disjoint, we can view the \(\cup\) as addition, and so \(P(A) = P(A \cap B) + P(A \cap \bar{B}) = 0.45 + 0.21 = 0.66\).

- What is \(P(A \cup B)\)? Utilizing De Morgan’s laws, this would be \(1 - P(\overline{A \cup B}) = 1 - P(\bar{A} \cap \bar{B}) = 1 - 0.22 = 0.78\).

- What is the probability of observing \(A\) or \(B\), but not both? This would be \(P(A \cup B) - P(A \cap B)\), which is \(0.78 - 0.45 = 0.33\).

It is well worth taking the time to see how one could derive each of these answers through visual inspection of the table. For instance, \(P(A \cup B)\) is 1 minus the value in the cell at lower right, which does not lie in the row for \(B\) or the column for \(A\).

1.3 Conditional Probability and the Independence of Events

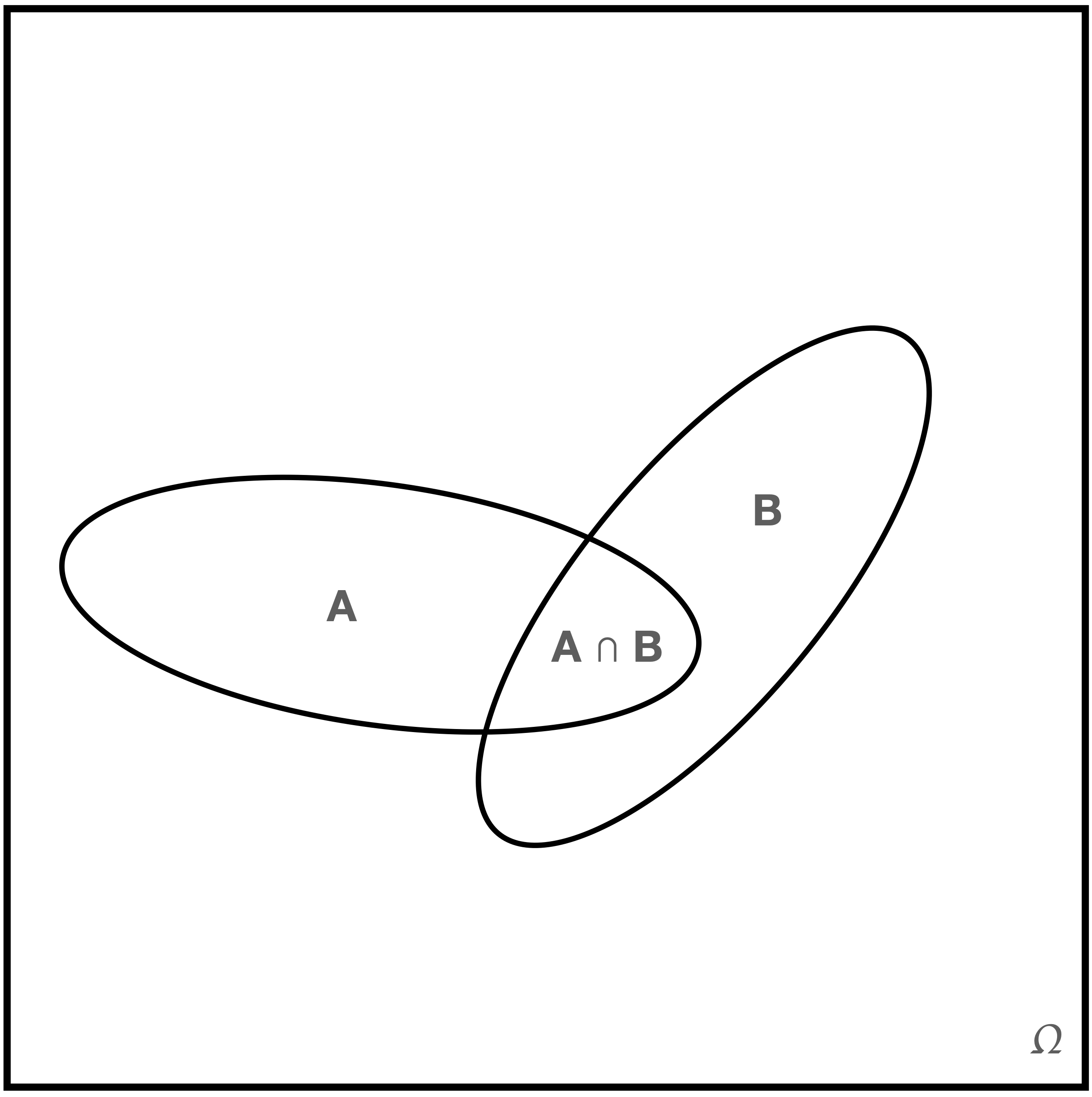

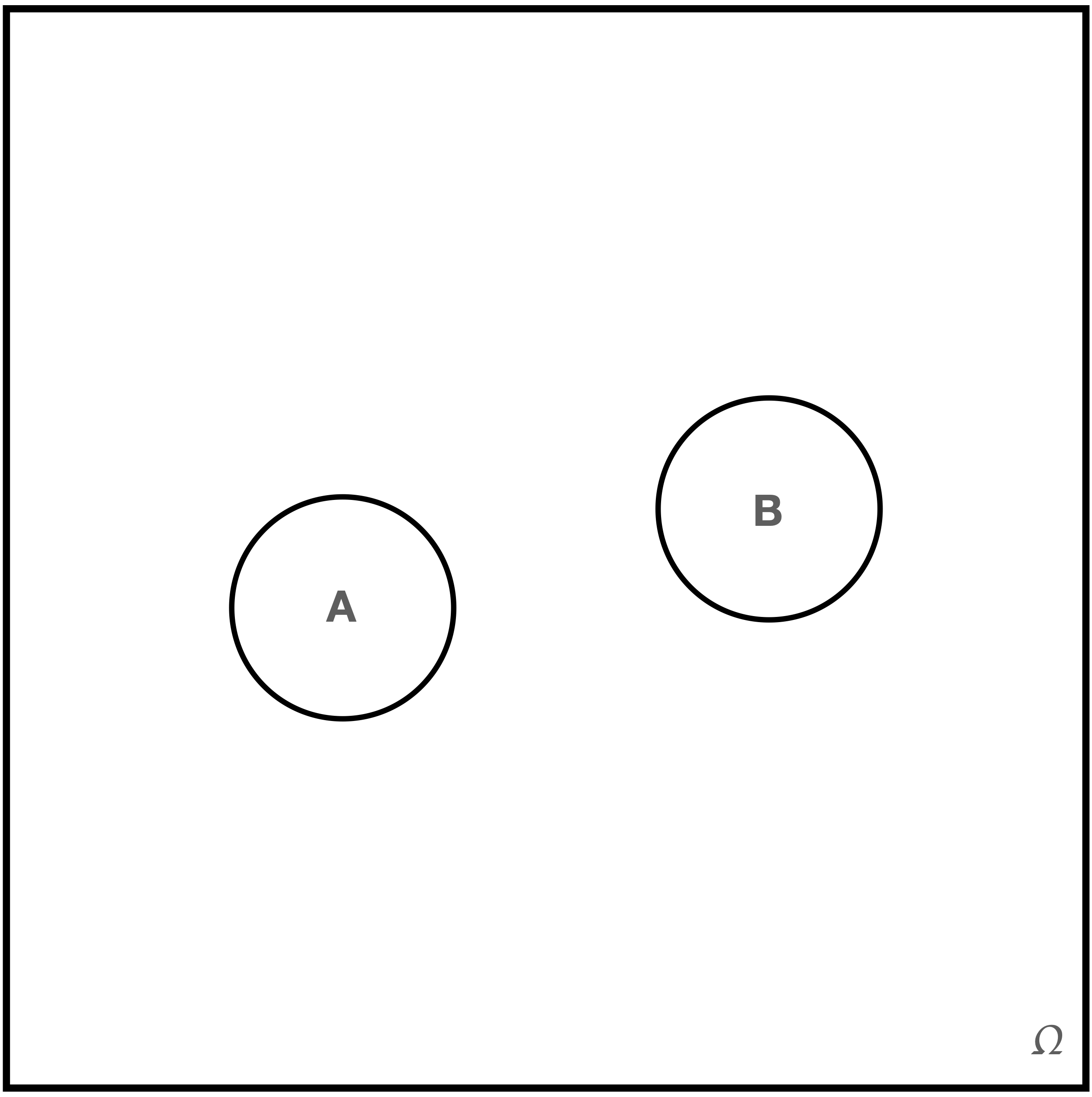

Intuitively, we can picture a sample space \(\Omega\) and two of its constituent events as looking something like what we show in Figure 1.3.

Figure 1.3: A sample space with non-disjoint events \(A\) and \(B\).

We can imagine that the geometric areas of each region represent probability, with \(P(\Omega) = 1\) (given the second Kolmogorov axiom) and \(P(A \cap B) > 0\) being the probability that both \(A\) and \(B\) occur during an experiment. (Perhaps \(A\) is the event of speaking French and \(B\) is the event of living is Brussels. The symbol \(\cap\) denotes the intersection or overlap between two sets, whereas the analogous symbol \(\cup\) represents the union of two sets.)

We can use this intuitive picture to illustrate the concept of conditional probability. A stated event probability, such as \(P(A)\), is an unconditional probability: its occurrence does not depend on whether or not other events occur. To denote a conditional probability, we add a vertical bar and place the conditions to the right of it. For instance, \(P(A \vert B)\) denotes the probability that the event \(A\) is observed, given that the event \(B\) is observed. (Note that there is no implied causality: it is not necessarily the case that \(B\) occurring is “causing” changes to the probability that \(A\) will occur.) To illustrate why \(P(A)\) may not equal \(P(A \vert B)\), we first point out that \(P(A) = P(A \vert \Omega)\), which we may think of as “the probability of observing the event \(A\) if we observe the event \(\Omega\),” which is the ratio of geometric areas of \(A \cap \Omega\) and \(\Omega\): \[ P(A) = P(A \vert \Omega) = \frac{P(A \cap \Omega)}{P(\Omega)} \,. \] When we condition on the event \(B\), we are reducing the set of possible outcomes from the full sample space \(\Omega\) to \(B\), i.e., we are replacing \(\Omega\) in the expression above with \(B\): \[ P(A \vert B) = \frac{P(A \cap B)}{P(B)} \,, \]

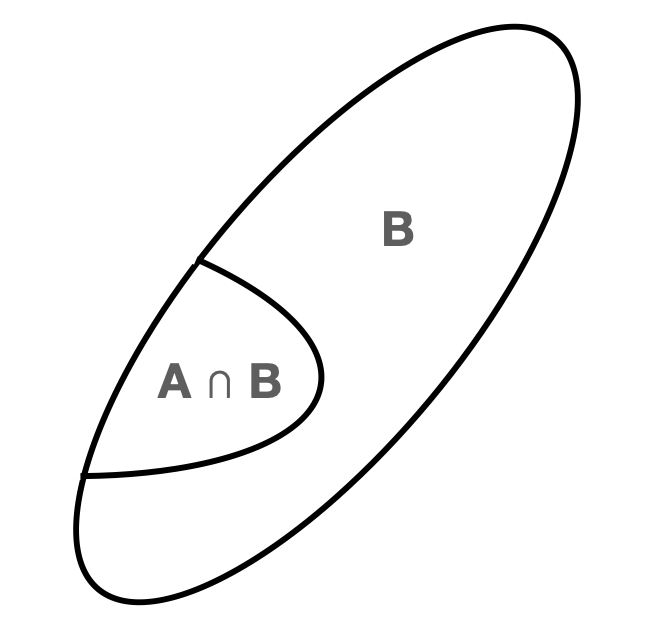

In the context of our intuitive picture, we are changing the one shown above to the one we show in Figure 1.4.

Figure 1.4: The new sample space that arises when we condition on the event \(B\).

\(B\) thus defines a new “sample space.”

Two events \(A\) and \(B\) are independent if the probability of observing one does not depend on the probability of observing the other. The intuitive picture many have of independence is the one shown in Figure 1.5.

Figure 1.5: To many, \(A\) and \(B\) appear to be independent events…but they are simply disjoint.

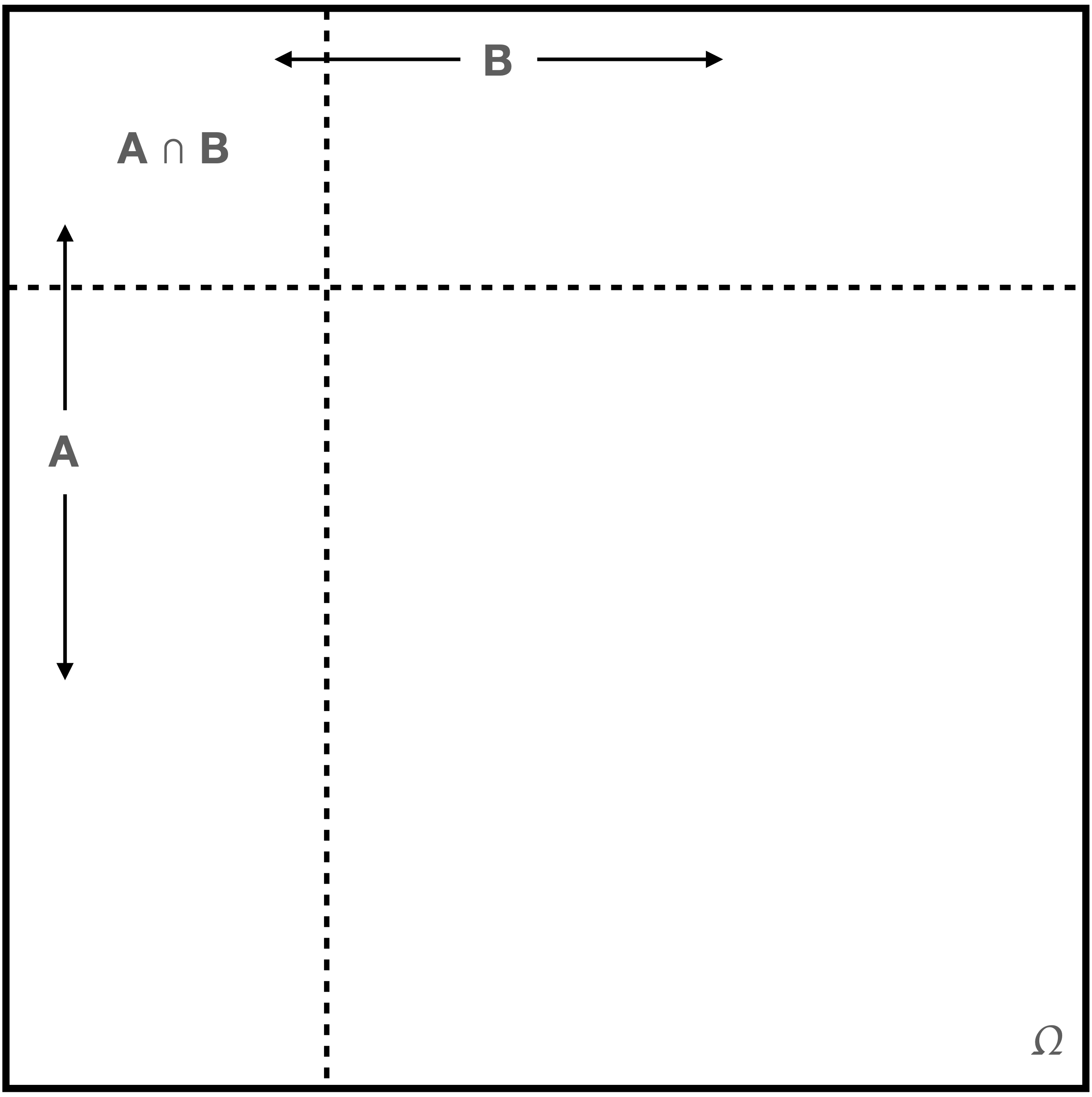

The events \(A\) and \(B\) do not overlap…hence they are independent events, right? No: they are simply disjoint events. Also, with reflection, we realize that if, e.g., the event \(A\) is observed in a given experiment, then we know that \(B\) cannot be observed. So these events are very much dependent! Figure 1.6 shows how we can actually represent \(A\) and \(B\) as independent events. In this figure, the ratio of the geometric area associated with the event \(A\) to the geometric area of \(\Omega\) is equal to the ratio of the areas of \(A \cap B\) and \(B\). Thus we can write that \[ P(A) = P(A \vert \Omega) = \frac{P(A \cap \Omega)}{P(\Omega)} = \frac{P(A \cap B)}{P(B)} = P(A \vert B) \,. \] The probability of observing the event \(A\) is unchanged if the event \(B\) occurs: \(A\) and \(B\) are independent events.

Figure 1.6: \(A\) and \(B\) are independent events.

1.3.1 Visualizing Conditional Probabilities: Contingency Tables

Let’s recall the two-by-two contingency table we defined in the previous section, but with some additional information added:

| \(A\) | \(\bar{A}\) | ||

|---|---|---|---|

| \(B\) | 0.45 | 0.12 | 0.57 |

| \(\bar{B}\) | 0.21 | 0.22 | 0.43 |

| 0.67 | 0.33 |

The numbers in the so-called “margins” are the row and column sums, so, for instance, \(P(A) = 0.67\). This information is useful to have when computing conditional probabilities. In analogy with what was stated above about imposing conditions and what that does to the sample space, here we can say that imposing a condition will restrict us to a given row or a given column. For instance, what is \(P(\bar{A} \vert B)\)? The condition restricts us to the top row, and within that row, the probability of observing the event \(\bar{A}\) is 0.12/0.57 = 0.21. So \(P(\bar{A} \vert B) = P(\bar{A} \cap B) / P(B) = 0.21\).

Now, are \(A\) and \(B\) independent events? The easy way to visually infer this given a two-by-two table is to see if the rows (or columns) are multiples of each other…meaning, here, is there a number \(a\) such that \(0.45 = 0.21 a\) and \(0.12 = 0.22 a\)? The answer here is no…so the events \(A\) and \(B\) are dependent events. (The conventional, yet longer way to determine independence is to see if, e.g., \(P(A \vert B) = P(A)\); if so, \(A\) and \(B\) are independent.)

1.3.2 Conditional Independence

If \(A\) and \(B\) are independent events, is it automatically the case that the events \(A \vert C\) and \(B \vert C\) are also independent events?

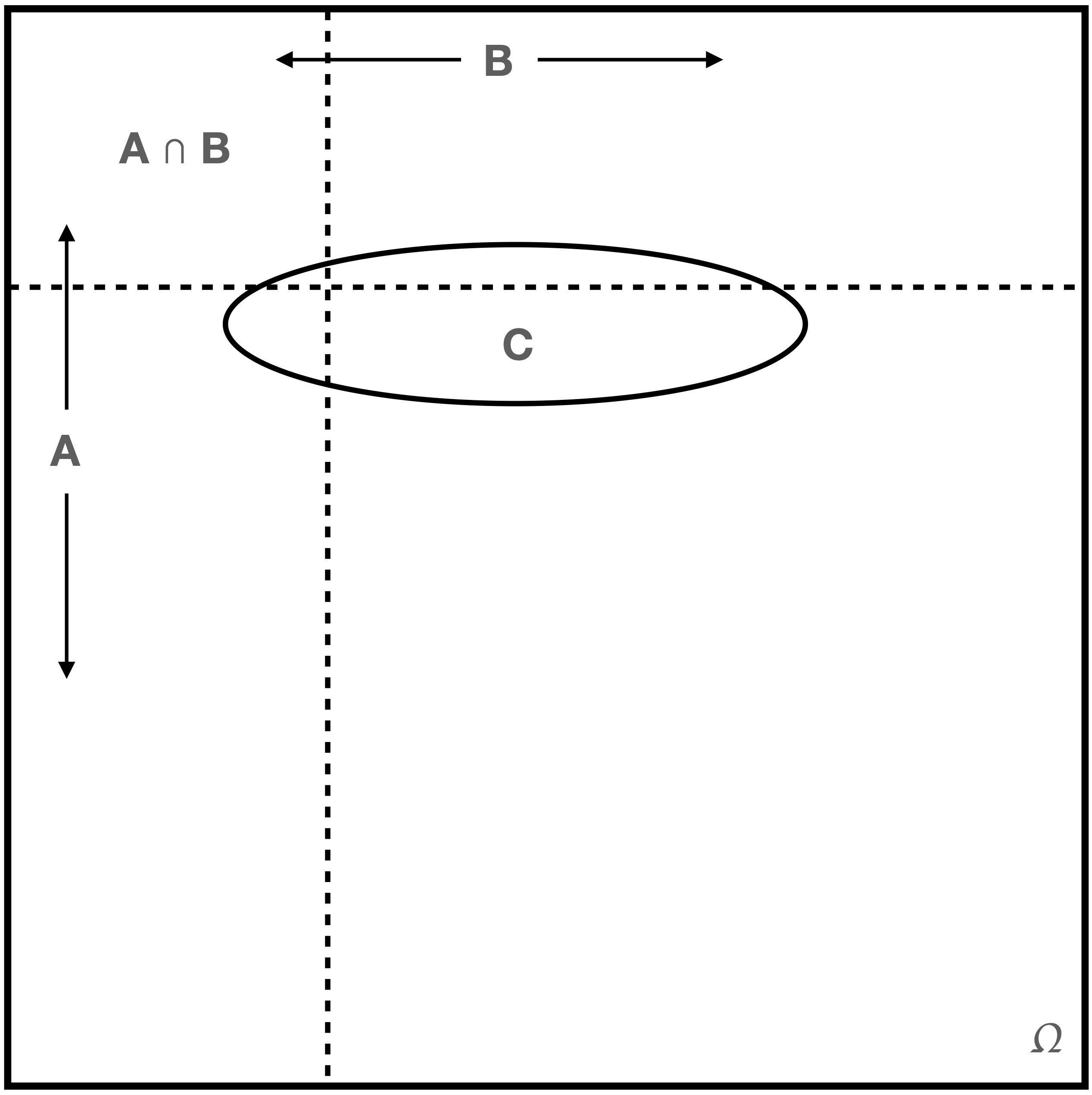

Recall the figure above that shows how independent events appear in a Venn diagram. Recall also that if we impose a condition \(C\), we effectively change the sample space from \(\Omega\) to \(C\). Imagine \(C\) as an arbitrarily shaped region superimposed on the last figure, so that now we have a situation like the one in Figure 1.7.

Figure 1.7: \(A\) and \(B\) are not necessarily independent events, given \(C\).

The events \(A\) and \(B\) are conditionally independent given \(C\) if \(P(C) > 0\) and \(P(A \cap B \vert C) = P(A \vert C) P(B \vert C)\). As we can see in Figure 1.7, \(C\) can be made to overlap \(B\), \(A\), and \(A \cap B\) in any number of ways such that \(P(A \cap B \vert C) \neq P(A \vert C) P(B \vert C)\)…so it is not the case that if \(A\) and \(B\) are independent, \(A \vert C\) and \(B \vert C\) are always independent. (If \(C\) had a rectangular shape with a horizontal base and top and vertical sides, conditional independence would hold. Think through why this would be true…)

1.4 Further Laws of Probability

Now that we have learned about the concepts of conditional probabilities and independence, we can write down some useful laws that one can use to solve an array of probability-based problems.

- Multiplicative Law. This follows simply from rearranging the definition of conditional probability: \[ P(A \cap B) = P(A) P(B \vert A) = P(B) P(A \vert B) \] We can generalize this law given an arbitrary number of events \(k\): \[\begin{align*} P(A_1 \cap A_2 \cap \cdots \cap A_k) &= P(A_1 \vert A_2 \cap \cdots \cap A_k) P(A_2 \cap \cdots \cap A_k) = \cdots \\ &= P(A_k) \prod_{i=1}^{k-1} P(A_i \vert A_{i+1} \cap \cdots \cap A_k) \,, \end{align*}\] where \(\prod\) is the product symbol, the multiplicative analogue to the summation symbol \(\sum\).

- Additive Law. The probability of the union of two events \(A\) and \(B\) is \[ P(A \cup B) = P(A) + P(B) - P(A \cap B) \,. \] If the events \(A\) and \(B\) are not disjoint, then if we add their probabilities, we count the probability of \(A \cap B\) twice…hence the subtracted term.

- Law of Total Probability (LoTP). Assume that we partition the sample space \(\Omega\) into \(k\) disjoint (simple or compound) events \(\{B_1,\ldots,B_k\}\), all of which have non-zero probability of occurring. Then, given any event \(A\), we can write \[ P(A) = \sum_{i=1}^k P(A \vert B_i) P(B_i) \,. \]

- Bayes’ Rule. Continue to assume that the sample space is partitioned into the events \(\{B_1,\ldots,B_k\}\). The conditional probability of each of these events, given that \(A\) occurs, is \[ P(B_i \vert A) = \frac{P(A \vert B_i) P(B_i)}{\sum_{j=1}^k P(A \vert B_j)P(B_j)} = \frac{P(A \vert B_i)P(B_i)}{P(A)} \,. \]

1.4.1 The Additive Law for Independent Events

Let’s assume that for a given experiment, we can define the independent events \(A\) and \(B\), with \(P(A) = 0.6\) and \(P(B) = 0.4\). What is \(P(A \cup B)\)?

In general, when solving probability problems, we look at all the rules and relationships at our disposal and see which one (or more!) contains the probabilities we know and the one we don’t know, and we use that rule or relationship to derive the solution. Here, there is nothing that directly relates \(A\) and \(B\) to \(A \cup B\)…the events may overlap when represented on a Venn diagram, and we don’t know by how much. Except…we are given the word “independent.” That allows us to say that \(P(A \cap B) = P(A)P(B)\)…and now we know that the additive law is in play: \[\begin{align*} P(A \cup B) &= P(A) + P(B) - P(A \cap B) \\ &= P(A) + P(B) - P(A \vert B)P(B) \\ &= P(A) + P(B) - P(A)P(B) = 0.6 + 0.4 - 0.6 \cdot 0.4 = 0.76 \,. \end{align*}\] Is this the only way to solve the problem? No…we know from De Morgan’s laws that \(\overline{A \cup B} = \bar{A} \cap \bar{B}\), and thus \[\begin{align*} P(A \cup B) &= 1 - P(\overline{A \cup B}) = 1 - P(\bar{A} \cap \bar{B}) = 1 - P(\bar{A} \vert \bar{B})P(\bar{B}) \\ &= 1 - P(\bar{A})P(\bar{B}) = 1 - (1-0.6)(1-0.4) = 0.76 \,. \end{align*}\] There is no “right” way to solve a probability problem…just correct ones.

1.4.2 The Monty Hall Problem

Let’s Make a Deal is a game show that has appeared on television at various times since 1963. During one part of the show, contestants are brought on stage and presented with three closed doors; behind one is an expensize prize (say, a car or an around-the-world cruise), and behind the other two are inexpensive prizes (like a year’s supply of Turtle Wax). The contestant is asked to pick a door (say, Door #1), at which point the show’s host will open another door (say, Door #3) and show the inexpensive prize behind that door (thereby taking that door out of play). The contestant is then asked if they want to stick with the door they’ve chosen (here, Door #1), or switch their choice to the other unopened door (here, Door #2). What should we advise the constestant to do?

The original, and most famous, host of Let’s Make a Deal was Monty Hall. Hence: the Monty Hall Problem. (Note that the problem is often stated such that there is a car being behind one door and goats behind the other two. The author is old enough to have seen the show in its heyday and he recalls seeing no goats. Or maybe they made no impression at the time…)

Assume, without loss of generality, that Door #1 is chosen. Then, let

- \(O_i\) = “Monty Hall opens Door #\(i\)”

- \(C_i\) = “The car is behind Door #\(i\)”

and assume that \(P(C_i) = 1/3\) for all \(i\). (The car could have been placed behind any door before the show was filmed.) The sample space of experimental outcomes is \[ \Omega = \{ O_2 \cap C_1 , O_2 \cap C_3 , O_3 \cap C_1 , O_3 \cap C_2\} \,. \] Why not \(O_2 \cap C_2\) and \(O_3 \cap C_3\)? Monty is not stupid: he won’t open the door the car is behind. (He knows where it is!)

Let’s assume, again without any loss of generality, that Monty opens Door #3. The probability we want to compute is \(P(C_2 \vert O_3)\): what is the probability that the car is actually behind Door #2? (Note that this is \(1 - P(C_1 \vert O_3)\)…again, \(P(C_3 \vert O_3) = 0\), as Monty is not stupid.) Is this probability 1/2? We utilize Bayes’ rule and the LoTP to write \[ P(C_2 \vert O_3) = \frac{P(O_3 \vert C_2) P(C_2)}{P(O_3)} = \frac{P(O_3 \vert C_2) P(C_2)}{P(O_3 \vert C_2) P(C_2) + P(O_3 \vert C_1) P(C_1)} = \frac{P(O_3 \vert C_2)}{P(O_3 \vert C_2) + P(O_3 \vert C_1)}\,. \] What do we know?

- \(P(O_3 \vert C_2) = 1\): if the car is behind Door #2, Monty has to open Door #3

- \(P(O_3 \vert C_1) = 1/2\): Monty can open either Door #2 or #3 if the car is behind Door #1

Hence \[ P(C_2 \vert O_3) = \frac{1}{1 + 1/2} = \frac{2}{3} \,. \] We should advise the contestant to open Door #2!

Confused? Think about the solution this way: the contestant has a one-third chance of correctly picking the door the car is behind, and a two-thirds chance of being wrong. Opening one of the other doors (while knowing there is no car behind it) doesn’t change these conditions at all: the contestant still has a one-third chance of having initially picked the correct door. Thus the contestant should change their pick to the other unopened door.

1.4.3 Visualizing Conditional Probabilities: Tree Diagrams

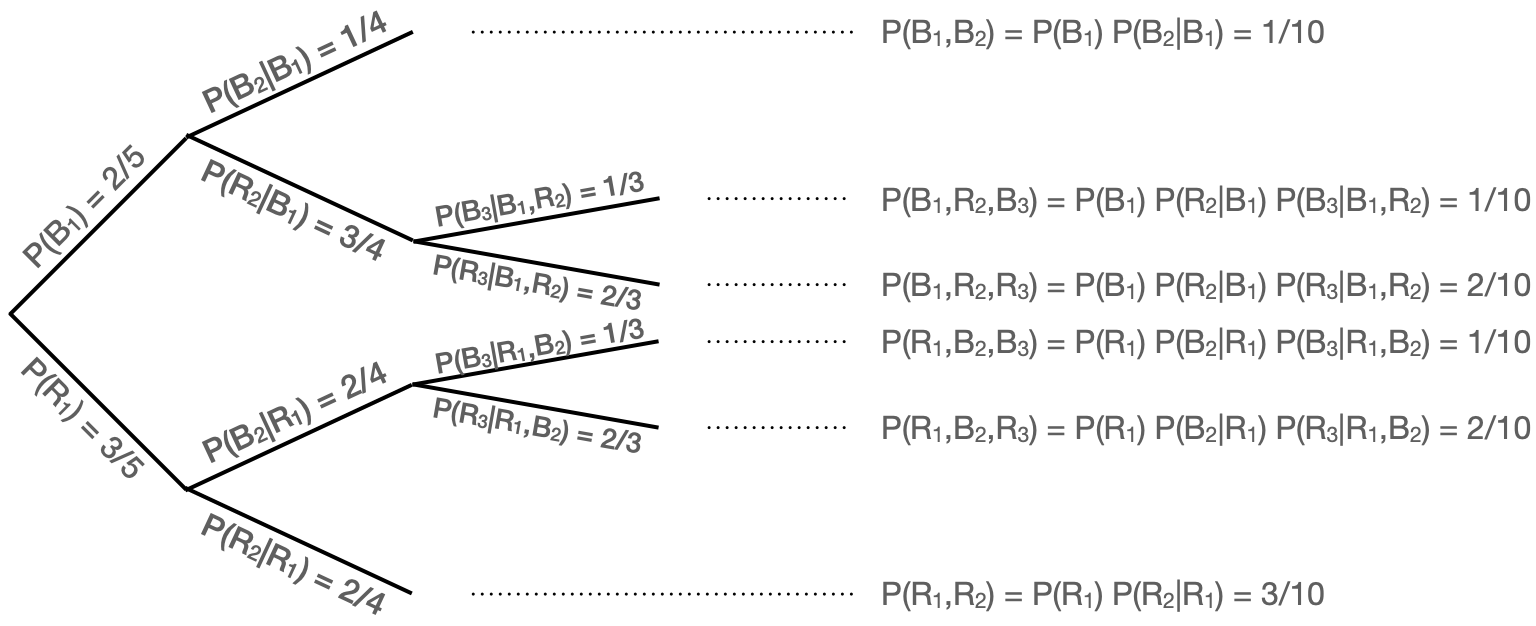

In the previous sections, we show how one can use contingency tables to aid the visualization of probabilities (and to solve for probabilities of simple and/or compound events). Here we show another, somewhat more general probability visualizer: the tree diagram. Why “somewhat more general”? First, a tree in a tree diagram can have arbitrary depth: if we have events \(A\), \(B\), and \(C\), the table would be three-dimensional, with the axes representing the experimental outcome in terms of \(A\) and \(\bar{A}\), \(B\) and \(\bar{B}\), and \(C\) and \(\bar{C}\). A table is not an optimal means to represent probabilities. And second, a tree is arguably a more natural means to represent probabilities when an experiment represents sequential outcomes, particularly when we sample without replacement.

Let’s elaborate on that second point. Let’s say we have a drawer with five socks, three of which are red and two of which are blue. We plan to draw three socks in succession from the drawer without placing the socks back into the drawer, but we will stop early if we draw two socks of the same color on the first two draws. What is the probability that our final sample contains two blue socks?

We can write out the following: if \(B_i\) and \(R_i\) are the probabilities of drawing a blue and red sock from the drawer when taking out the \(i^{\rm th}\) sock, then \(P(B_1) = 2/5\) and \(P(R_1) = 3/5\)…and \(P(B_2 \vert B_1) = 1/4\) because there is one less blue sock in the drawer, and… Actually, this gets tiring quickly. Let’s use a tree diagram instead.

Figure 1.8: An example of visualizing probabilities using a decision tree.

In Figure 1.8, we show the tree diagram for this problem. We note some aspects of this diagram:

- the tree can be truncated along some branches (here, that’s because we stop removing socks from the drawer if we remove two of the same color in the first two draws);

- at any branching point, the (conditional) probabilities of going down each branch sum to one; and

- the probability of ending up at a particular leaf (where the leaves collectively represent the simple events of the experiment) is the product of all the branch probabilities leading to that leaf.

So, now, what is the probability of drawing two blue socks in this experiment? To find that, we determine which leaves are associated with drawing two blue socks; from the top, that would be leaves 1, 2, and 4, with probabilities 1/10, 1/10, and 1/10. Because simple events are disjoint by definition, the probability of the compound event is simply the sum of the probabilities of the simple events, which here is 3/10. In any given replication of this experiment, we have a 30% chance of ending up with two blue socks.

1.5 Random Variables

Let’s say that we perform an experiment in which we flip a fair coin three times. Let \(H\) denote observing heads, and \(T\) tails. The sample space of outcomes \(\Omega\) is \[ \{ HHH,HHT,HTH,THH,HTT,THT,TTH,TTT\} \] and each outcome is observed with probability 1/8. What is the probability of observing exactly one tail? We can determine this by laboriously generating a table of probabilities, like so: \[\begin{align*} P(\mbox{"no tails"}) &= P(HHH) = 1/8 \\ P(\mbox{"one tail"}) &= P(HHT \cup HTH \cup THH) = 3/8 \\ P(\mbox{"two tails"}) &= P(HTT \cup THT \cup TTH) = 3/8 \\ P(\mbox{"three tails"}) &= P(TTT) = 1/8 \,. \end{align*}\] One can easily imagine how, if we were to flip a coin 50 times, or 500 times, the generation of tables would be onerous. A better way to portray the information in a sample space is to use a random variable. In probability theory, a random variable \(X\) is a measurable function mapping from a set of outcomes (here, \(\Omega\)) to a measurable space (here, \(\mathbb{R}^n\), where \(\mathbb{R}^1 = \mathbb{R}\) is the real-number line). (See Figure 1.9.) While \(X\) is a function, it is natural in an undergraduate context to think of it as a variable whose value is an experimental outcome. For instance, if we define \(X\) as being “the number of tails observed in three flips of a fair coin,” then \(P(X=1) = 3/8\). (Below, we will complete our transition away from laboriously built probability tables by introducing mathematical functions\(-\)probability mass functions or probability density functions associated with distributions\(-\)that allow us to compute probabilities more generally, as a function of an arbitrary observed value \(X=x\) or a range of observed values \(X \in [a,b]\).)

Figure 1.9: A random variable is a function that maps events in \(\Omega\) to the real-number line \(\mathbb{R}\).

There are a few initial things to note about random variables. First, they are conventionally denoted with capital Latin letters (e.g., \(X\), \(Y\), \(Z\)). Second, note the words “if we define” above. There is no unique random variable associated with a sample space. In addition to \(X\), we could just as easily have defined \(Y\) as the number of heads observed, or \(Z\) as having value 0 if at least one head and at least one tail are observed, and 1 otherwise, etc. Third, and most important, is that random variables come in two types, discrete and continuous:

- A discrete random variable \(X\) maps the sample space \(\Omega\) to countably finite (e.g., \(\{0,1\}\)) or infinite (e.g., \(\{0,1,\ldots,\}\)) outcomes.

- A continuous random variable \(X\) maps the sample space \(\Omega\) to an outcome that is uncountably infinite (e.g., \([0,1]\) or \([0,\infty)\)).

1.6 Probability Distributions

A probability distribution is a mapping \(P: \Omega \rightarrow \mathbb{R}^n\) that describes how probabilities are distributed across the values of a random variable. (A random variable simply maps events in a sample space to a measurable space like the real-number line, without regard to the probability of the event. A distribution adds this additional layer of information.) There are different ways to mathematically define a distribution; here, we concentrate upon

- the probability mass function (or pmf): if \(X\) is a discrete random variable, this represents the probability that \(X\) takes on a particular value \(x\), i.e., \(p_X(x) = P(X = x)\); or

- the probability density function (or pdf): if \(X\) is a continuous random variable, this represents the probability density (think of this as the “probability per unit interval”) at the value \(x\), i.e., \(f_X(x)\).

To be clear, we can represent a given distribution with a pmf or a pdf, but not both simultaneously; the choice is dictated by whether \(X\) is discretely or continuously valued. (It is possible to mix probability masses and densities into a single distribution, however. See the example below.) Later, we introduce two alternatives to pmfs/pdfs: the cumulative distribution function (cdf), and the moment-generating function (mgf).

Probability mass and density functions have two fundamental constraints: (a) they are non-negative; and (b) they sum or integrate to 1:

| pmf | |

|---|---|

| \(p_X(x) \in [0,1]\) | \(f_X(x) \in [0,\infty)\) |

| \(\sum_x p_X(x) = 1\) | \(\int_x f_X(x) dx = 1\) |

Before continuing on to discussing properties of distributions, we reiterate the point that one cannot interpret a pdf \(f_X(x)\) as the probability of sampling the value \(x\)! It is, again, a probability density function and not a probability itself; to determine a probability, we utilize integration: \[ P(a \leq X \leq b) = \int_a^b f_X(x) dx \,. \] To drive home the point that a pdf does not itself represent probability, we note that for any value \(a\), \[ P(X = a) = \int_a^a f_X(x) dx = 0 \,. \]

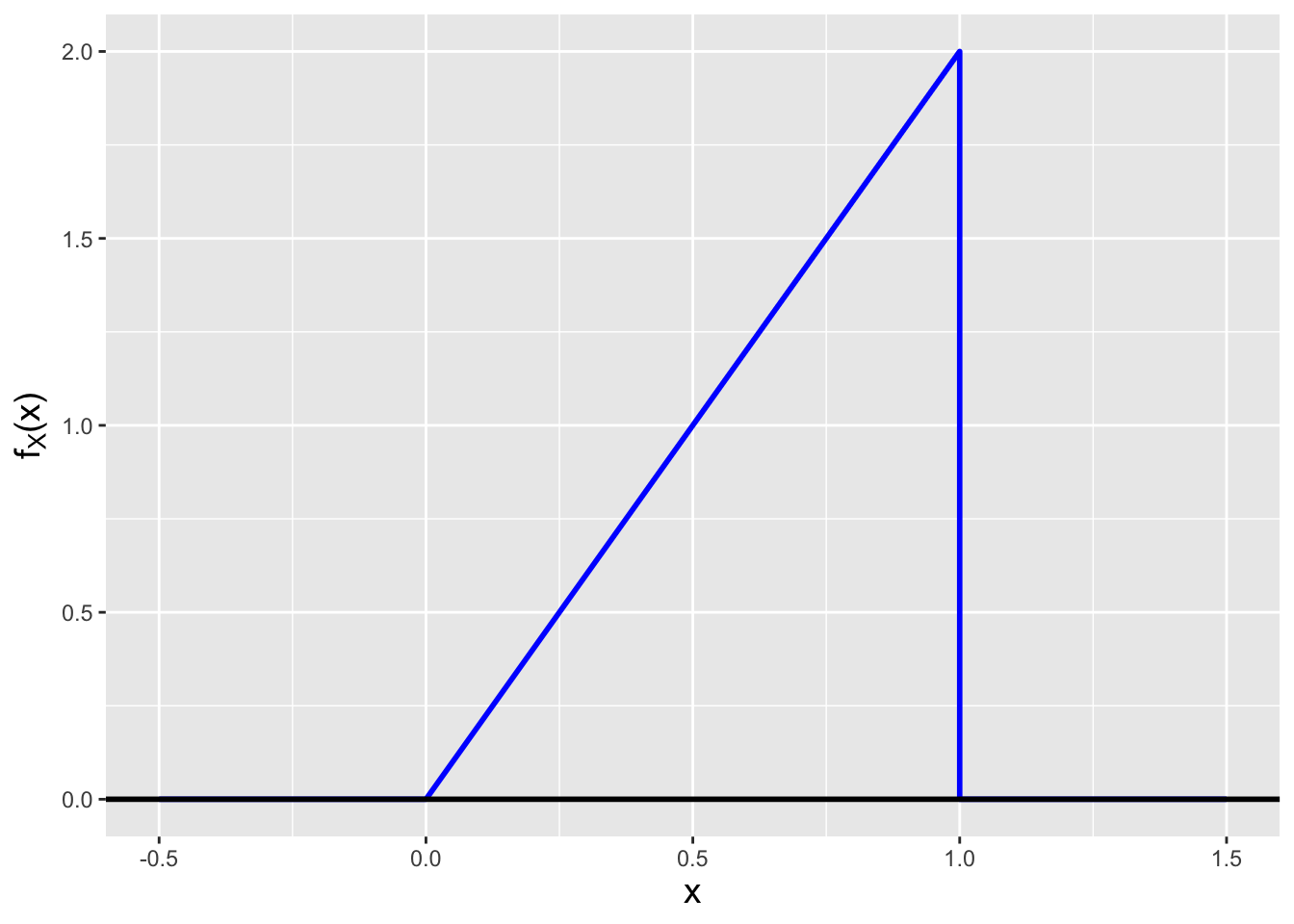

1.6.1 A Simple Probability Density Function

Let’s assume that we have defined the following pdf: \[ f_X(x) = \left\{ \begin{array}{cl} 2x & 0 \leq x \leq 1 \\ 0 & \mbox{otherwise} \end{array} \right. \,. \] We visualize this pdf in Figure 1.10.

Figure 1.10: The probability density function \(f_X(x) = 2x\), for \(0 \leq x \leq 1\).

This pdf helps to illustrate many of the points made above. Note that it is (a) non-negative and although its maximum value is \(> 1\), (b) it integrates > to 1. (We need not actually integrate here, as geometry is sufficient: the area under the curve is 1/2 \(\times\) 1 \(\times\) 2 = 1.)

How would one interpret this pdf? Where its value is larger, we are more likely to sample data. Full stop. What is the probability of sampling a datum between 0 and 1/2? Again, we can use geometry and see that the area under the curve is 1/2 \(\times\) 1/2 \(\times\) 1 = 1/4. (Which means the probability of sampling a datum between 1/2 and 1 must be \(1 - 1/4 = 3/4\).)

Let’s extend this example a bit by adding a condition. For instance, what is the probability of sampling a datum between 1/4 and 1/2, given that we sample a datum between 0 and 3/4? In analogy with how we worked with conditional probabilities above, we can write that \[ P(1/4 \leq X \leq 1/2 \, \vert \, 0 \leq X \leq 3/4) = \frac{P(1/4 \leq X \leq 1/2 \cap 0 \leq X \leq 3/4)}{P(0 \leq X \leq 3/4)} = \frac{P(1/4 \leq X \leq 1/2)}{P(0 \leq X \leq 3/4)} \,. \] (How does this differ from computing the unconditional probability \(P(1/4 \leq X \leq 1/2)\)? Technically, it does not…we could write out a similar expression to the one above. But we note that the denominator would be \(P(0 \leq X \leq 1) = 1\) and thus it would “go away.”) Using geometrical arguments, we should be able to convince ourselves that the answer we seek is 1/3.

One last point we will make here is that for a continuous distribution, it is meaningless to compute \(P(X = a)\). For instance: \[ P\left(X = \frac{1}{2}\right) = \int_{1/2}^{1/2} 2 x dx = \left. x^2 \right|_{1/2}^{1/2} = \frac{1}{4} - \frac{1}{4} = 0 \,. \] What are we to make of this? Recall that a pdf is a probability density function, and that one can think of it as having units of probability per unit interval…so one has to integrate the pdf over an interval of length greater than zero to derive a non-zero probability value.

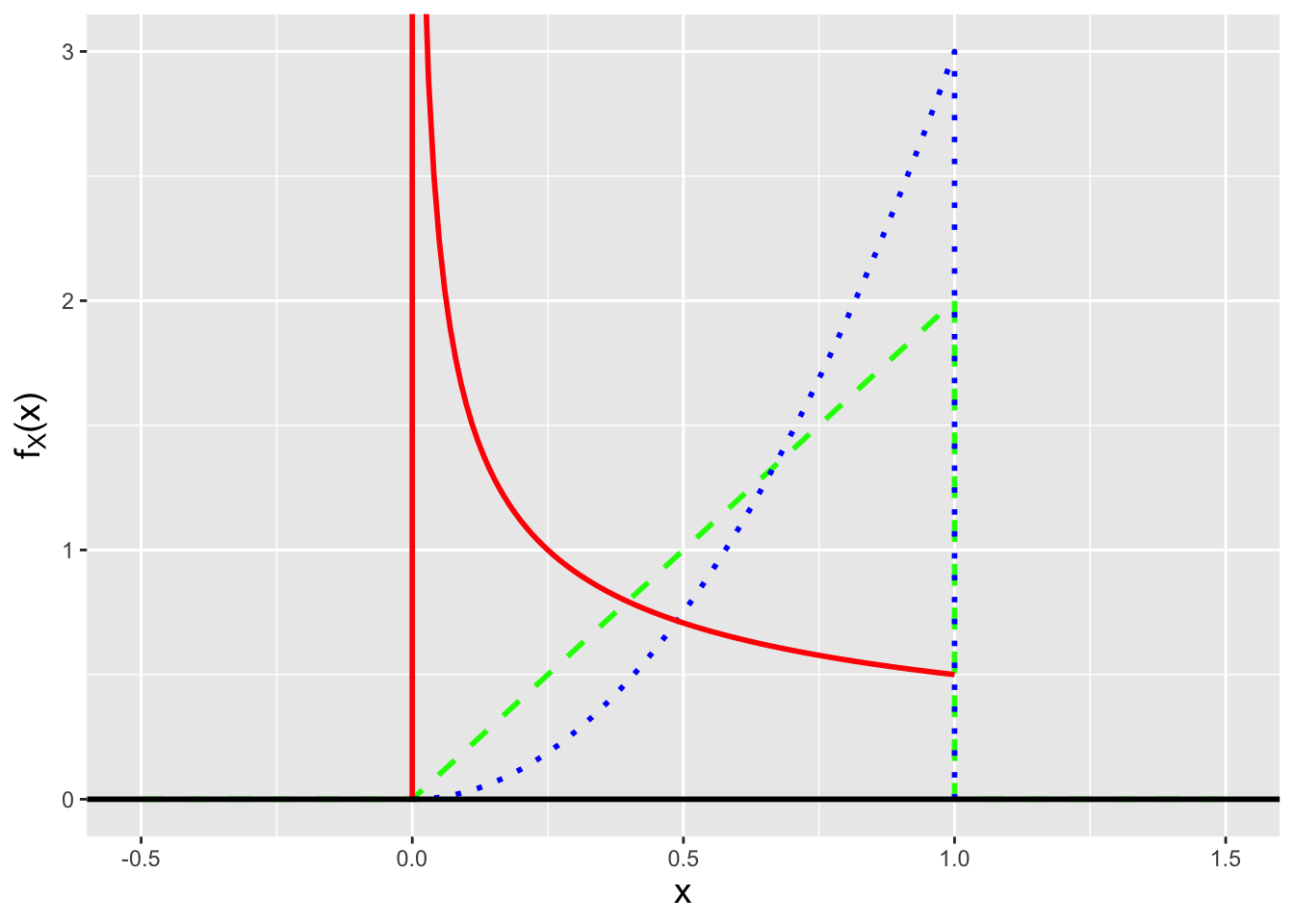

1.6.2 Shape Parameters and Families of Distributions

In the previous example, the stated pdf was the stated pdf: there was no means by which to change its shape. We can generalize it by utilizing a shape parameter: \[ f_X(x \vert \theta) = \left\{ \begin{array}{cl} \theta x^{\theta-1} & 0 \leq x \leq 1 \\ 0 & \mbox{otherwise} \end{array} \right. \,, \] where in the previous example, \(\theta = 2\). It is conventional to denote a population parameter or a set of such parameters with the Greek letter \(\theta\) (theta, pronounced “thay-tah”). Here, \(\theta\) represents a single, constant parameter whose value is \(> 0\). (If \(\theta\) were negative, for instance, \(f_X(x \vert \theta)\) would be \(< 0\), which is not allowed!) \(f_X(x \vert \theta)\), with \(\theta \in \Theta = (0,\infty)\), is perhaps confusingly dubbed a family of distributions. (One might think that a family would refer to a set of different mathematical forms for pdfs, like \(\theta x^{\theta-1}\) and \(e^{-x/\theta}/\theta\), etc., but it actually refers to the fact that \(\theta\) can take on more than one value, yielding a family of shapes as illustrated in Figure 1.11.)

Figure 1.11: Examples of the family of pdfs \(f_X(x \vert \theta) = \theta x^{\theta-1}\) for \(0 \leq x \leq 1\), with parameters \(\theta =\) 1/2 (solid red line), 1 (dashed green line), and 2 (dotted blue line).

1.6.3 A Simple Probability Mass Function

Let’s play a game: we throw a dart at a board that has ten numbers on it, 1 through 10. Assume that we are guaranteed to hit the board, and that the regions associated with each number have the exact same size. If we hit an even number, we get 0 points, while if we hit an odd number, we get 2 points. Furthermore, if we hit a prime number, we get a bonus of 1 point. What is the probability mass function for the number of points we will score given a single throw of the dart?

If we hit the 4, 6, 8, or 10, we get 0 points. (2 is prime, so we’d get a bonus of 1 point by hitting that.) If we hit the 9, we get 2 points, and if we hit the 1, 3, 5, or 7, we get 3 points. Hence the probability mass function is

| \(x\) | \(p_X(x)\) |

|---|---|

| 0 | 4/10 |

| 1 | 1/10 |

| 2 | 1/10 |

| 3 | 4/10 |

We see that this pmf is (a) non-negative and (b) has values \(p_X(x)\) that lie between 0 and 1. Because we are dealing with masses and not densities, probability calculations involve summations (while taking care to note whether one or both limits of summation lie at a mass, and if so, whether or not the inequality is, e.g., \(>\) or \(\geq\)). For instance, what is the probability of achieving a score greater than 1 point? \(P(X > 1) = p_X(2) + p_X(3) = 1/2\). What about a score of 3 points, given a score greater than 0 points? \[ P(X = 3 \vert X > 0) = \frac{P(X = 3 \cap X > 0)}{P(X > 0)} = \frac{P(X = 3)}{P(X > 0)} = \frac{p_X(3)}{p_X(1)+p_X(2)+p_X(3)} = \frac{4}{1+1+4} = \frac{2}{3} \,. \]

1.6.4 A More Complex Example Involving Both Masses and Densities

There is no reason why masses and densities cannot be combined into a single probability distribution. For instance, perhaps we have the following: \[ h_X(x) = \left\{ \begin{array}{cc} 1/2 & x \in [0,1] \\ 1/2 & x = 2 \end{array} \right. \,. \] There is nothing special about this function; the mathematics of probability calculations is just a tad more complicated than before. For instance, what is the probability of sampling a value greater than 3/4? \[ P(X > 3/4) = \int_{3/4}^1 h_X(x) dx + h_X(2) = \frac{1}{2} \left. x \right|_{3/4}^1 + \frac{1}{2} = \frac{1}{8} + \frac{1}{2} = \frac{5}{8} \,. \] Integrate over the domain(s) where densities are defined and sum over the domain(s) where masses are defined. Done!

1.7 Characterizing Probability Distributions

A probability distribution represents the rates of occurrence of different experimental outcomes. Can we determine an “average” outcome? In other words, can we determine what value to expect when we next run the experiment? The answer is yes: this is the expected value of a random variable (or expectation) and it is the weighted average of all possible experimental outcomes: \[\begin{align*} E[X] &= \frac{\sum_x x p_X(x)}{\sum_x p_X(x)} = \sum_x x p_X(x) ~~ \mbox{(discrete r.v.)} \\ &= \frac{\int_x x f_X(x) dx}{\int_x f_X(x) dx} = \int_x x f_X(x) dx ~~ \mbox{(continuous r.v.)} \,. \end{align*}\] In each case, the denominator disappears because it equals 1, by definition. Note that Greek letter \(\mu\) (mu, pronounced “myoo”), which conventionally denotes the mean value of a pdf or pmf, is also sometimes used interchangeably with \(E[X]\). See Figure 1.12.

It is important here to note the following:

- The input to the expected value operator is (usually!) a random variable, so that input is capitalized. In other words, we always write \(E[X]\) and not \(E[x]\). (\(x\) is just a coordinate on the real-number line…its expected value is simply \(x\) itself. See “Expected Value Tricks” in the examples below.)

- The expected value is a constant; it is not random! For any given pmf or pdf, the average value of a sampled datum does not change from experiment to experiment…there is no randomness.

Now, because the expected value is simply a weighted average, we can write down a more general expression for it: \[\begin{align*} E[g(X)] &= \frac{\sum_x g(x)p_X(x)}{\sum_x p_X(x)} = \sum_x g(x) p_X(x) ~~ \mbox{(discrete r.v.)} \\ &= \frac{\int_x g(x)f_X(x) dx}{\int_x f_X(x) dx} = \int_x g(x) f_X(x) dx ~~ \mbox{(continuous r.v.)} \,. \end{align*}\] This has been dubbed the “Law of the Unconscious Statistician” (e.g., Ross 1988, as noted by Casella & Berger 2002) due to the fact that we all think of it a definition…and not the result of a theorem.

A probability distribution may have an extended domain (e.g., \([0,\infty)\)) but often the probability mass or density is concentrated in a relatively small interval. A metric that represents the square of the “width” of that interval is the variance, which is defined as \[ V[X] = \sigma^2 = E[(X-\mu)^2] = E[X^2] - (E[X])^2 \,. \] The “width” itself\(-\)the square root of the variance\(-\)is dubbed the standard deviation and is denoted with the Greek letter \(\sigma\) (“sigma,” pronounced “SIG-muh”). Note that because the variance is the expected value of a squared quantity, it is always non-negative. (And like the expected value, it is a constant.) See Figure 1.12.

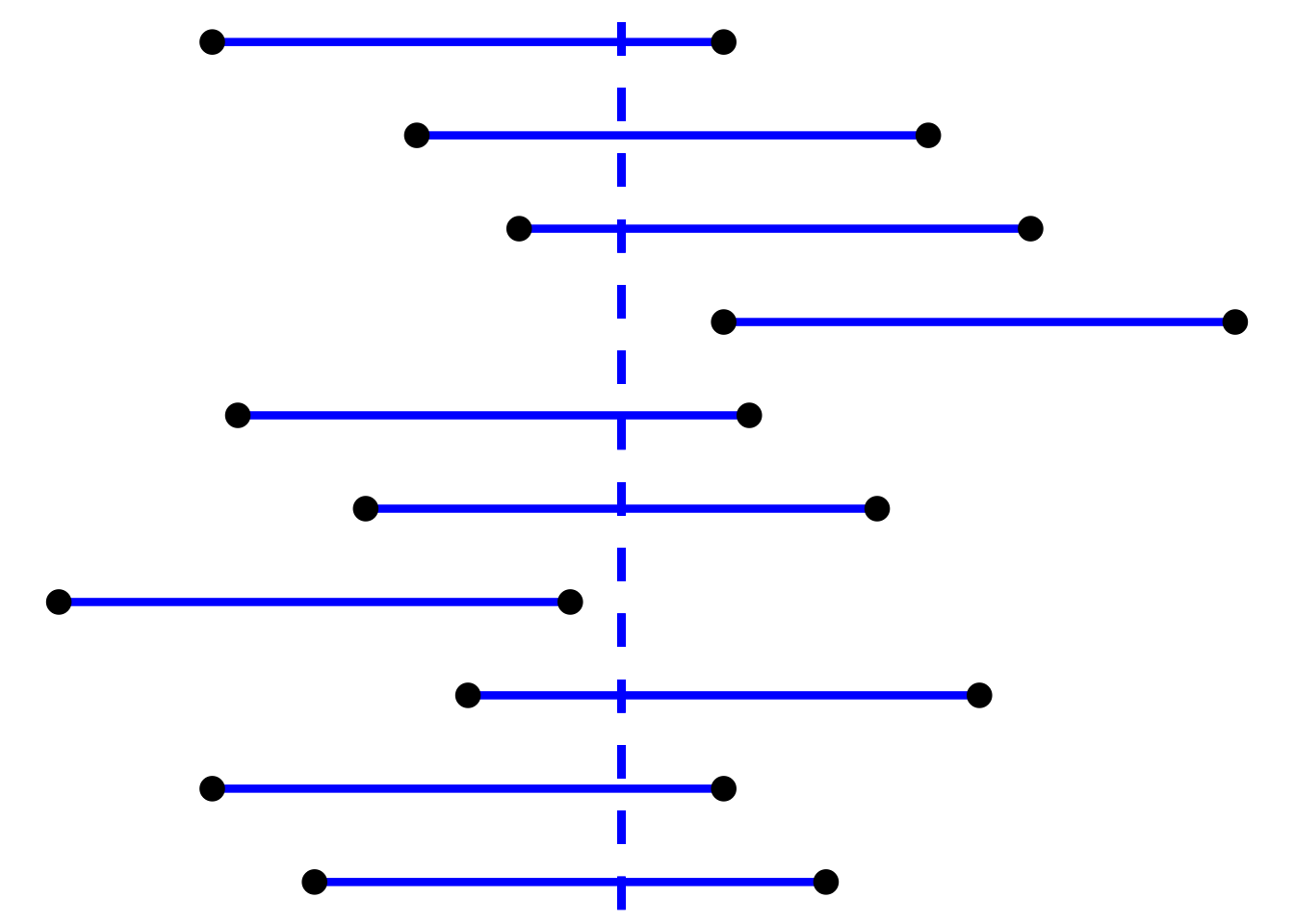

Figure 1.12: Examples of a probability mass function (left) and a probability density function (right), with the expected values \(E[X]\) indicated by the vertical lines and the distribution “widths” (here, \(E[X]-\sqrt{V[X]}\) to \(E[X]+\sqrt{V[X]}\)) indicated by the horizontal lines.

Both the expected value and variance are examples of moments of probability distributions. Moments represent elements of a distribution’s location and shape. In the end, moments are “just” expected values computed via the Law of the Unconscious Statistician, ones that are defined around the coordinate origin (\(E[X^k]\)), and ones that are defined around the distribution’s mean, \(\mu\) (\(E[(X-\mu)^k]\)). Other metrics used to describe a probability distribution, such as its skewness, are also related to moments. (One definition of skewness is Fisher’s moment coefficient: \(E[(X-\mu)^3]/\sigma^3\).)

1.7.1 Expected Value Tricks

The expected value operator \(E[X]\) has the following properties.

- If we multiply \(X\) by a constant \(a\), that constant can be moved out of the operator, i.e., \[ E[aX] = aE[X] \,. \]

- The expected value of a constant is simply that constant, i.e., \[ E[b] = b \,. \]

- The expected value operator is a linear operator, which means that we can split it at \(+\)’s and \(-\)’s, with the sign being preserved: \[ E[aX - b] = E[aX] - E[b] = aE[X] - b \,. \]

If, for example, we define a random variable \(Y = 10X - 5\) and we know that \(E[X] = 4\), then we can write that \(E[Y] = 10E[X] - 5 = 35\).

Note that we have said nothing about \(E[XY]\) here. In general, we cannot simplify this expression at all, unless \(X\) and \(Y\) are independent random variables (a concept we haven’t discussed yet), in which case \(E[XY] = E[X]E[Y]\).

1.7.2 Variance Tricks

The variance operator \(V[X]\) has the following properties.

- If we multiply \(X\) by a constant \(a\), that constant can be moved out of the operator, but it is then squared, i.e., \[ V[aX] = a^2V[X] \,. \]

- The variance of a constant is zero: \[ V[b] = 0 \,. \]

- The variance operator is a linear operator, which means that we can split it at \(+\)’s and \(-\)’s, with all signs becoming positive: \[ V[aX - b] = V[aX] + V[b] = a^2V[X] + 0 = a^2V[X] \,. \]

If, again, \(Y = 10X - 5\), and if \(V[X] = 2\), then \(V[Y] = 100V[X] = 200\).

1.7.3 The Shortcut Formula for Variance

Above, we indicate that \[ V[X] = E[(X-\mu)^2] = E[X^2] - (E[X])^2 \,. \] This is the so-called shortcut formula for determining the variance of a distribution. We can derive it as follows, making use of the “tricks” we show above: \[\begin{align*} V[X] = E[(X-\mu)^2] &= E[X^2 - 2X\mu + \mu^2] ~~\mbox{(expand)} \\ &= E[X^2] - E[2X\mu] + E[\mu^2] ~~\mbox{(split on + and -)} \\ &= E[X^2] - 2\mu E[X] + \mu^2 ~~\mbox{(slide constants out)}\\ &= E[X^2] - 2(E[X])^2 + (E[X])^2 = E[X^2] - (E[X])^2 \,, \end{align*}\] where in the last line we make use of the fact that \(E[X] = \mu\). Note what this shortcut formula means: it means that to compute a variance, it is sufficient to compute both \(E[X]\) and \(E[X^2]\) and combine the results. It also means that if we are given any two of the quantities \(E[X]\), \(E[X^2]\), and \(V[X]\), we can immediately derive the third one.

1.7.4 The Expected Value and Variance of a Probability Density Function

In the last section, we define the pdf \[ f_X(x \vert \theta) = \left\{ \begin{array}{cl} \theta x^{\theta-1} & 0 \leq x \leq 1 \\ 0 & \mbox{otherwise} \end{array} \right. \,, \] For this pdf, the expected value is \[ E[X] = \int_0^1 x f_X(x) dx = \int_0^1 \theta x^\theta dx = \frac{\theta}{\theta+1} \left. x^{\theta+1} \right|_0^1 = \frac{\theta}{\theta+1} \,. \] As for the variance, we utilize the shortcut formula, which means that we compute \(E[X^2]\) first: \[ E[X^2] = \int_0^1 x^2 f_X(x) dx = \int_0^1 \theta x^{\theta+1} dx = \frac{\theta}{\theta+2} \left. x^{\theta+2} \right|_0^1 = \frac{\theta}{\theta+2} \,. \] Hence the variance is \[ V[X] = E[X^2] - (E[X])^2 = \frac{\theta}{\theta+2} - \frac{\theta^2}{(\theta+1)^2} = \frac{\theta}{(\theta+2)(\theta+1)^2} \,. \]

We see that the value for our new pdf is similar: 0.643.

1.8 Working With R: Probability Distributions

In this section, we introduce R as a tool with which to, e.g.,

visualize and numerically manipulate probability distributions.

We start with the concept of the vector:

(The reader should feel free to open R and type in these lines

at the Console prompt.)

In this example, we define a vector of character strings which we

name x; here, x has length 1:

## [1] 1c() is an R function whose arguments (e.g., "Hello, world!") are

what are to be the constituents of the vector.

The arrow is an assignment operator; = is equally valid. We can create

a numeric vector as follows:

## [1] 1 2 4 8## [1] 4but when the numbers follow a (long) sequence, it can be easier to utilize

seq():

x <- seq(0,pi,by=0.01) # 0, 0.01, 0.02, ..., 3.14 (but not 3.15)

# pi and Inf are built-in constants

length(x)## [1] 315When it comes to probability distributions, what might we want to do first?

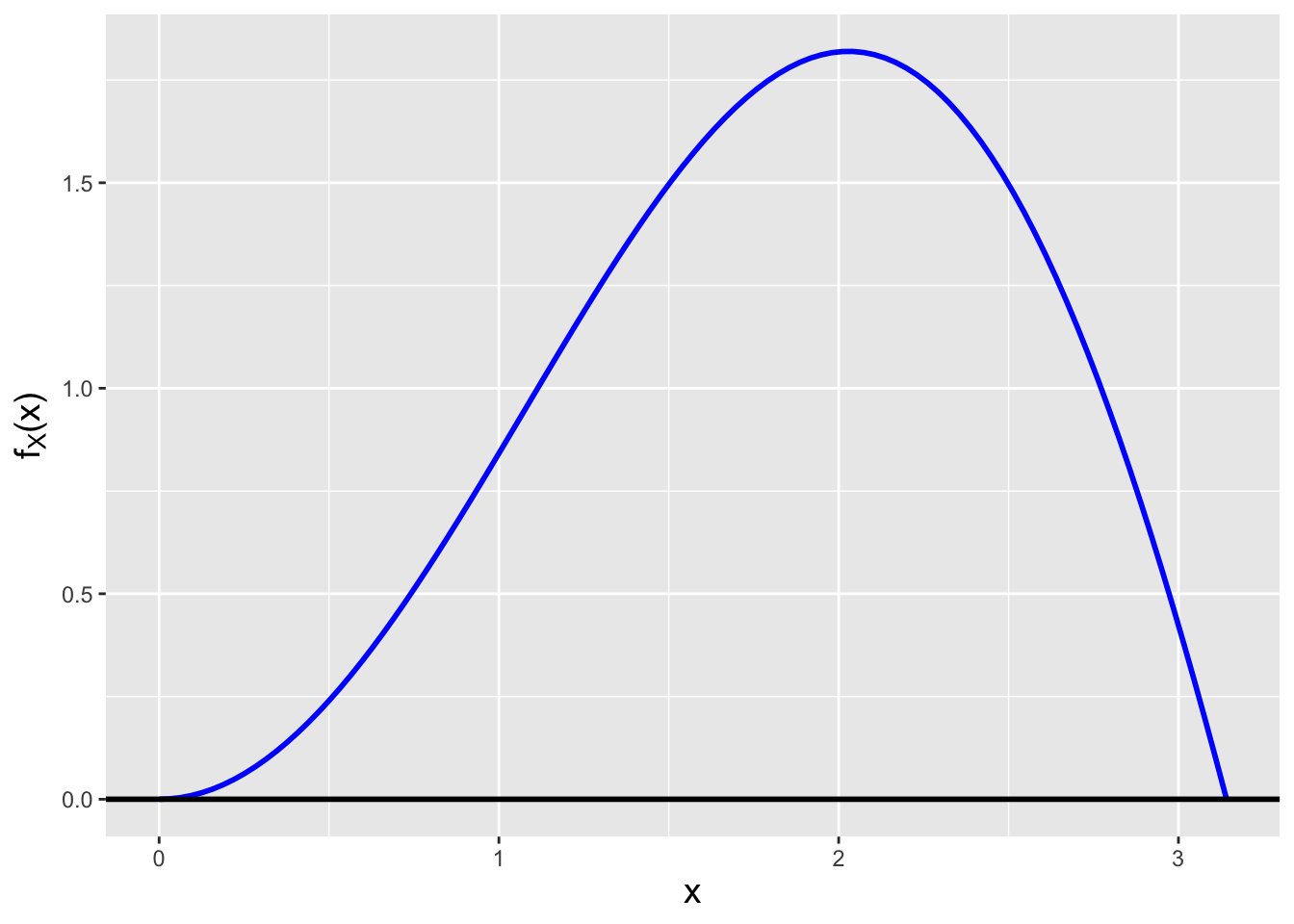

Let’s suppose that our data are sampled from this pdf:

\[

f_X(x) = \left\{ \begin{array}{cl} c x \sin x & 0 \leq x \leq \pi \\ 0 & \mbox{otherwise} \end{array} \right. \,,

\]

\(c\) is a normalization constant, meaning it has some value (to be

determined) such that the integral of \(f_X(x)\) from 0 to \(\pi\) is 1.

Below, we will show how we can determine the value of \(c\) using R code.

But first, we will assume \(c = 1\) and

determine if \(f_X(x)\) is non-negative (as it should be!):

## [1] 0Note how we do not have to use a for-loop here, as one of the hallmarks of

R is vectorization: if R sees that x is a vector, it will work with

the vector directly and thus f.x will itself be a vector with the same

length as x (and with the first element of x corresponding to the first

element of f.x, etc.). We see that the minimum value is 0.

If we want to go further, we can make a simple plot (see Figure 1.13):

x <- seq(0,pi,by=pi/100)

f.x <- x*sin(x)

df <- data.frame(x=x,f.x=f.x)

ggplot(data=df,aes(x=x,y=f.x)) +

geom_line(col="blue",lwd=1) +

geom_hline(yintercept=0,lwd=1) +

labs(y = expression(f[X]*"(x)")) +

base_theme

Figure 1.13: The function \(x \sin x\).

The ggplot() function puts x on the \(x\)-axis and f.x on the \(y\)-axis.

We then connect each point with a line (geom_line()),

make the line blue (col="blue"),

overlay a horizontal red line at \(y = 0\) (geom_hline(),

with yintercept=0),

and change the default \(y\)-axis label to one that includes the subscript “X”

(labs()).

The next step is to determine the normalization constant. Let’s suppose

that we have forgotten integration by parts and thus we are not sure

how to integrate \(f_X(x)\). We can code numerical integration in R using a

combination of a function that evaluates \(f_X(x)\) and a call to the

built-in function integrate(), which performs numerical integration:

## 3.141593 with absolute error < 3.5e-14We see that the integral is \(\pi\), so to make the pdf valid, we have to set \(c\) to \(1/\pi\): \[ f_X(x) = \left\{ \begin{array}{cl} \frac{1}{\pi} x \sin x & 0 \leq x \leq \pi \\ 0 & \mbox{otherwise} \end{array} \right. \,. \]

Let’s suppose we sample data from this distribution.

What is the probability that the next observed datum will

have a value between 1 and 2? We can use integrate to figure that

out:

f <- function(x) {

return(x*sin(x)/pi) # we now include the normalization constant

}

integrate(f,1,2) # integrate the function f between 1 and 2## 0.4585007 with absolute error < 5.1e-15The answer is 0.4585…there is a 45.85% chance that the next datum will have a value between 1 and 2.

What is the expected value, \(E[X]\), of \(f_X(x)\)?

## 1.868353 with absolute error < 2.1e-14The expected value is 1.868. Given the appearance of the pdf, this number makes sense.

1.8.1 Numerical Integration and Conditional Probability

Let’s suppose that we would like to numerically evaluate \[ P(1 \leq X \leq 2 \vert X > 0.5) = \frac{P(1 \leq X \leq 2 \cap X > 0.5)}{P(X > 0.5} = \frac{P(1 \leq X \leq 2)}{P(X > 0.5} \,. \] As we have already defined At first, it would appear that all we have to do is to call

integrate()twice

However, this will not work, since

integrate()returns a list, not a single numerical value. So we have to figure out where the value of the integral value is stored:

## [1] "value" "abs.error" "subdivisions" "message"

## [5] "call"What we want is

value. To reference the value directly, we use a dollar sign, as shown here:

## [1] 0.3836833Done. Our conditional probability is 0.4645.

1.8.2 Numerical Integration and Variance

Above, we compute the expected value of \(f_X(x)\). For the variance, we adapt the same code to compute \(E[X^2]\), then utilize the shortcut formula:

f <- function(x) {

return(x^2*sin(x)/pi) # same code as above

}

E.X <- integrate(f,0,pi)$value

f <- function(x) {

return(x^3*sin(x)/pi) # add one more power of x

}

V.X <- integrate(f,0,pi)$value - E.X^2

V.X ## [1] 0.3788611## [1] 0.6155169The variance is 0.379 and the standard deviation is 0.616. We interpret these numbers as saying that the majority of the observed data will lie between \(1.868 - 0.616 = 1.252\) and \(1.868 + 0.616 = 2.484\). If we recall introductory statistics, the proportion of values within one standard deviation of the mean for a normal distribution (i.e., a bell curve) is 0.683…but that value changes from distribution to distribution. What is the value here?

f <- function(x) {

return(x*sin(x)/pi) # back to the original pdf

}

integrate(f,E.X-sqrt(V.X),E.X+sqrt(V.X))$value## [1] 0.642609We see that the value for our new pdf is similar: 0.643.

1.9 Cumulative Distribution Functions

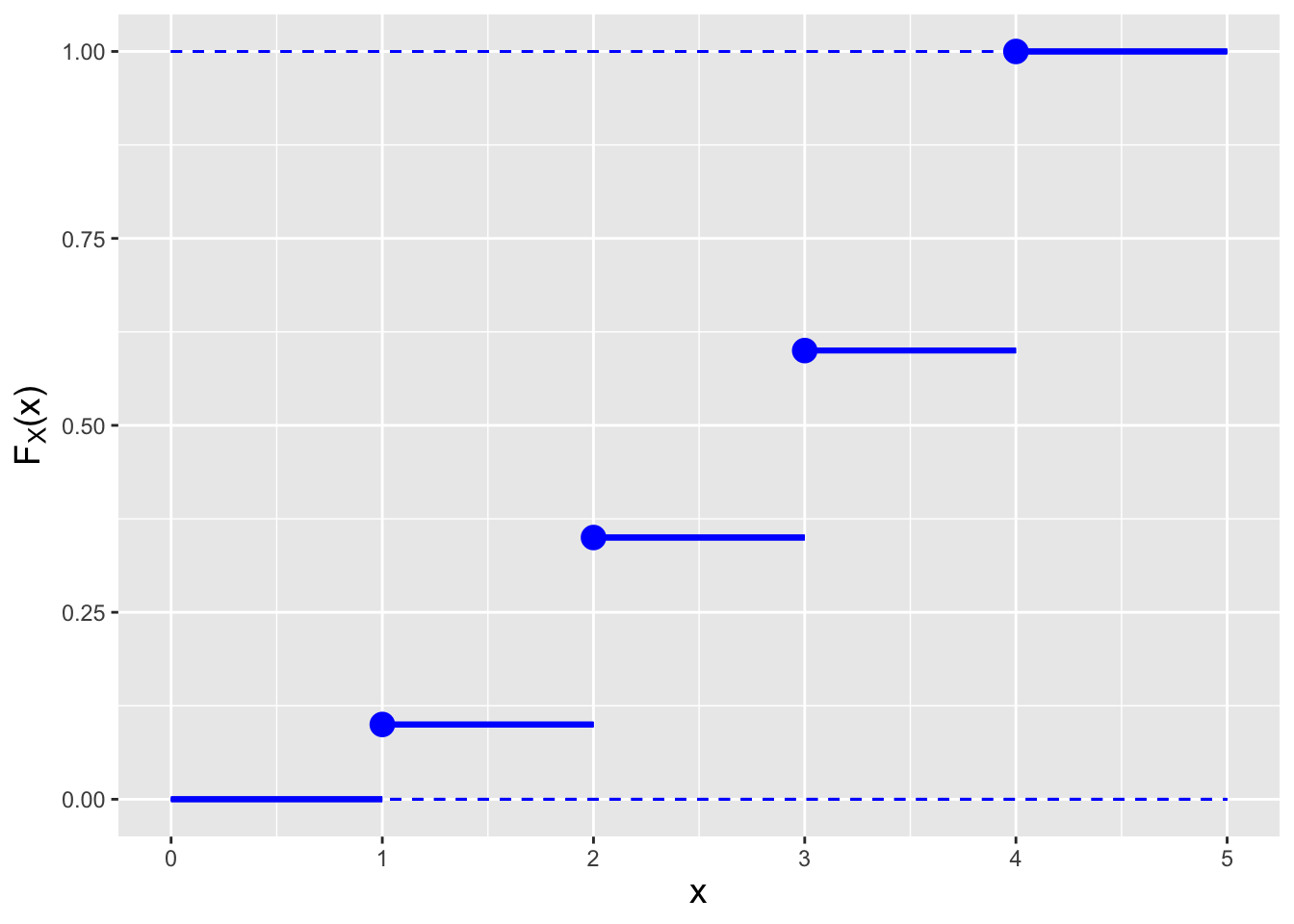

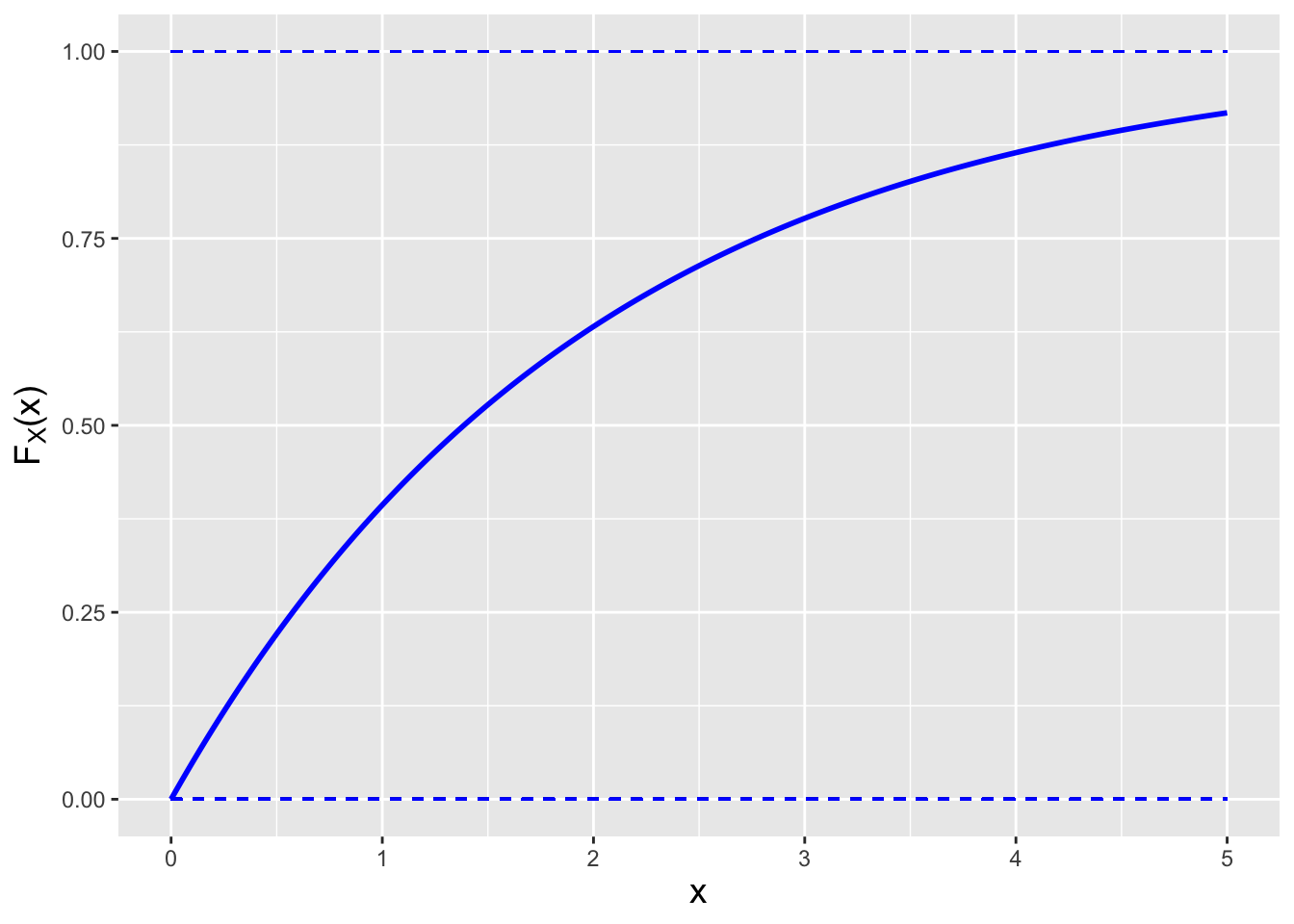

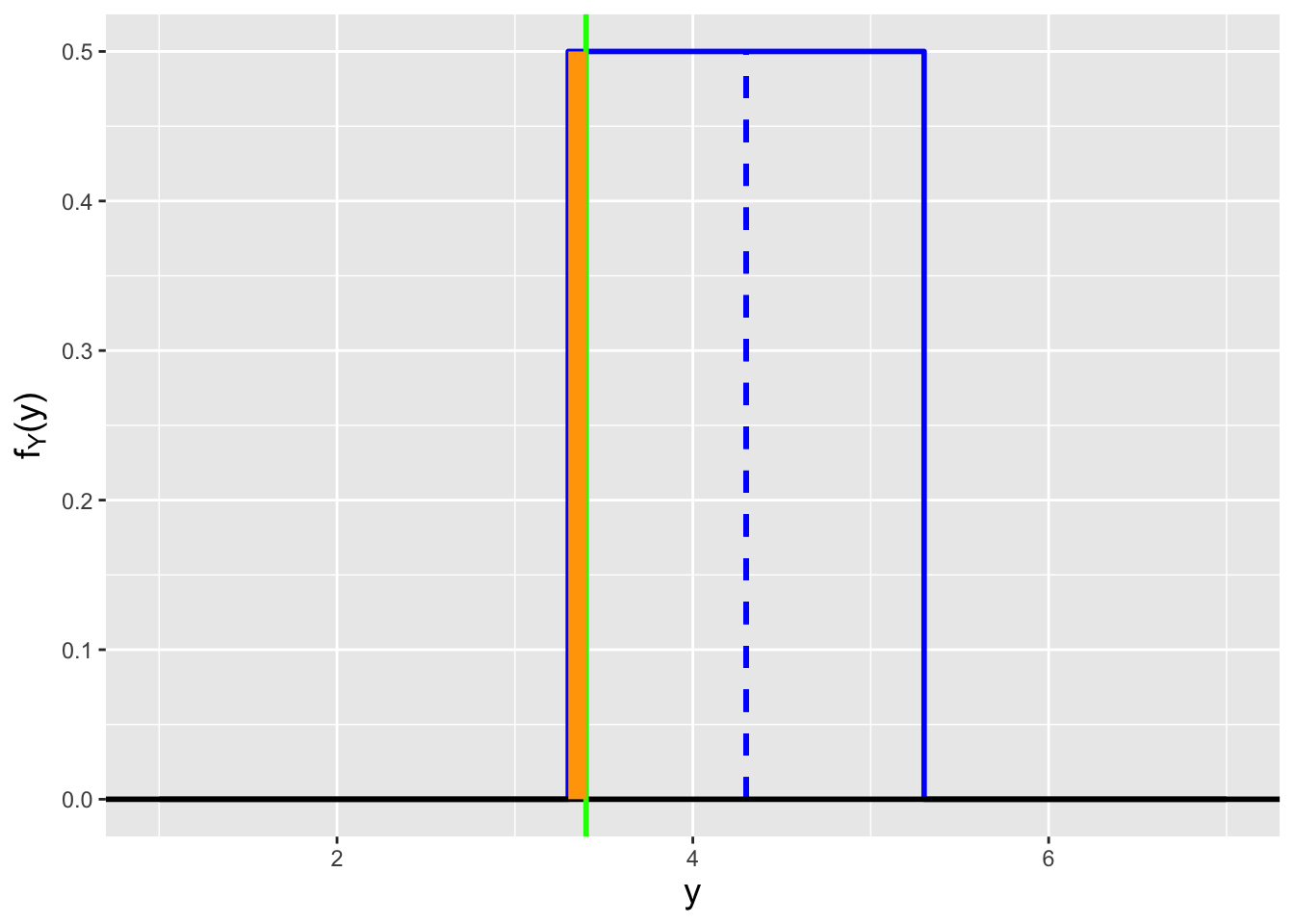

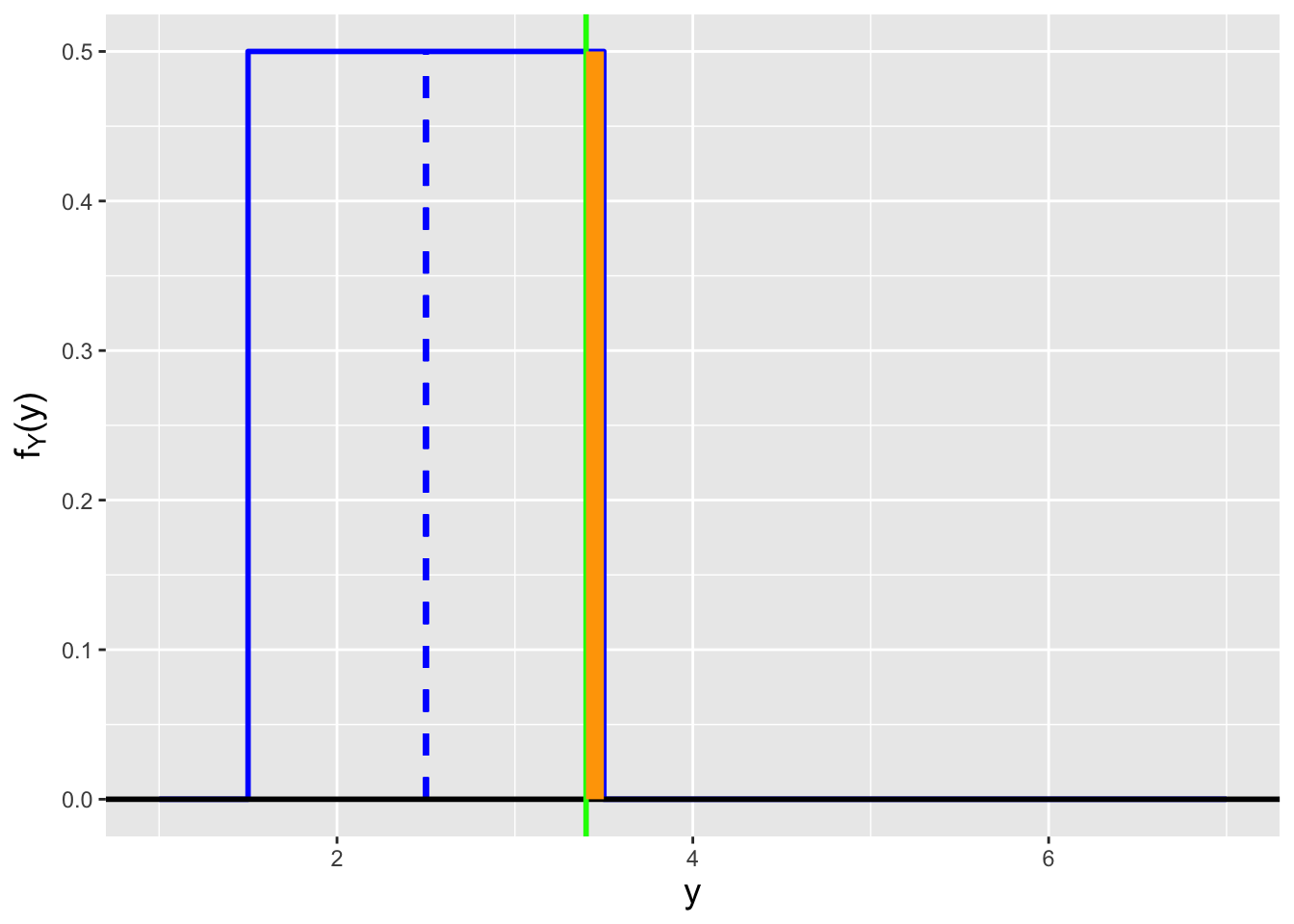

A cumulative distribution function (a cdf) is another means by which to mathematically express a probability distribution, which is to say, if we have a cdf, we can derive the associated pmf/pdf and vice-versa. A cdf is, in the discrete case, a sum of probability masses that lie to the left of a chosen coordinate \(x\) on the real-number line\(-\) \[ F_X(x) = \sum_{y \leq x} p_Y(y) \] \(-\)while in the continuous case it is an integral of the probability density that lies to the left of \(x\)\(-\) \[ F_X(x) = \int_{y \leq x} f_Y(y) dy \,. \] In both cases, we utilize a dummy variable for the pmf/pdf itself because \(x\) is the upper limit of summation/integration. See Figure 1.14, which illustrates how a cdf “collects” all the probability masses or density “to the left” of a given value of \(x\). Given this figure, it should be clear that \(F_X(-\infty) = 0\) (there is nothing to collect “to the left” of \(-\infty\)) and \(F_X(\infty) = 1\) (since, by the time we reach \(x = \infty\), all masses or density have been collected). Another thing to keep in mind is that even if a random variable is discrete, its associated cdf \(F_X(x)\) is continuously valued, because it is defined at all values of \(x\) (although it is technically not “mathematically continuous” due to the steps that \(F_X(x)\) takes at each value of \(x\) where there is a probability mass).

![\label{fig:pdfcdf}Illustration of the relationship between a probability mass function (left) and a probability density function (right) and its associated cdf (evaluated here at $x = 2.5$). For the pmf, the cdf is the sum of the probability masses to the left of $x = 2.5$ (the masses marked in green), while for the pdf, the cdf is the integral over $x \in [0,2.5]$ (the area under curve shown in green).](_main_files/figure-html/pdfcdf-1.png)

![\label{fig:pdfcdf}Illustration of the relationship between a probability mass function (left) and a probability density function (right) and its associated cdf (evaluated here at $x = 2.5$). For the pmf, the cdf is the sum of the probability masses to the left of $x = 2.5$ (the masses marked in green), while for the pdf, the cdf is the integral over $x \in [0,2.5]$ (the area under curve shown in green).](_main_files/figure-html/pdfcdf-2.png)

Figure 1.14: Illustration of the relationship between a probability mass function (left) and a probability density function (right) and its associated cdf (evaluated here at \(x = 2.5\)). For the pmf, the cdf is the sum of the probability masses to the left of \(x = 2.5\) (the masses marked in green), while for the pdf, the cdf is the integral over \(x \in [0,2.5]\) (the area under curve shown in green).

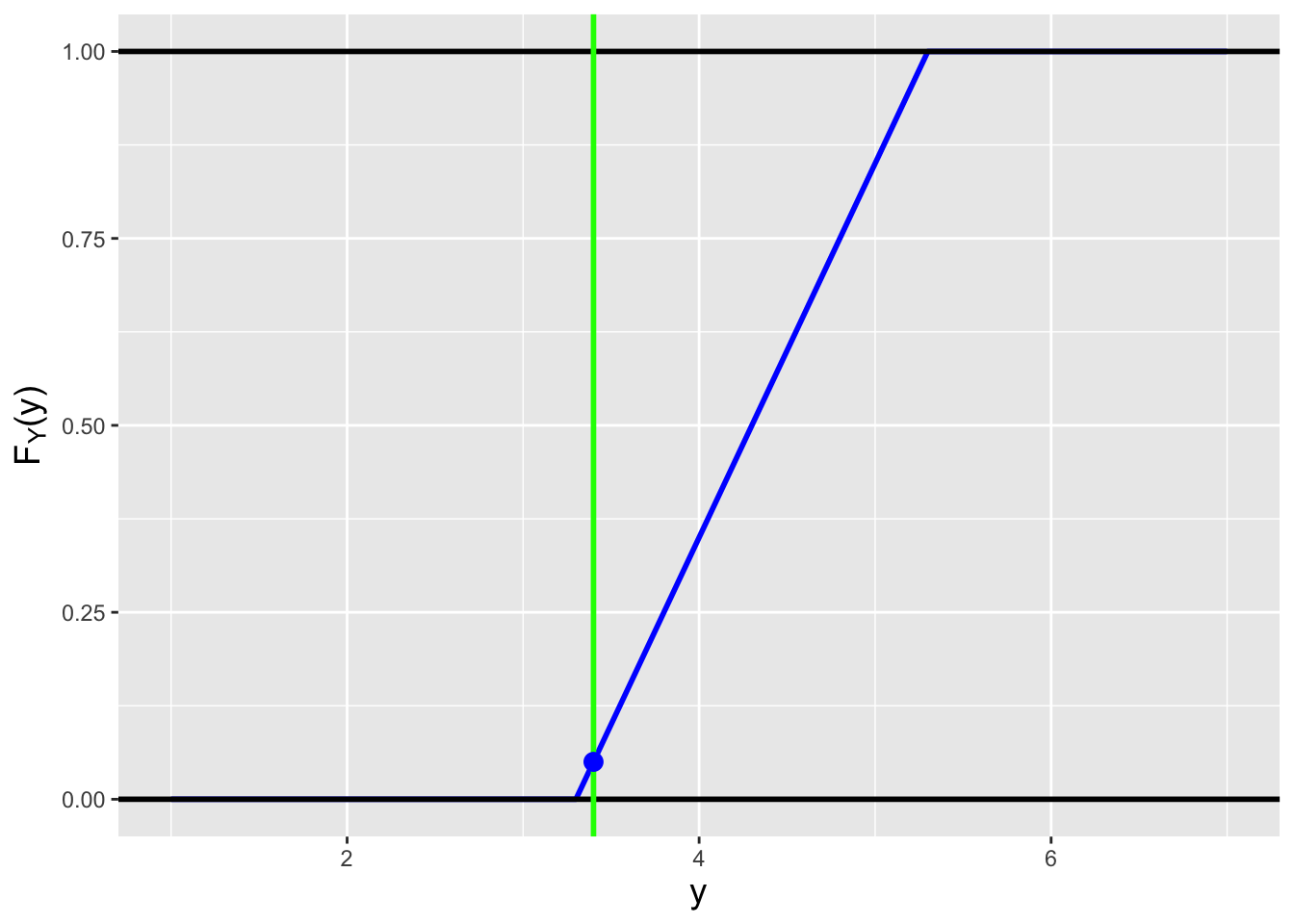

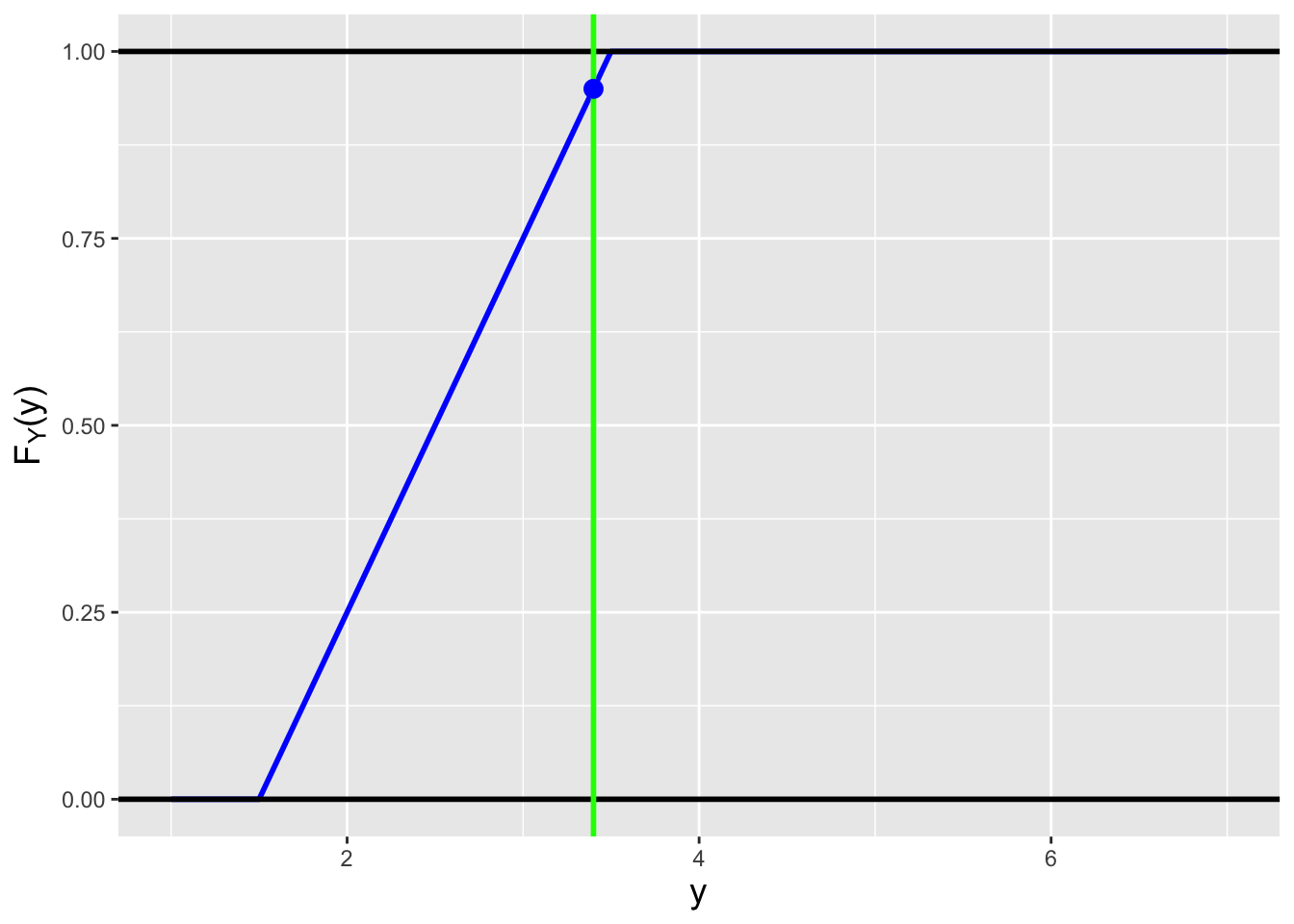

Figure 1.15: Examples of the cdfs \(F_X(x)\) for the probability mass function (left) and the probability density function (right) shown in Figure 1.14.

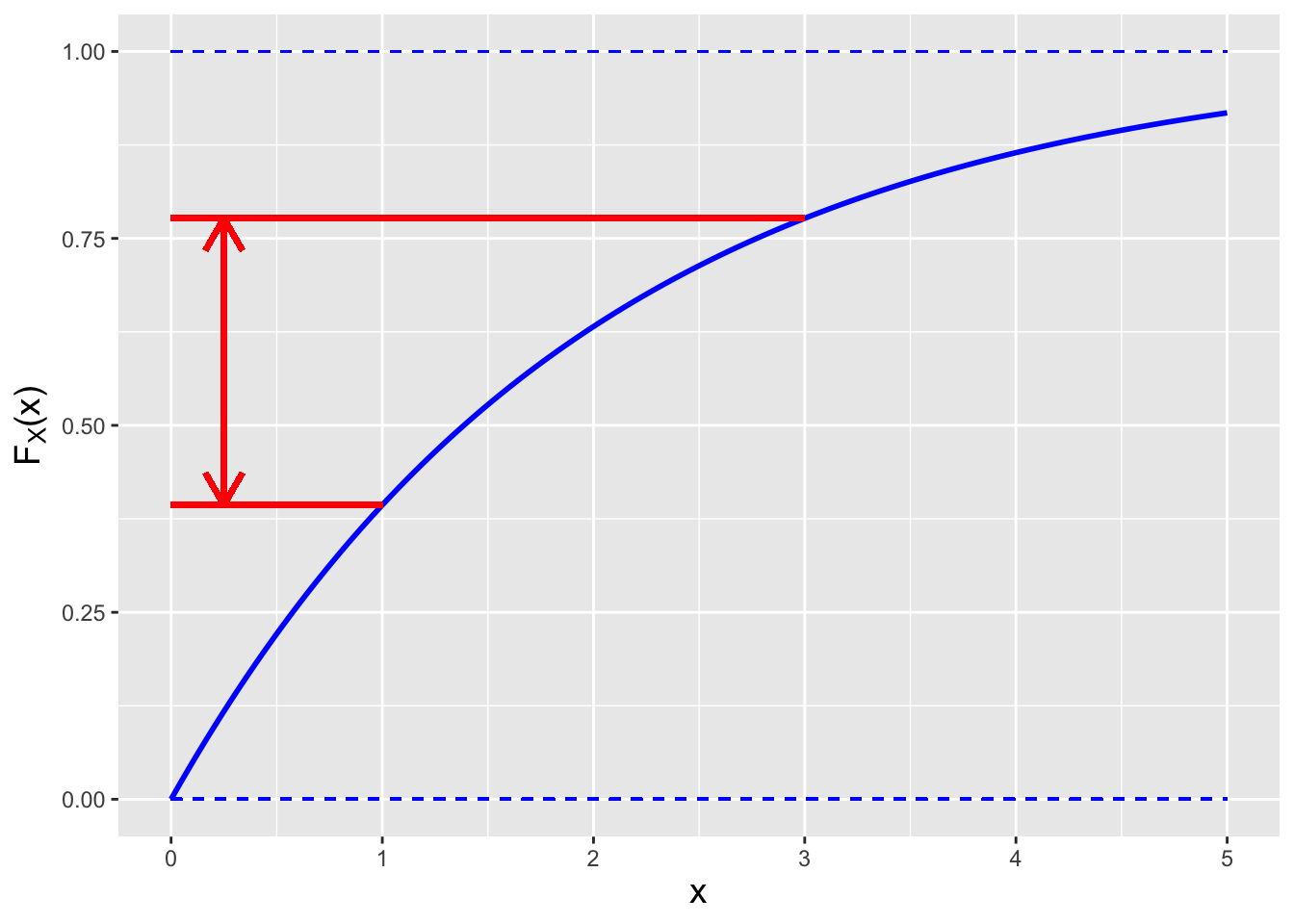

A cdf is useful to have when our goal is to compute the probability of that the value of a sampled random variable lies between \(x = a\) and \(x = b\). For the case of a continuous random variable, \[ P(a < X < b) = F_X(b) - F_X(a) \,. \] As we can see, if we have the cdf, we do not need to perform integration to compute the probability…we just plug in coordinate values. (Note that the form of the inequality, i.e., whether we have \(<\) or \(\leq\), does not matter.) However, when we are dealing with a discrete random variable, we need to tread far more carefully, because the form of the inequality can matter. Let’s suppose we have a pmf with masses given at \(x = \{0,1\}\). Then, e.g., \[\begin{align*} P(0 \leq X \leq 1) &= \sum_{x \in [0,1]} p_X(x) = p_X(0) + p_X(1) = F_X(1) \\ P(0 < X \leq 1) &= \sum_{x \in (0,1]} p_X(x) = p_X(1) = F_X(1) - F_X(0) \\ P(0 < X < 1) &= \sum_{x \in (0,1)} p_X(x) = 0 \,. \end{align*}\]

Figure 1.16: An illustration of the relationship between a cdf and probability. The probability \(P(1 < X < 3)\) is given by the distance between the two red lines (i.e., \(F_X(3)-F_X(1)\)).

We will make two final points here about cdfs.

First, as indicated above, given a cdf, we can find the associated pmf/pdf. If a pmf has non-zero masses at values \(x - \Delta x\) and \(x\), and none in between, then \[ p_X(x) = F_X(x) - F_X(x-\Delta x) \,, \] while in the continuous case, \[ f_X(x) = \frac{d}{dx}F_X(x) \,, \] assuming \(F_X(x)\) is differentiable at \(x\).

Second, we can define an inverse cumulative distribution function, or inverse cdf. The inverse cdf takes as input the total probability collected to the left of \(x\) (e.g., the green region shown in the right panel of Figure 1.14) and returns the associated value of \(x\). In other words, if \(q = F_X(x)\), then \(x = F_X^{-1}(q)\).

One issue that arises with the inverse cdf is that if \(F_X(x)\) is not

strictly monotonically increasing

(i.e., if for some range of values, \(\frac{d}{dx}F_X(x) = 0\)) then

there is no unique inverse. For instance, see the left panel

of Figure 1.15: if we input \(F_X(x) = 0.35\), then \(x \in [2,3)\). We

can circumvent this issue by utilizing the generalized inverse cdf

instead, for which

\[

x = F_X^{-1}(q) = \mbox{inf}\{ x : F_X(x) \geq q \} \,.

\]

The symbol “inf” indicates that we are finding the infimum, or smallest value,

of the indicated set of values.

Here, the output \(x\) is the smallest value for which \(F_X(x) \geq q\) holds.

For our given example, \(x = 2\). On the other hand, if we pick a value of

\(F_X(x)\) that lies between the steps, we would choose the smallest \(x\) value

associated with the next higher step. For instance, if for our example we

want the inverse cdf for \(F_X(x) = 0.5\), which lies between the steps at

0.35 and 0.6, we would take the smallest value of \(x\) associated with

\(F_X(x) = 0.6\), which is \(x = 3\). (Note that R utilizes the generalized

form of the inverse cdf.)

1.9.1 The Cumulative Distribution Function for a Probability Density Function

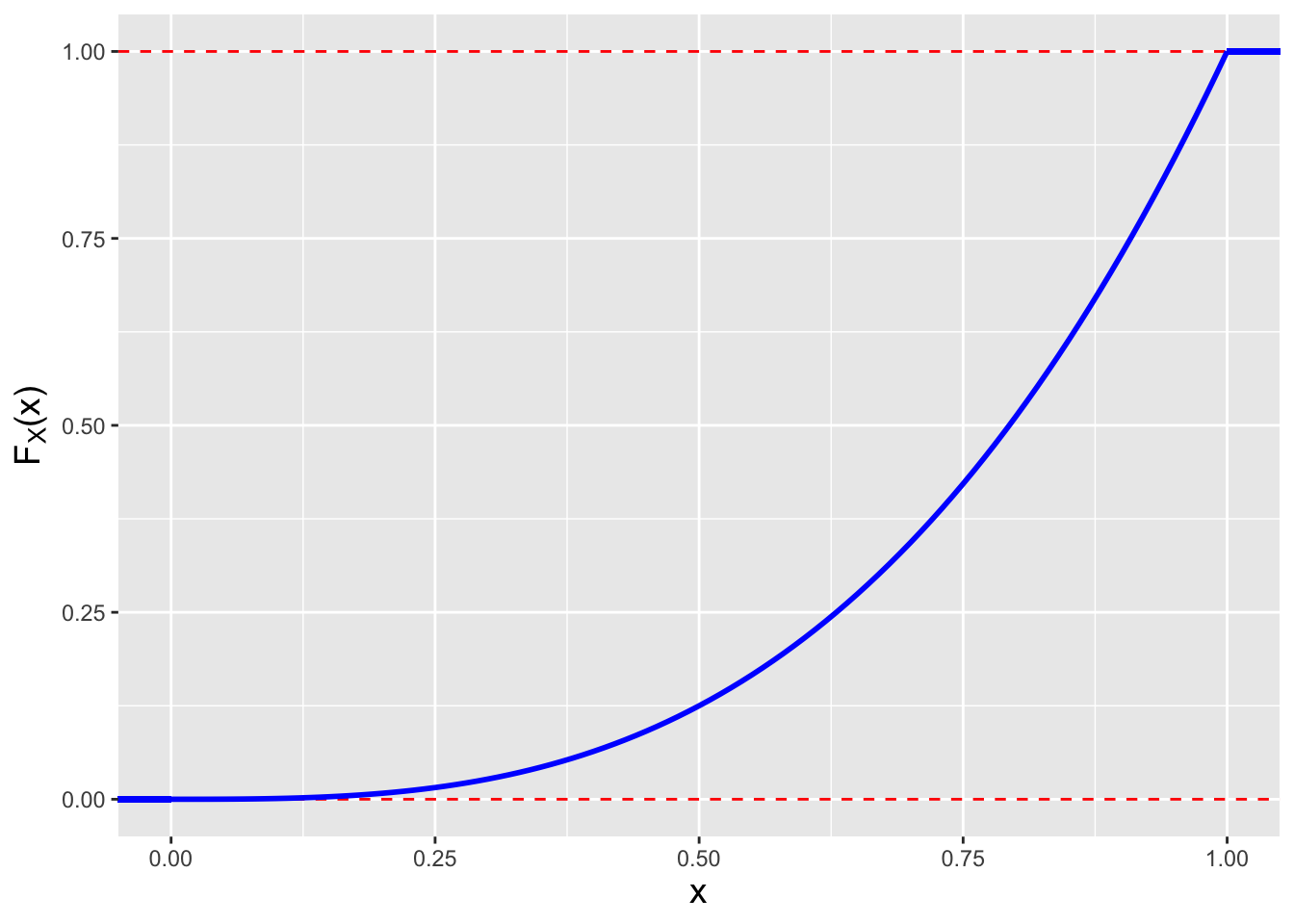

We work again with our simple parameterized pdf: \[ f_X(x \vert \theta) = \left\{ \begin{array}{cl} \theta x^{\theta-1} & 0 \leq x \leq 1 \\ 0 & \mbox{otherwise} \end{array} \right. \,. \] The cdf for this function is simply the integral of the pdf “to the left” of the coordinate \(x\): \[ F_X(x \vert \theta) = \int_0^x f_Y(y \vert \theta) dy \,. \] Because the upper bound of the integral is \(x\), we replace \(x\) in the integrand with a dummy variable. (Here, \(y\) was chosen arbitrarily.) Thus \[ F_X(x \vert \theta) = \int_0^x \theta y^{\theta-1} dy = \left. y^\theta \right|_0^x = x^\theta \,. \] We can answer a variety of questions given this cdf. For example…

- What is the median of this distribution?

The median \(\tilde{x}\) is the point on the real-number line where \[ P(X \leq \tilde{x}) = \frac{1}{2} \,. \] For our distribution, \[ \tilde{x}^\theta = \frac{1}{2} ~\Rightarrow~ \tilde{x} = \left( \frac{1}{2} \right)^{1/\theta} \,. \]

- Now let \(\theta = 3\). What is the probability of sampling a datum between \(x = 1/4\) and \(x = 3/4\)? \[ P\left(\frac{1}{4} \leq X \leq \frac{3}{4}\right) = F_X\left(\frac{3}{4} \vert \theta=3\right) - F_X\left(\frac{1}{4} \vert \theta=3\right) = \left(\frac{3}{4}\right)^3 - \left(\frac{1}{4}\right)^3 = \frac{26}{64} = \frac{13}{32} \,. \]

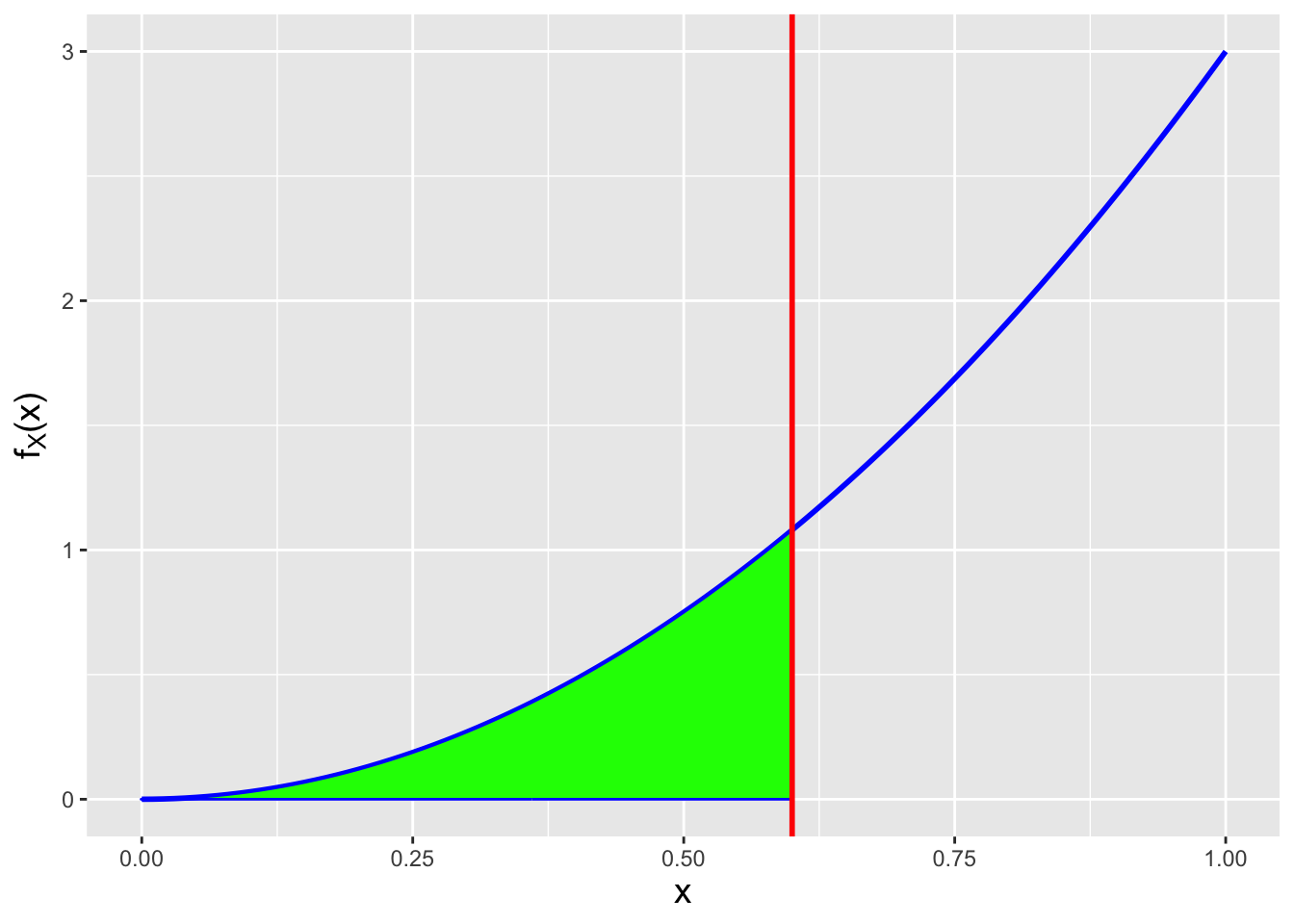

1.9.2 Visualizing the Cumulative Distribution Function in R

We continue with the pdf we use above, with \(\theta = 3\). To show the region being integrated over to compute a cdf value, for say \(x = 0.6\), we utilize

R’spolygon()function. (See Figure 1.17.)

x <- seq(0,1,by=0.01)

f.x <- 3*x^2

x.o <- 0.6

df <- data.frame(x=x,f.x=f.x)

df.shade <- subset(df,x<=x.o)

ggplot(data=df,aes(x=x,y=f.x)) +

geom_line(col="blue",lwd=1) +

geom_area(data = df.shade,aes(x,y=f.x),fill="green",col="blue",outline.type="full") +

geom_vline(xintercept=x.o,col="red",lwd=1) +

labs(y = expression(f[X]*"(x)")) +

base_theme

Figure 1.17: The cdf for \(f_X(x) = 3x^2\) at \(x = 0.6\) is the area represented in green.

What is happening in this code chunk? We first define a sequence of values for

x(viaseq()), then compute the pdf for eachxvalue (f.x). We then define a data frame withxandf.xas columns, and determine which rows correspond to values ofxthat are less than or equal to 0.6 (viasubset()). To create the polygon, we pass the subset data framedf.shadeto the functiongeom_area().

If we wish to visualize the full cdf, we can do the following. (See Figure 1.18.)

x <- seq(0,1,by=0.01)

F.x <- x^3

df <- data.frame(x=x,F.x=F.x)

ggplot(data=df,aes(x=x,y=F.x)) +

geom_hline(yintercept=0,lty=2,col="red") +

geom_hline(yintercept=1,lty=2,col="red") +

geom_line(col="blue",lwd=1) +

geom_segment(x=-1,xend=0,y=0,yend=0,col="blue",lwd=1) +

geom_segment(x=1,xend=2,y=1,yend=1,col="blue",lwd=1) +

labs(y = expression(F[X]*"(x)")) +

base_theme

Figure 1.18: The cdf for \(f_X(x) = 3x^2\).

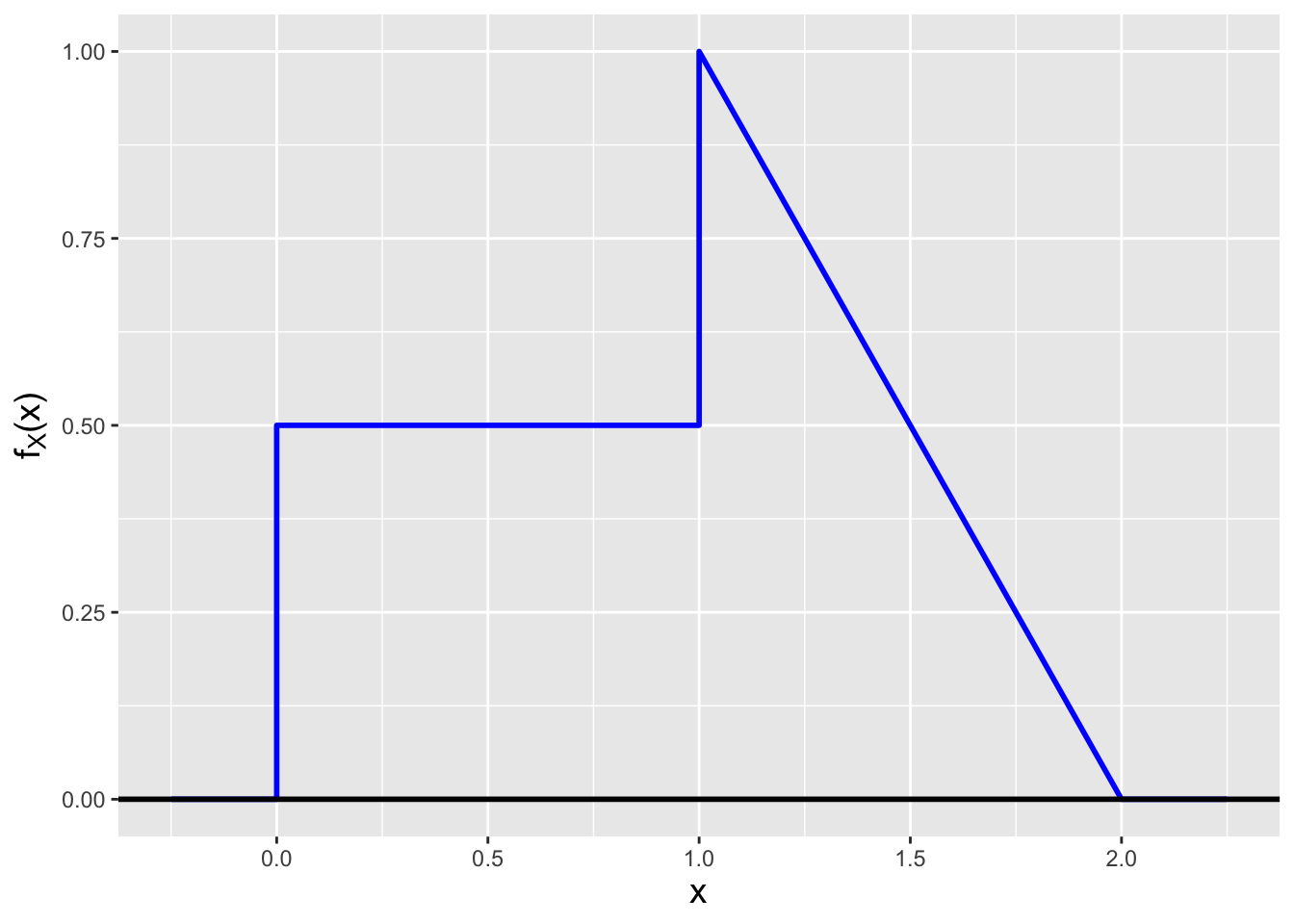

1.9.3 The CDF for a Mathematically Discontinuous Distribution

Assume that we are handed the following pdf: \[ f_X(x \vert \theta) = \left\{ \begin{array}{cl} 1/2 & 0 \leq x \leq 1 \\ 2-x & 1 \leq x \leq 2 \\ 0 & \mbox{otherwise} \end{array} \right. \,, \] which we display in Figure 1.19.

df <- data.frame(x=c(-0.25,0,0,1,1,2,2.25),f.x=c(0,0,0.5,0.5,1,0,0))

ggplot(data=df,aes(x=x,y=f.x)) +

geom_line(col="blue",lwd=1) +

geom_hline(yintercept=0,lwd=1) +

labs(y = expression(f[X]*"(x)")) +

base_theme

Figure 1.19: A continuous probability density function that is mathematically discontinuous at \(x=1\).

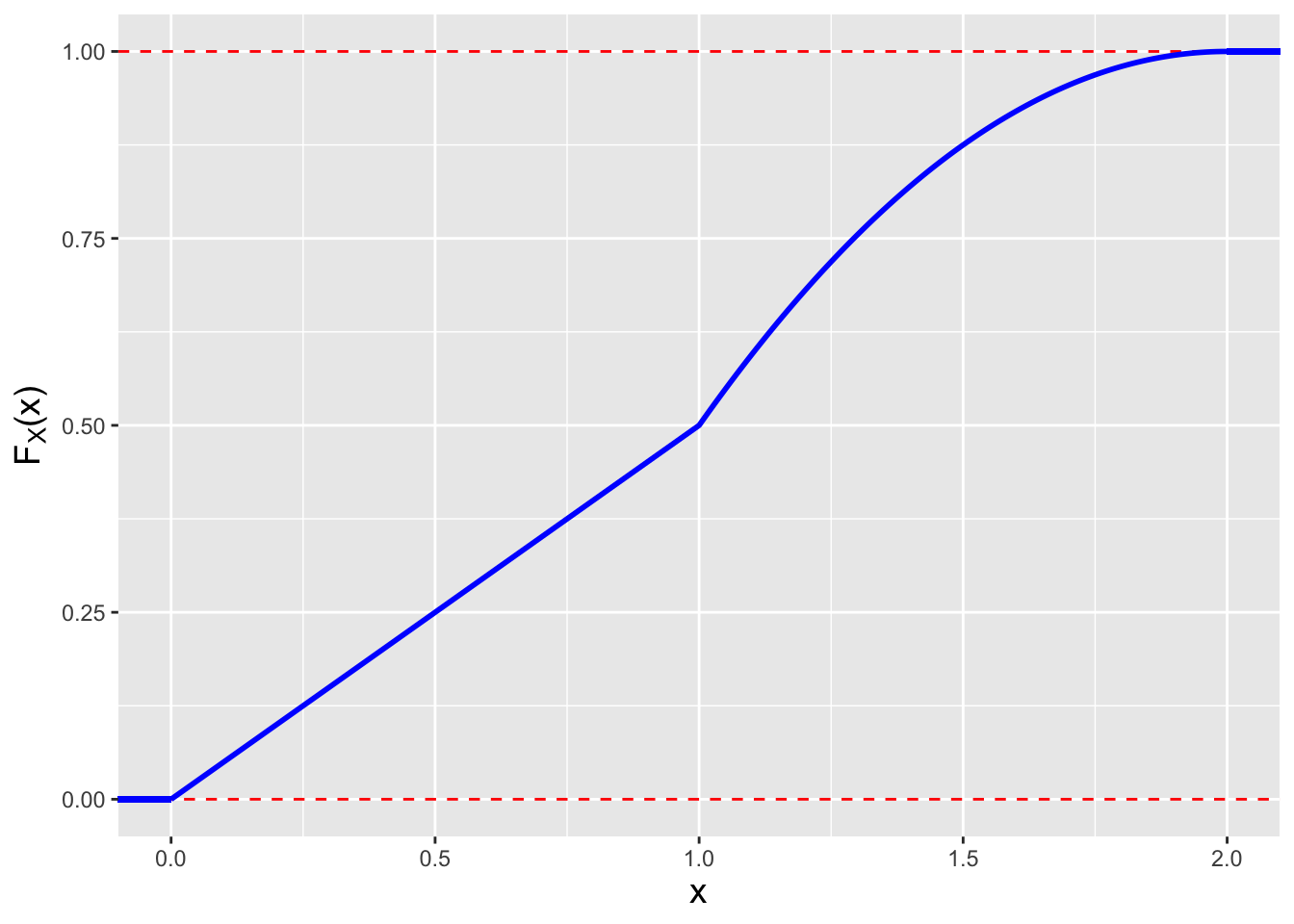

This is a completely valid, “continuous” pdf that has a mathematical discontinuity at \(x = 1\). What is the cdf for this function?

The key insight is that we should not try to evaluate the integral of \(f_X(x)\) from 0 to \(x\) when \(x > 1\) with a single integral…this will not work! We simply have to break the problem up so as to define the cdf over the domain [0,1), and then over the domain [1,2]. \[\begin{align*} F_X(x \vert x < 1) &= \int_0^x f_Y(y) dy = \frac{1}{2} \int_0^y dy = \frac{x}{2} \\ F_X(x \vert x \geq 1) &= \int_0^1 f_Y(y) dy + \int_1^x f_Y(y) dy = \left. \frac{y}{2} \right|_0^1 + \int_1^x (2-y) dy = \frac{1}{2} - \left. \frac{(2-y)^2}{2} \right|_1^x \\ &= \frac{1}{2} - \left( \frac{(2-x)^2}{2} - \frac{1}{2} \right) = 1 - \frac{(2-x)^2}{2} \,. \end{align*}\] (Not sure if this is right? We can at the very least do sanity checking, as we know \(F_X(1) = 1/2\) and \(F_X(2) = 1\)…and our formula produces these results! Alternatively, we can take the derivative of \(F_X(x)\) and see if it matches \(f_X(x)\).) We display the cdf in Figure 1.20.

x.seq <- seq(1.01,2,by=0.01)

x <- c(0,1,x.seq)

F.x <- c(0,0.5,1-(2-x.seq)^2/2)

df <- data.frame(x=x,F.x=F.x)

ggplot(data=df,aes(x=x,y=F.x)) +

geom_hline(yintercept=0,lty=2,col="red") +

geom_hline(yintercept=1,lty=2,col="red") +

geom_line(col="blue",lwd=1) +

geom_segment(x=-1,xend=0,y=0,yend=0,col="blue",lwd=1) +

geom_segment(x=2,xend=3,y=1,yend=1,col="blue",lwd=1) +

labs(y = expression(F[X]*"(x)")) +

base_theme

Figure 1.20: The cdf for our mathematically discontinuous pdf.

1.10 The Law of Total Probability

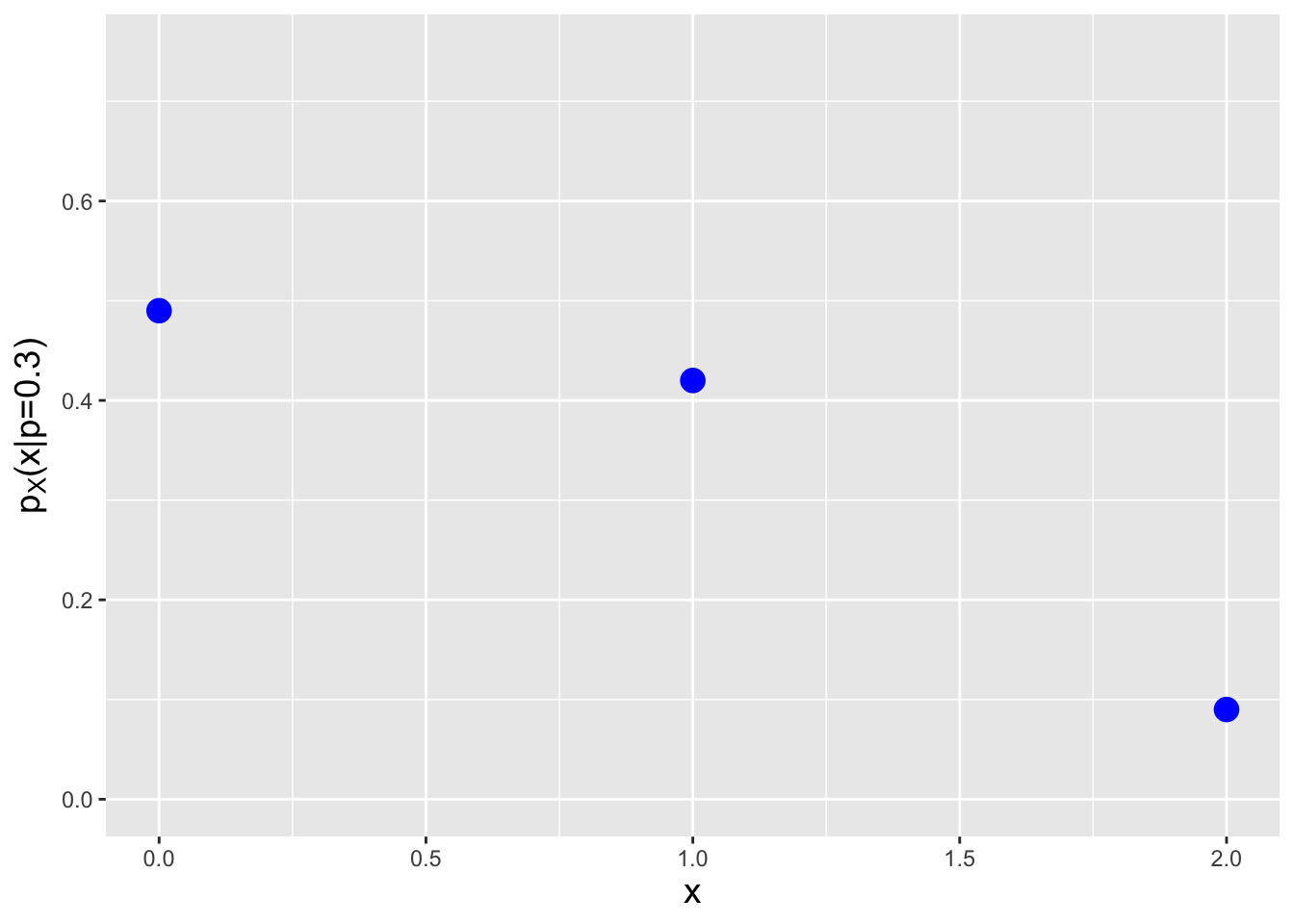

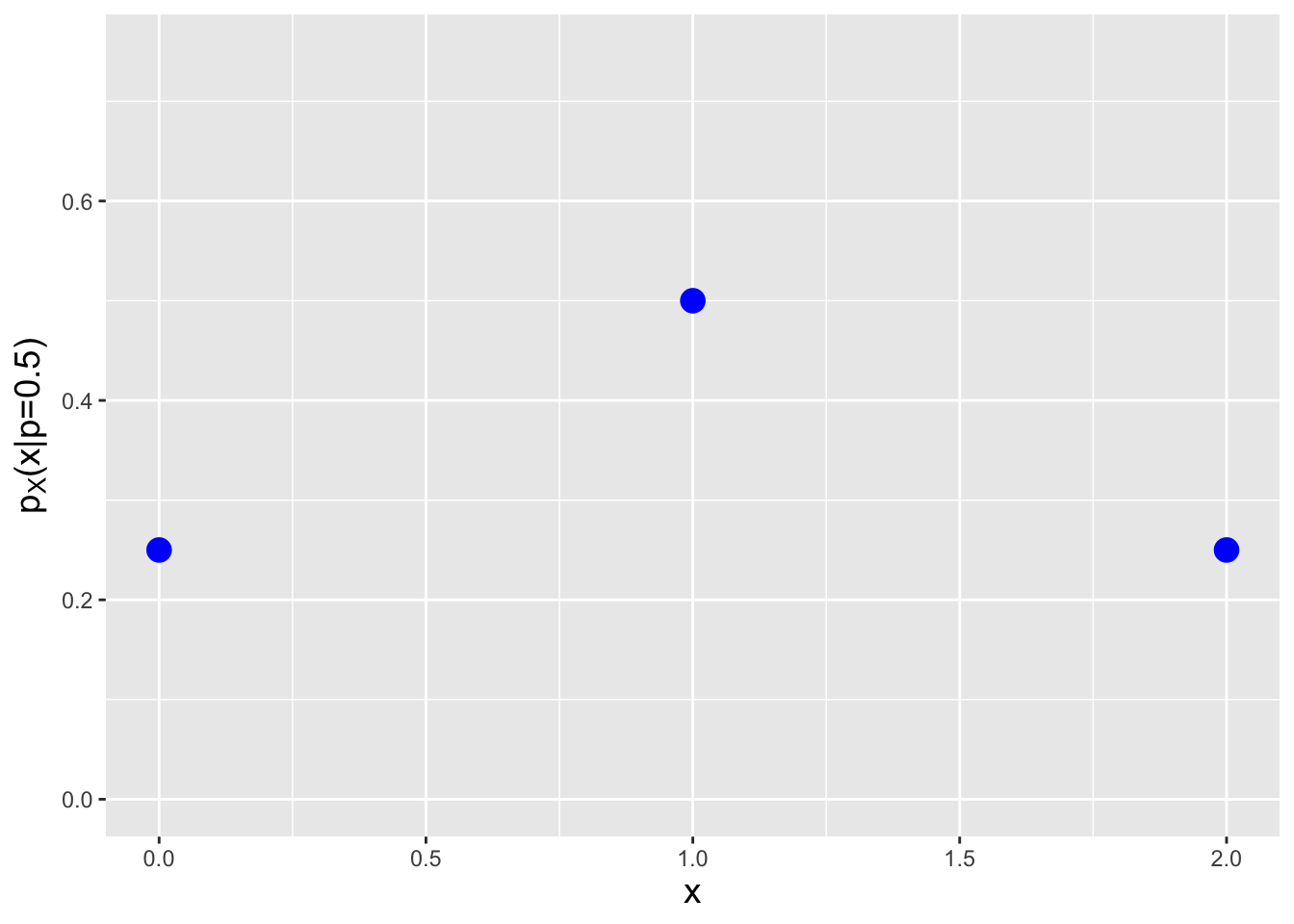

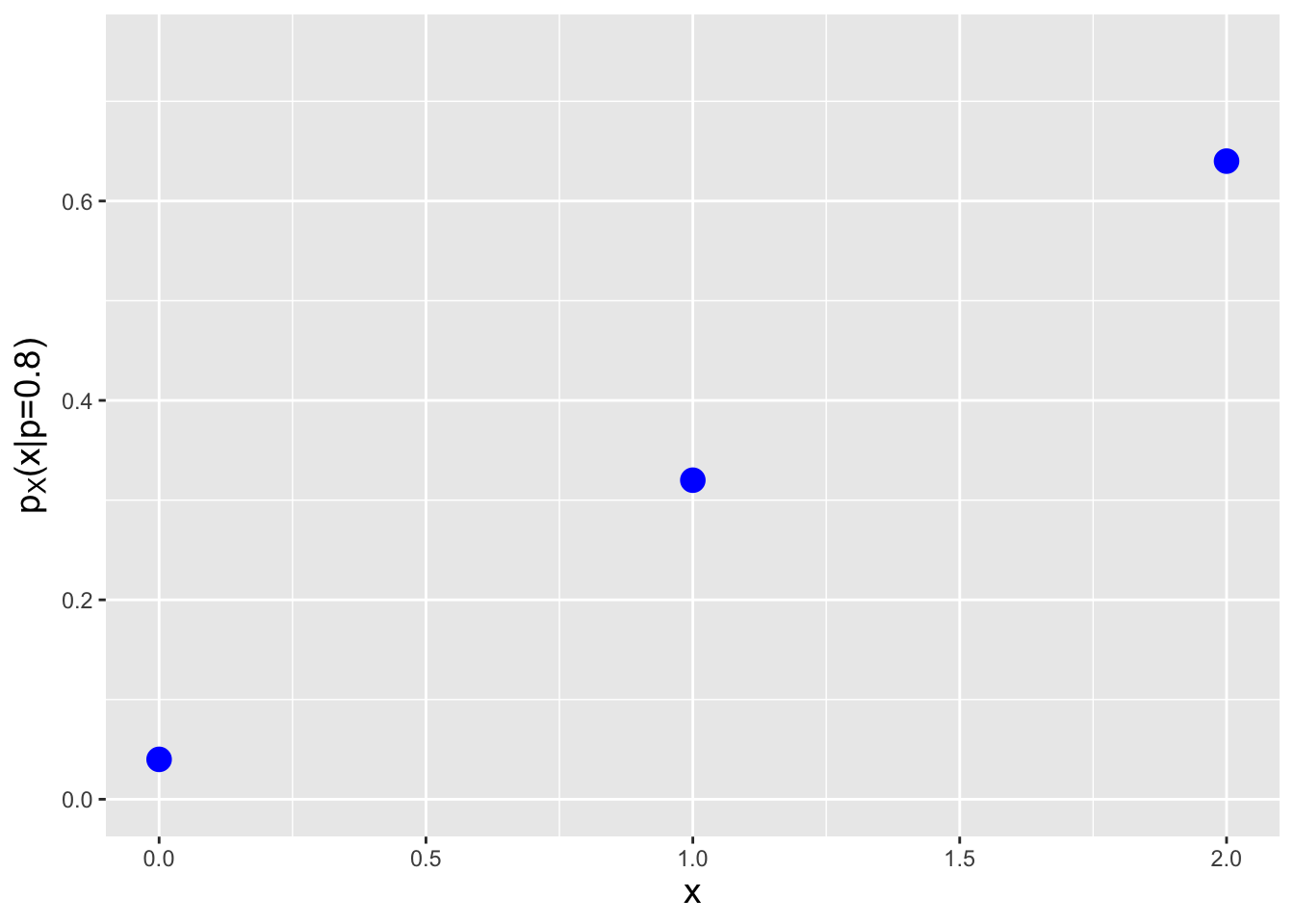

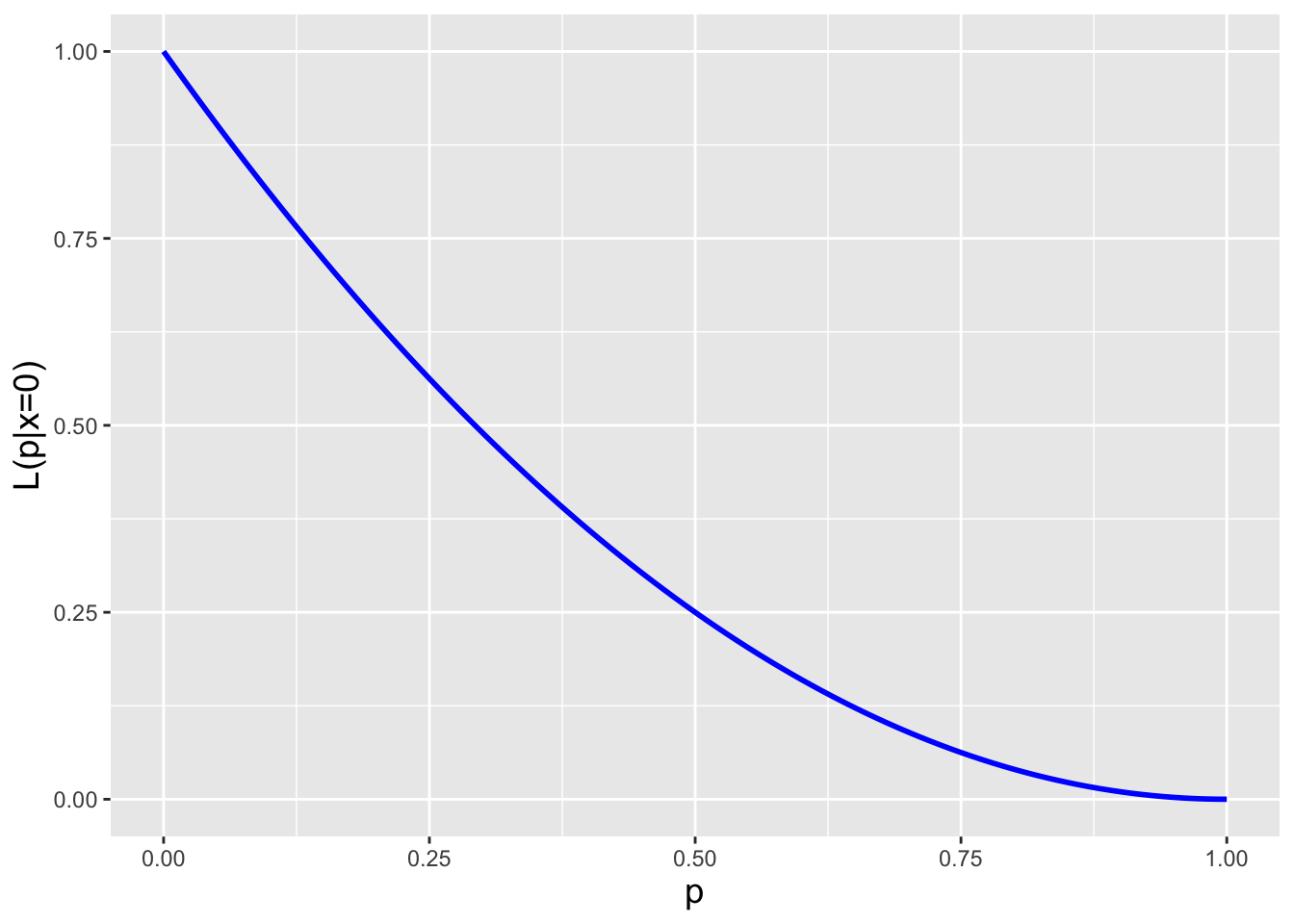

One of the laws of probability that we introduce earlier in this chapter is the Law of Total Probability, or LoTP: if we partition a sample space \(\Omega\) into \(k\) disjoint events \(\{B_1,\ldots,B_k\}\), then for any event \(A\) we can write \[ P(A) = \sum_{i=1}^k P(A \vert B_i) P(B_i) \,. \] We are in a position now, having introduced random variables and probability distributions, to update how we think of this law: it can express the probability of a random variable \(X\) when it is sampled from a discrete distribution with parameter \(\theta\)…and when \(\theta\) itself is not a fixed constant (as it has been up until now), but is itself a discrete random variable. To see this, let’s rewrite the LoTP given this description: \[ p_X(x) = \sum_\theta p_{X \vert \theta}(x \vert \theta) p_{\Theta}(\theta) \,. \] This equation is saying that the probability mass associated with the coordinate \(x\) is the value of the mass for \(x\), given the value \(\theta\), weighted by the probability that we would even observe the value \(\theta\) in the first place. Or, that \(p_X(x)\) is a weighted average of the values of the conditional distribution \(p_{X \vert \theta}(x \vert \theta)\), where the weights are given by \(p_{\Theta}(\theta)\).

What if \(\theta\) is actually a continuous random variable? We can extend the LoTP to handle that possibility by replacing the summation over a discrete random variable with an integral over a continuous one: \[ p_X(x) = \int_\theta p_{X \vert \theta}(x \vert \theta) f_{\Theta}(\theta) d\theta \,. \] And what if the distribution of \(X \vert \theta\) is continuous? We would just replace the \(p_X\) and the \(p_{X \vert \theta}\) in the equations above with \(f_X\) and \(f_{X \vert \theta}\), i.e., we would use the LoTP to define a probability density instead of a probability mass.

1.10.1 The LoTP With Two Simple Discrete Distributions

Let’s suppose we have two random variables \(X\) and \(Y\), where the probability mass function for \(Y\) is

| \(y\) | \(p_Y(y)\) |

|---|---|

| 0 | 2/3 |

| 1 | 1/3 |

and where, if \(Y = 0\), the pmf for \(X\) is

| \(x \vert y=0\) | \(p_{X \vert Y}(x \vert y=0)\) |

|---|---|

| 0 | 4/5 |

| 1 | 1/5 |

and if \(Y = 1\) the pmf for \(X\) is

| \(x \vert y=1\) | \(p_{X \vert Y}(x \vert y=1)\) |

|---|---|

| 0 | 3/5 |

| 1 | 2/5 |

What is the pmf \(p_X(x)\)?

The Law of Total Probability tells us that \[ p_X(x) = \sum_y p_{X \vert Y}(x \vert y) p_{Y}(y) \,, \] so \[\begin{align*} p_X(0) &= p_{X \vert Y}(0 \vert 0) p_{Y}(0) + p_{X \vert Y}(0 \vert 1) p_{Y}(1) = \frac{4}{5} \cdot \frac{2}{3} + \frac{3}{5} \cdot \frac{1}{3} = \frac{11}{15} \\ p_X(1) &= p_{X \vert Y}(1 \vert 0) p_{Y}(0) + p_{X \vert Y}(1 \vert 1) p_{Y}(1) = \frac{1}{5} \cdot \frac{2}{3} + \frac{2}{5} \cdot \frac{1}{3} = \frac{4}{15} \,. \end{align*}\] The pmf is thus

| \(x\) | \(p_X(x)\) |

|---|---|

| 0 | 11/15 |

| 1 | 4/15 |

The masses sum to 1, so indeed this is a proper pmf.

1.10.2 The Law of Total Expectation

If we inspect the tables above, we see that, e.g., \[\begin{align*} E[X \vert Y=0] &= 0 \cdot \frac{4}{5} + 1 \cdot \frac{1}{5} = \frac{1}{5} \,. \end{align*}\] A similar calculation yields \(E[X \vert Y=1] = 2/5\). What then is the expected value of \(X\) itself?

A result related to the Law of Total Probability is the Law of Total Expectation (LoTE), which states that when \(Y\) is finite and countable, \[ E[X] = E[E[X \vert Y]] = \sum_y E[X \vert Y=y] ~ p_Y(y) \,, \] i.e., the overall expected value is a weighted average of the individual values \(E[X \vert Y=y]\). Here, the LoTE yields \[ E[X] = \frac{1}{5} \cdot \frac{2}{3} + \frac{2}{5} \cdot \frac{1}{3} = \frac{4}{15} \,. \]

1.10.3 The LoTP With Two Continuous Distributions

Let’s suppose that we have two random variables, \(X\) and \(\theta\), such that \[\begin{align*} f_{X \vert \Theta}(x \vert \theta) &= \theta \exp(-\theta x) \\ f_{\Theta}(\theta) &= \exp(-\theta) \,, \end{align*}\] for \(x \in [0,\infty)\) and \(\theta > 0\). What is \(f_X(x)\)?

As mentioned above, the primary change to the LoTP would be that we use integrate over all possible values of \(\theta\), rather than sum, so the LoTP looks like this: \[ f_X(x) = \int_0^\infty f_{X \vert \Theta}(x \vert \theta) f_{\Theta}(\theta) d\theta \,. \] Now that we’ve established this equation, the rest is math…except as we’ll see, we need to use integration by parts. \[\begin{align*} f_X(x) &= \int_0^\infty \theta \exp(-\theta x) \exp(-\theta) d\theta \\ &= \int_0^\infty \theta \exp(-\theta (x+1)) d\theta \,. \end{align*}\] We set up the integration as follows: \[\begin{align*} u = \theta ~~~ & ~~~ dv = \exp(-\theta (x+1)) d\theta \\ du = d\theta ~~~ & ~~~ v = -\frac{1}{x+1}\exp(-\theta (x+1)) \,. \end{align*}\] Then \[\begin{align*} f_X(x) &= \left.(u v)\right|_0^\infty - \int_0^\infty v du \\ &= -\left.\frac{\theta}{x+1}\exp(-\theta (x+1))\right|_0^\infty + \int_0^\infty \frac{1}{x+1}\exp(-\theta (x+1)) d\theta \\ &= 0 + \int_0^\infty \frac{1}{x+1}\exp(-\theta (x+1)) d\theta \,. \end{align*}\] (We will stop here momentarily to remind the reader that when we evaluate an expression of the form \(x e^{-x}\), the result as \(x \rightarrow \infty\) is zero because \(e^{-x} \rightarrow 0\) faster than \(x \rightarrow \infty\). We now carry on…) \[\begin{align*} f_X(x) &= \int_0^\infty \frac{1}{x+1}\exp(-\theta (x+1)) d\theta \\ &= \left. -\frac{1}{(x+1)^2} \exp(-\theta (x+1)) \right|_0^\infty \\ &= \frac{1}{(x+1)^2} \,, \end{align*}\] for \(x \in [0,\infty)\). Done. We will leave it as an exercise to the reader to confirm that \(f_X(x)\) is a valid pdf that integrates to one.

Above, we say that “we need to use integration by parts.” This is not quite true. A handy result that we will utilize as the book goes on is that \[ \Gamma(t) = \int_0^\infty u^{t-1} \exp(-u) du \,. \] This is the gamma function. (The symbol \(\Gamma\) represents a capital gamma.) One of the properties that makes this function useful is that when \(x\) is a non-negative integer, the gamma function is related to the factorial function: \(\Gamma(x) = (x-1)! = (x-1) (x-2) \cdots 1\). But the reason why the gamma function is useful here is that we can use it to avoid integration by parts.

Our integral is \[ f_X(x) = \int_0^\infty \frac{1}{x+1}\exp(-\theta (x+1)) d\theta \,. \] To solve this, we implement variable substitution. The three steps of variable substitution are

- to write down a viable substitution \(u = g(\theta)\);

- to then derive \(du = h(u,\theta) d\theta\); and finally

- to use \(u = g(\theta)\) to transform the bounds of the integral.

For our integral \[ (1) ~~ u = (x+1)\theta ~~ \implies ~~ (2) ~~ du = (x+1)d\theta \] and \[ (3) ~~ \theta = 0 ~\implies~ u = 0 ~~~ \mbox{and} ~~~ \theta = \infty ~\implies~ u = \infty \,, \] We see from point (3) that making the variable substitution will not affect the bounds of the integral. Thus we have that \[\begin{align*} f_X(x) &= \int_0^\infty \frac{1}{x+1}\exp(-u) \frac{du}{x+1} \\ f_X(x) &= \frac{1}{(x+1)^2} \int_0^\infty u^0 \exp(-u) du \\ f_X(x) &= \frac{1}{(x+1)^2} \Gamma(1) = \frac{1}{(x+1)^2} 0! = \frac{1}{(x+1)^2} \,. \end{align*}\] (Here, we utilize the fact that zero factorial is one.)

1.11 Data Sampling

One of the primary uses of R is to perform simulations in which

we repeatedly create mock datasets and analyze them. A natural first question

would then be: how do we create such datasets?

Below, we will describe two methods for randomly sampling data given

a probability distribution. The first, rejection sampling, is appropriate

to use when we cannot work with the cumulative distribution function of the

assumed distribution analytically (i.e., with pencil and paper).

As we will see, rejection sampling

is (relatively) computationally inefficient, but it does have the benefit

that we can apply it in just about any sampling situation. The second

method, inverse transform sampling, is efficient and should always be

our first choice when the cdf is tractable. To head off a question the

reader may have: no, we do not always have to hand-code samplers when working

in R…for commonly used distributions, R supplies “wrapper functions”

that effectively abstract away the details of inverse transform sampling.

However, knowing how to code a sampler is a good skill to have!

Let’s suppose we are working with one of the pdfs that we define above: \[ f_X(x) = \left\{ \begin{array}{cl} \frac{1}{\pi} x \sin x & 0 \leq x \leq \pi \\ 0 & \mbox{otherwise} \end{array} \right. \,, \] The cdf for this distribution is \[ F_X(x) = \frac{1}{\pi}\left( \sin x - x \cos x \right) \,, \] which is not easily inverted. Thus to sample data from this distribution, we utilize the following algorithmic steps.

- Determine the range of values over which we will sample data values: \([x_{lo},x_{hi}]\). Nominally this will be the domain of the distribution, but sometimes that’s not viable, such as when the domain is semi- or fully infinite. (Here, the range is easily specified: \([0,\pi]\).)

- Within \([x_{lo},x_{hi}]\), determine the maximum value of \(f_X(x)\).

(For our assumed distributione, this is not necessarily a simple

calculation, as the derivative of \(f_X(x)\) is \((\sin x + x \cos x)/\pi\).

We can solve for the root using, e.g.,

R’suniroot()function:

## [1] 2.028758This looks for the root of the given function within the stated interval;

since there is a root at 0, corresponding to a functional minimum, we

exclude that point by setting the interval lower bound to 0.01. uniroot()

is an extremely useful function and we will see it again throughout the

rest of this book. The root is \(x_{max} = 2.0288\) and

\(f_X(x_{max}) = 0.5792\).)

3. We repeat the following steps until we reach our target

sample size \(n\): (a) sample

a random number \(u\) assuming uniform weighting between \(x_{lo}\) and \(x_{hi}\);

(b) sample another random number \(v\) assuming uniform weighting

between 0 and \(f_X(x_{max})\);

and (c) keep \(u\) as part of our sample if \(v \leq f_X(u)\).

(a) and (b) are summed up by the statement “draw a rectangle whose vertices

are \((x_{lo},0)\), \((x_{hi},0)\), \(x_{hi},f_X(x_{max}))\), and

\(x_{lo},f_X(x_{max}))\) and pick a random point inside the rectangle,”

while (c) is summed up by saying “keep the random point if it lies below

\(f_X(x)\).”

Note that we will

assume that at the very least, we can use an R wrapper function to sample

a numbers with uniform weighting; without this assumption, we would have

to wade into the quagmire that is random number generation, which is

well beyond the scope of this book!

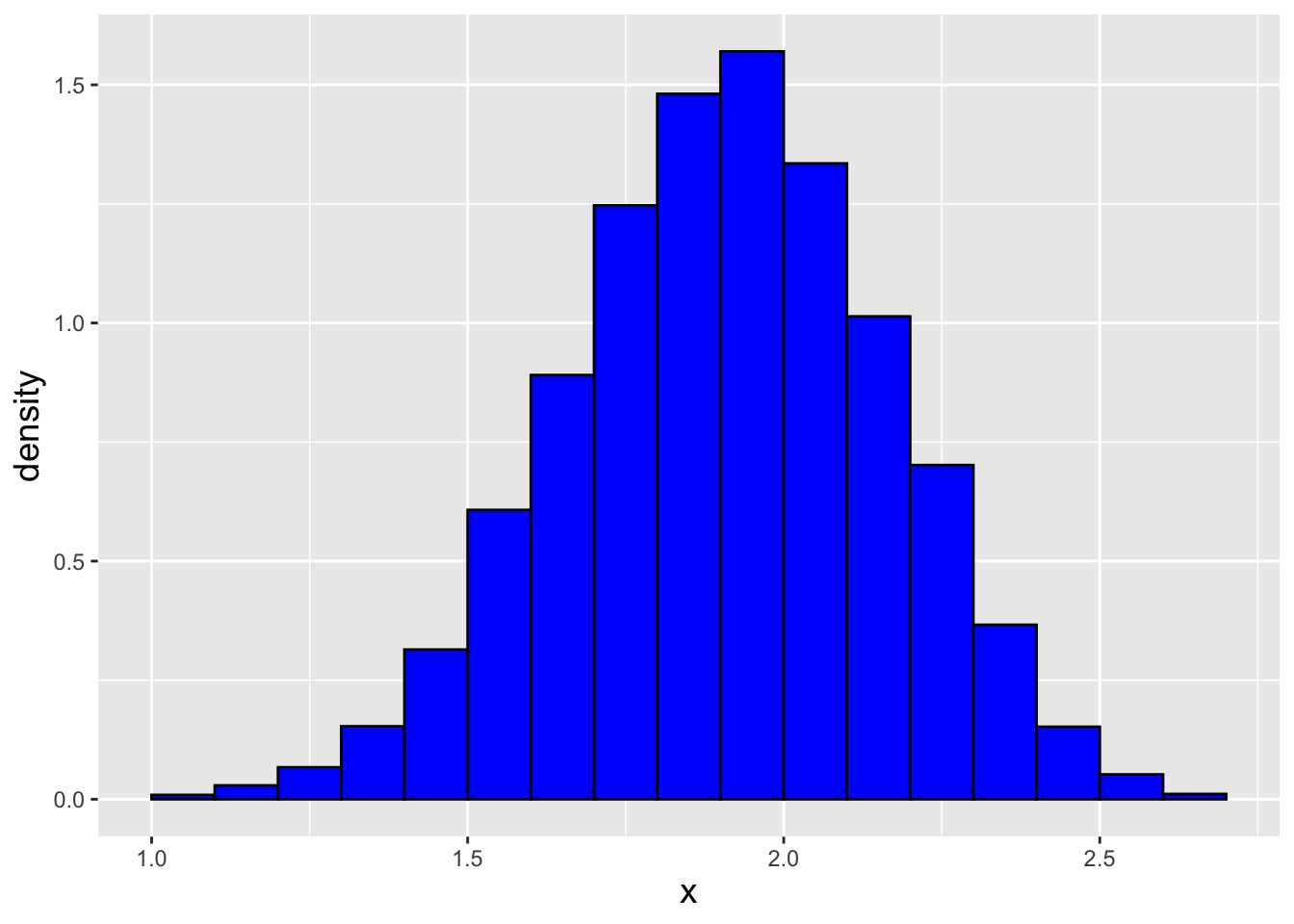

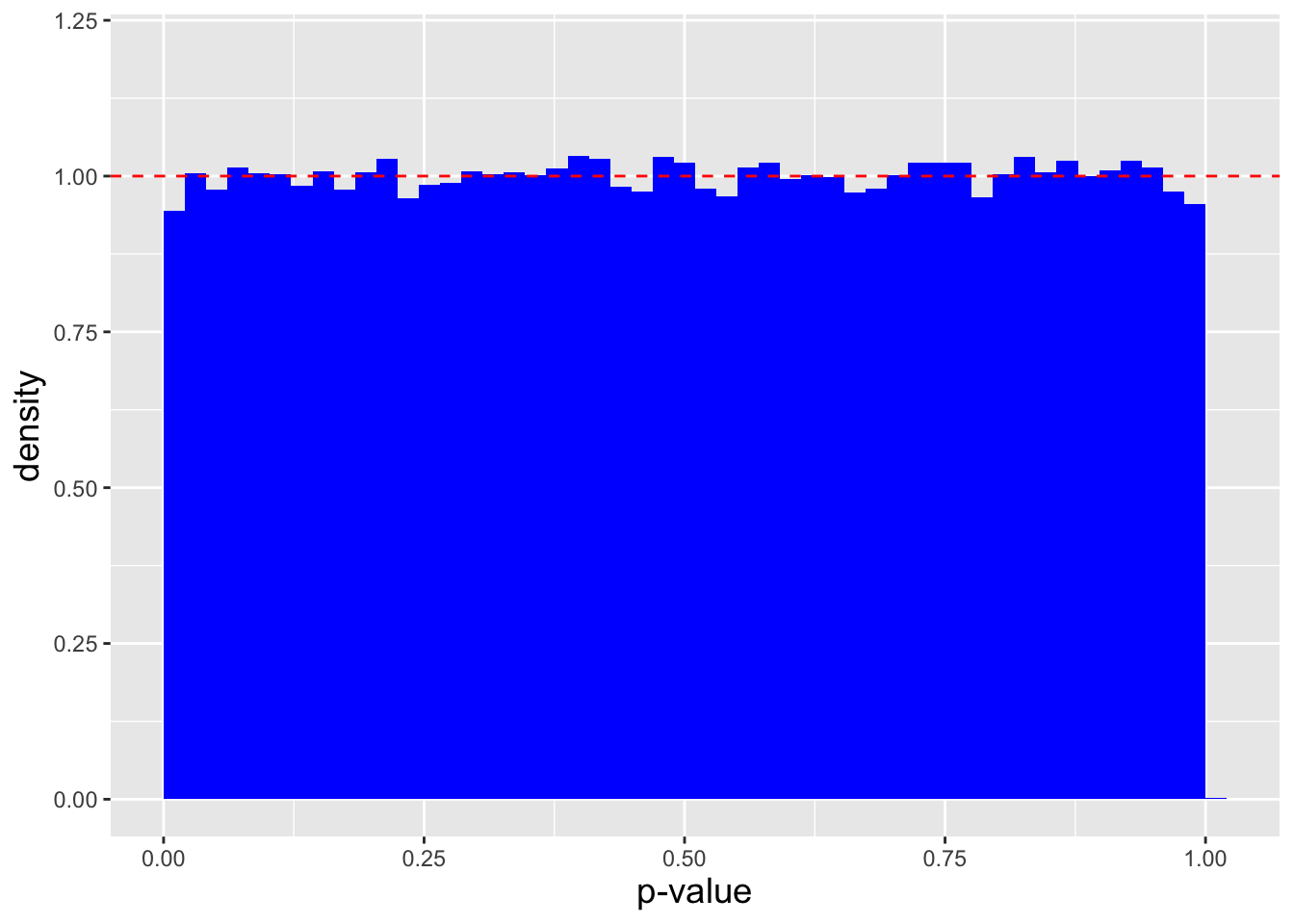

In a code chunk and in Figure 1.21 we show how we sample \(n = 1000\) data sampled from our distribution, and the final result. (We dub the observed distribution the empirical distribution of the data, where “empirical” simply means “what we actually observe.”) Rejection sampling seems quick and easy…should we always use it when we are not already provided a sampling function for our pmf or pdf? No, not necessarily, because as noted above it is computationally inefficient: we might have to sample \(m \gg n\) points in order to populate a sample of size \(n\).

(We will also note here that this is the first time that we are running

across the R function set.seed(). This initializes the underlying random

number generator such that we generate the same numerical results every time

we run the subsequent code…which is useful when doing analyses that

we want to be reproducible.

If we leave out set.seed(), then every time we run the subsequent code, we

get a different data sample. The number that we pass to set.seed()

can be anything…we adopt 101 here, but it can any real number.)

set.seed(101)

n <- 1000

x.lo <- 0

x.hi <- pi

f.x.hi <- 0.58 # rounding up is OK, it just decreases algorithm efficiency

X.sample <- rep(NA,n) # rejection sampling

ii <- 0

while ( ii < n ) {

u <- runif(1,min=x.lo,max=x.hi)

v <- runif(1,min=0,max=f.x.hi)

if ( v < u*sin(u)/pi ) {

ii <- ii+1

X.sample[ii] <- u

}

}

empirical.dist <- data.frame(X.sample=X.sample)

x <- seq(0,pi,by=0.01)

f.x <- x*sin(x)/pi

true.dist <- data.frame(x=x,f.x=f.x)

ggplot(data=empirical.dist,aes(x=X.sample)) +

geom_histogram(aes(y=after_stat(density)),col="black",fill="blue",

breaks=seq(0,3.2,by=0.2)) +

geom_line(data=true.dist,aes(x=x,y=f.x),col="red",lwd=1) +

labs(x="x") +

base_theme

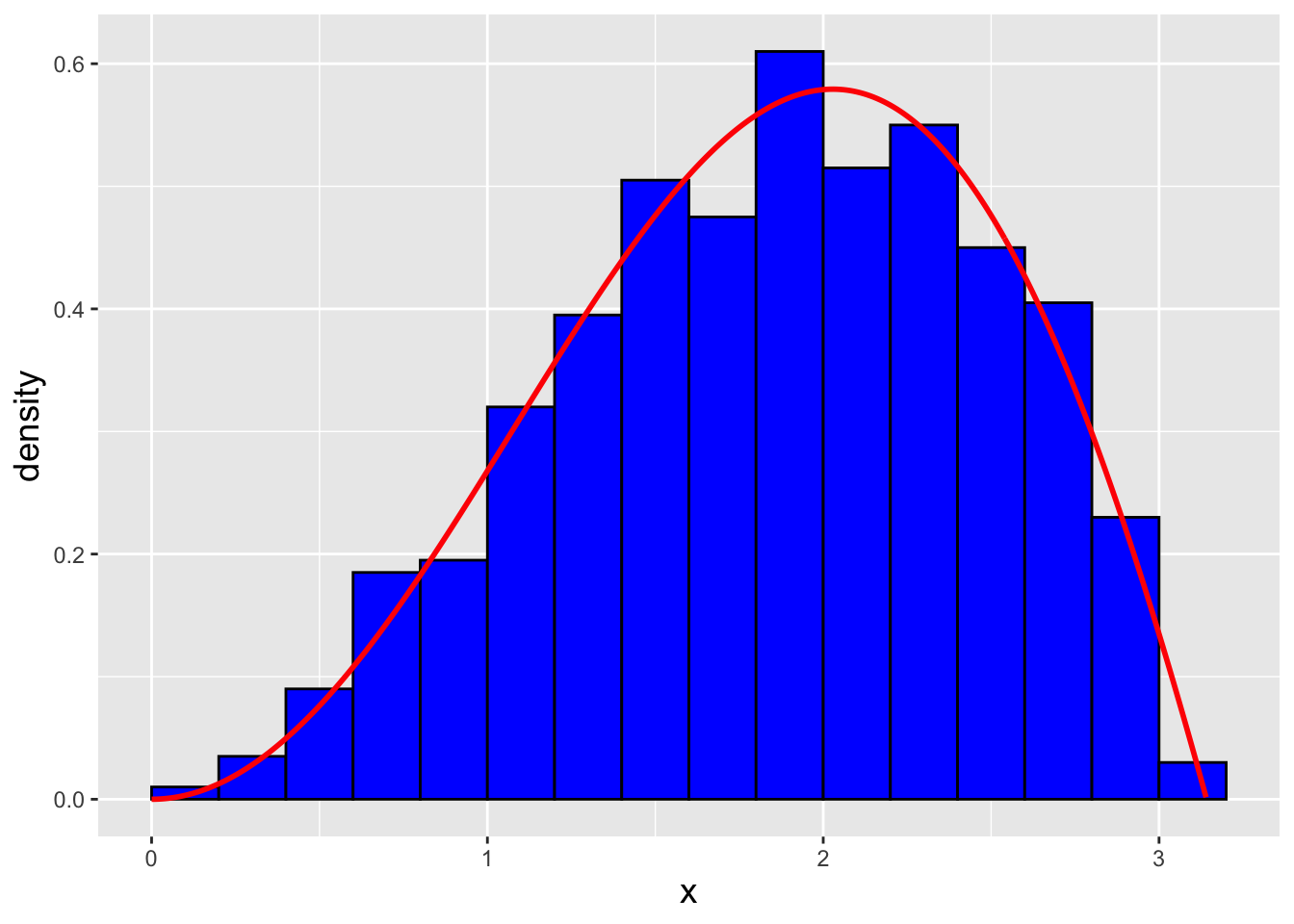

Figure 1.21: \(n = 1000\) data sampled from the distribution \(f_X(x) = (x \sin x)/\pi\) via the rejection sampling algorithm. We observe that our empirical distribution follows the true distribution well.

A primary alternative to rejection sampling is inverse transform sampling, in which we utilize the inverse cdf function to generate appropriately distributed data. Inverse transform sampling is efficient in that every proposal point is kept.

Let’s suppose we are working with the pdf: \[ f_X(x) = \theta x^{\theta-1} \,, \] where \(\theta > 0\) and \(x \in [0,1]\). The inverse cdf, as derived in an example above, is \(F_X^{-1}(q) = q^{1/\theta}\). Inverse transform sampling utilizes the following algorithmic steps.

- Pick the target sample size \(n\).

- Sample \(n\) data with uniform weighting between 0 and 1. These are the cdf bounds. Call these data \(q\).

- Transform the data \(q\) to be \(x = F_X^{-1}(q)\).

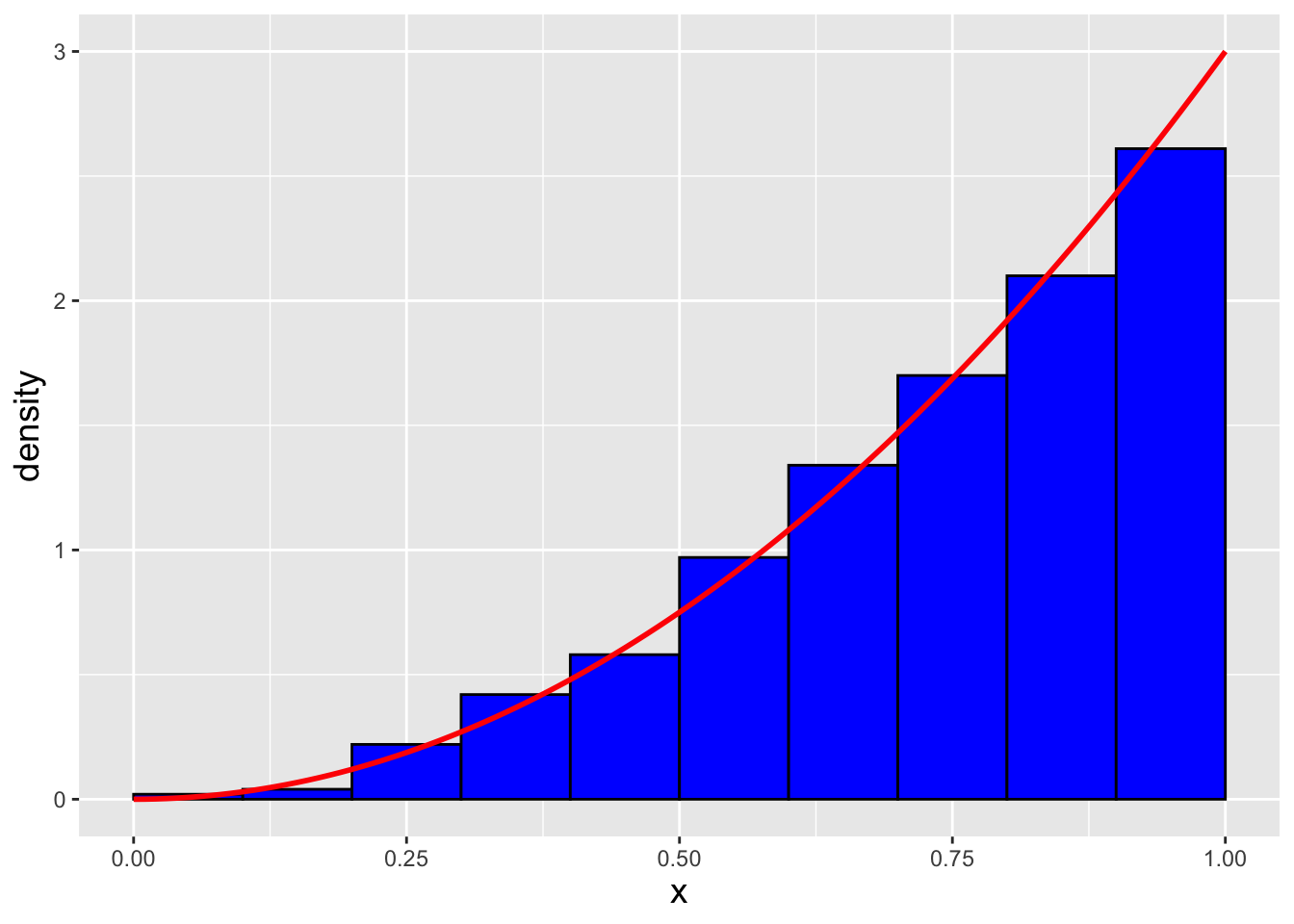

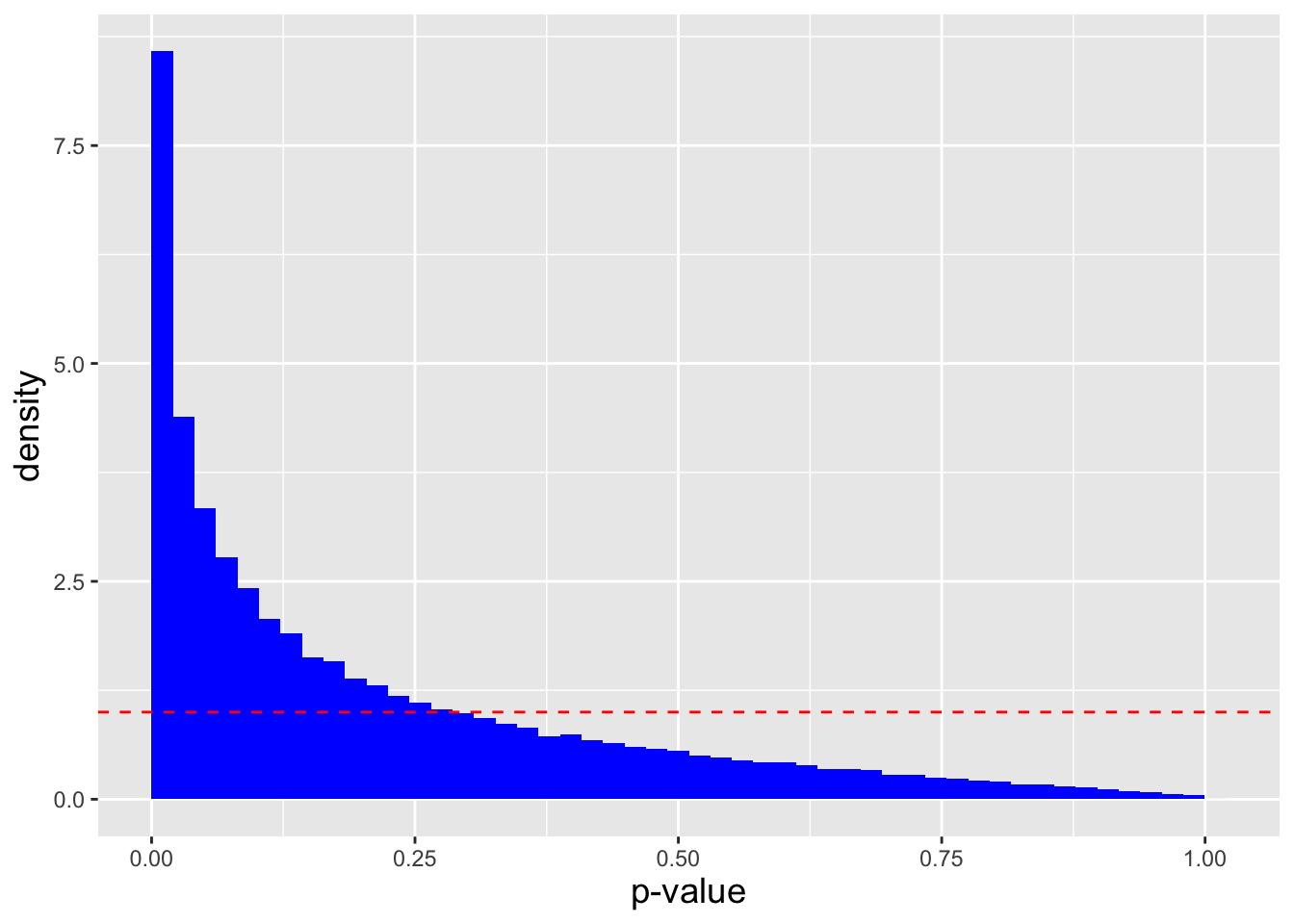

In a code chunk and in Figure 1.22 we display our inverse-transform sampling code as well as the empirical distribution of \(n = 1000\) data sampled from our distribution (assuming \(\theta = 3\)). We note that the code to generate our sample is much simpler than the code needed to perform rejection sampling!

set.seed(101)

theta <- 3

n <- 1000

q <- runif(n,min=0,max=1)

X.sample <- q^(1/theta)

empirical.dist <- data.frame(X.sample=X.sample)

x <- seq(0,1,by=0.01)

f.x <- theta*x^(theta-1)

true.dist <- data.frame(x=x,f.x=f.x)

ggplot(data=empirical.dist,aes(x=X.sample)) +

geom_histogram(aes(y=after_stat(density)),col="black",fill="blue",

breaks=seq(0,1,by=0.1)) +

geom_line(data=true.dist,aes(x=x,y=f.x),col="red",lwd=1) +

labs(x="x") +

base_theme

Figure 1.22: \(n = 1000\) data sampled from the distribution \(f_X(x) = 3x^2\) via the inverse transform sampling algorithm. We observe that our empirical distribution follows the true distribution well.

1.11.1 More Inverse-Transform Sampling

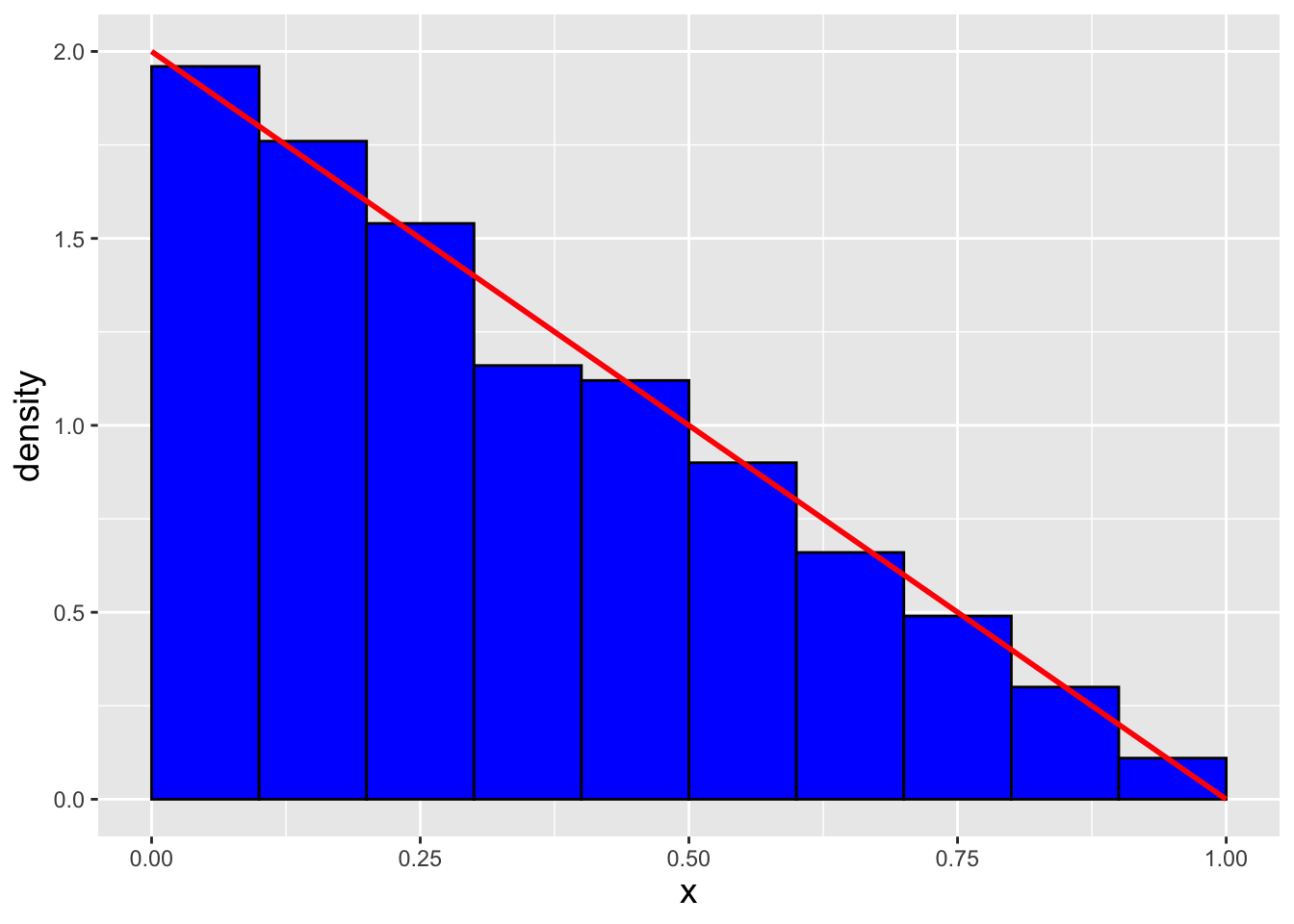

Let’s suppose that we are to sample \(n\) data from the following distribution: \[ f_X(x) = 2(1-x) ~~~ x \in [0,1] \,. \] Can we do this via inverse-transform sampling? The answer is yes, if (a) we can derive the cdf \(F_X(x)\), and (b) we can invert it. Here, \[ F_X(x) = \int_0^x f_V(v) dv = \int_0^x 2(1-v) dv = \left. -(1-v)^2 \right|_0^x = -(1-x)^2 - (-1) = 1 - (1-x)^2 \,. \] To invert the cdf, we set it equal to \(q\) and solve for \(x\): \[\begin{align*} q &= 1 - (1-x)^2 \\ \Rightarrow ~~~ 1 - q &= (1-x)^2 \\ \Rightarrow ~~~ \sqrt{1 - q} &= 1-x \\ \Rightarrow ~~~ x &= 1 - \sqrt{1 - q} \., \end{align*}\] To check for the correctness of our inversion, we utilize a code like the one in the main body of the section above and compare our sampled data against the pdf. See Figure 1.23.

set.seed(101)

n <- 1000

q <- runif(n,min=0,max=1)

X.sample <- 1 - sqrt(1-q)

empirical.dist <- data.frame(X.sample=X.sample)

x <- seq(0,1,by=0.01)

f.x <- 2*(1-x)

true.dist <- data.frame(x=x,f.x=f.x)

ggplot(data=empirical.dist,aes(x=X.sample)) +

geom_histogram(aes(y=after_stat(density)),col="black",fill="blue",

breaks=seq(0,1,by=0.1)) +

geom_line(data=true.dist,aes(x=x,y=f.x),col="red",lwd=1) +

labs(x="x") +

base_theme

Figure 1.23: \(n = 1000\) data sampled from the distribution \(f_X(x) = 2(1-x)\) via the inverse transform sampling algorithm. We observe that our empirical distribution follows the true distribution well.

1.12 Statistics and Sampling Distributions

Let’s say that we run an experiment in which we randomly sample many data from some distribution \(P\): \[ \mathbf{X} = \{X_1,X_2,\ldots,X_n\} \overset{iid}{\sim} P \,. \] The expected value for this distribution is \(E[X] = \mu\), while the variance is \(V[X] = \sigma^2\) (assumed to be finite).

So we have data…now what?

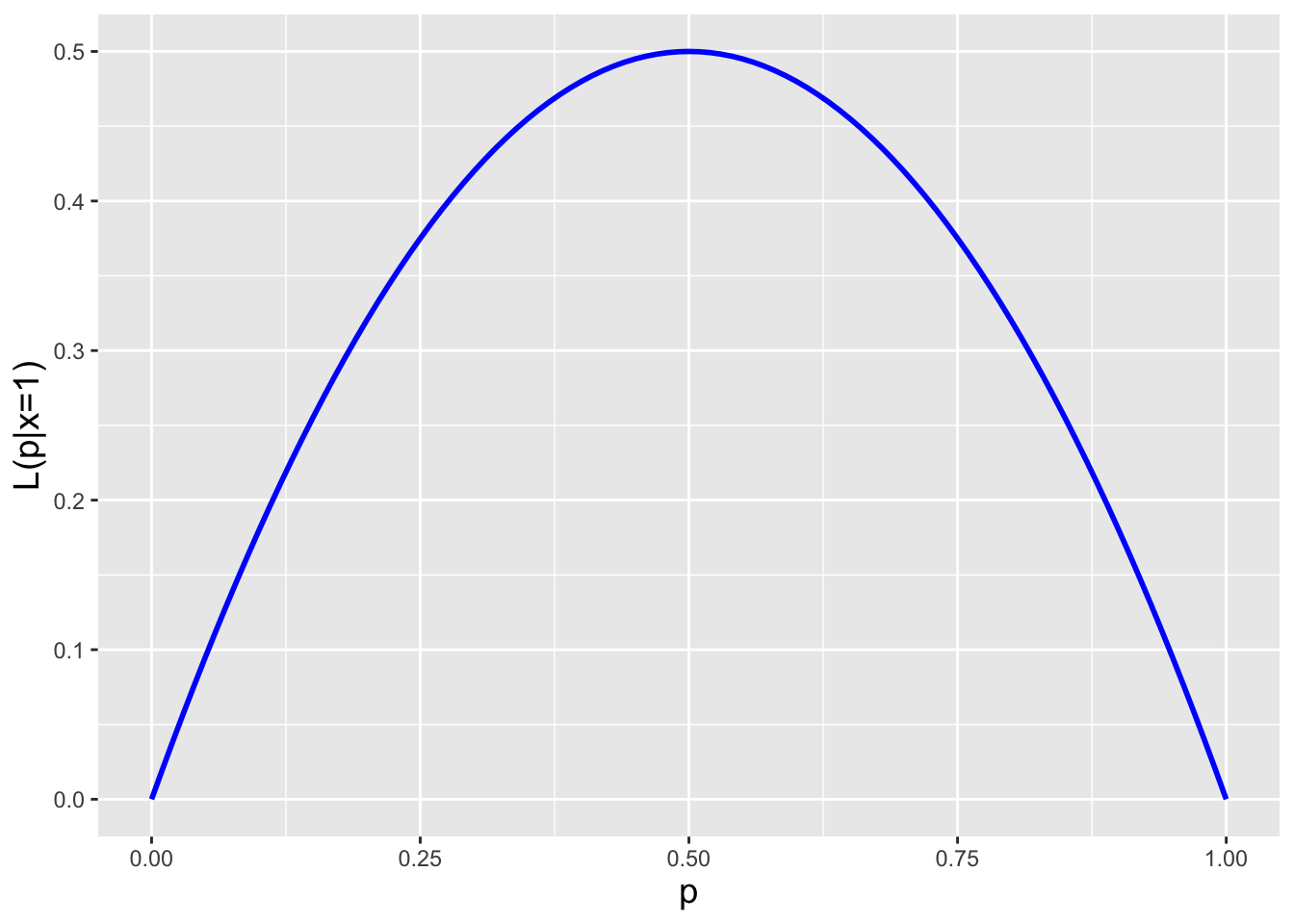

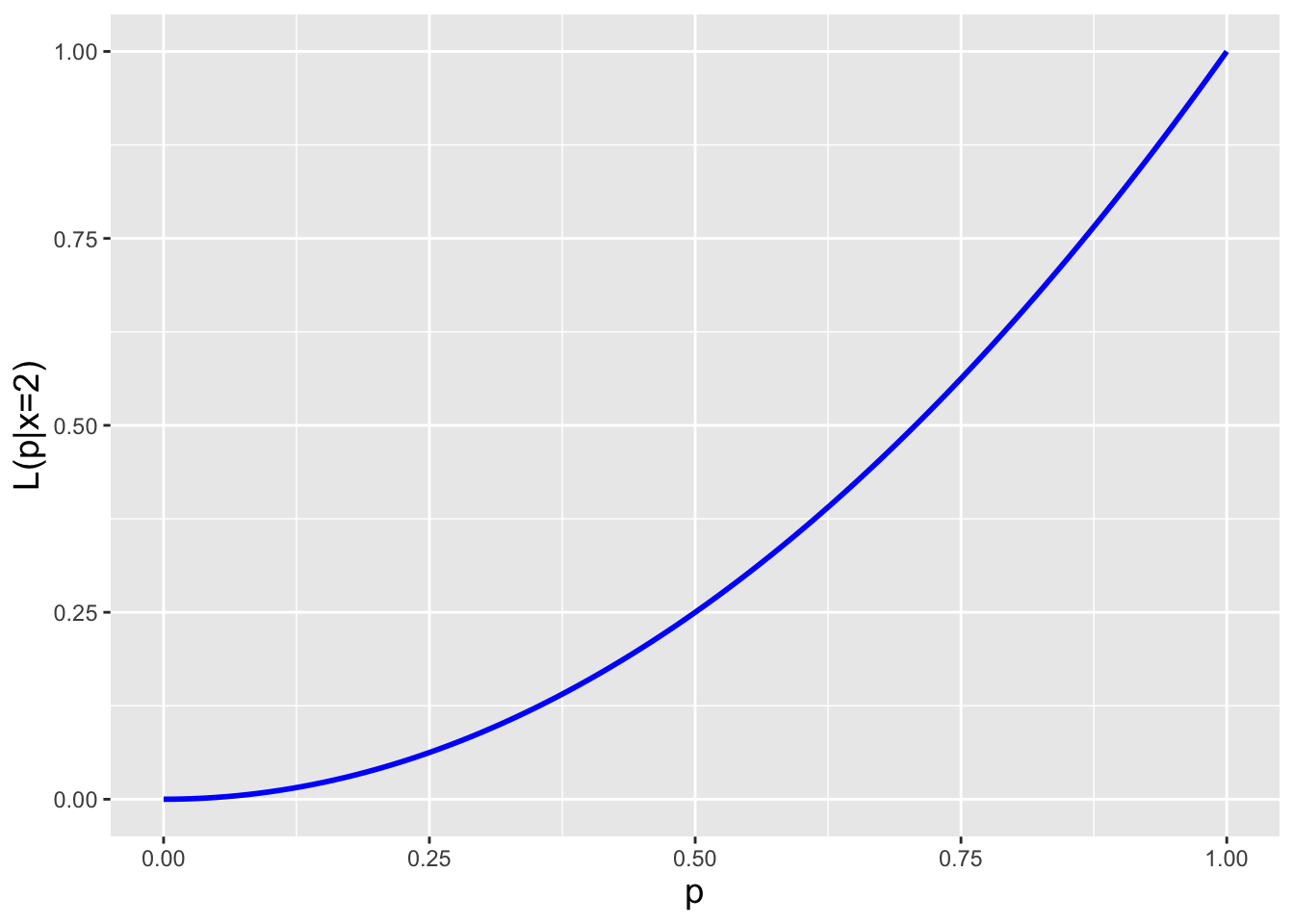

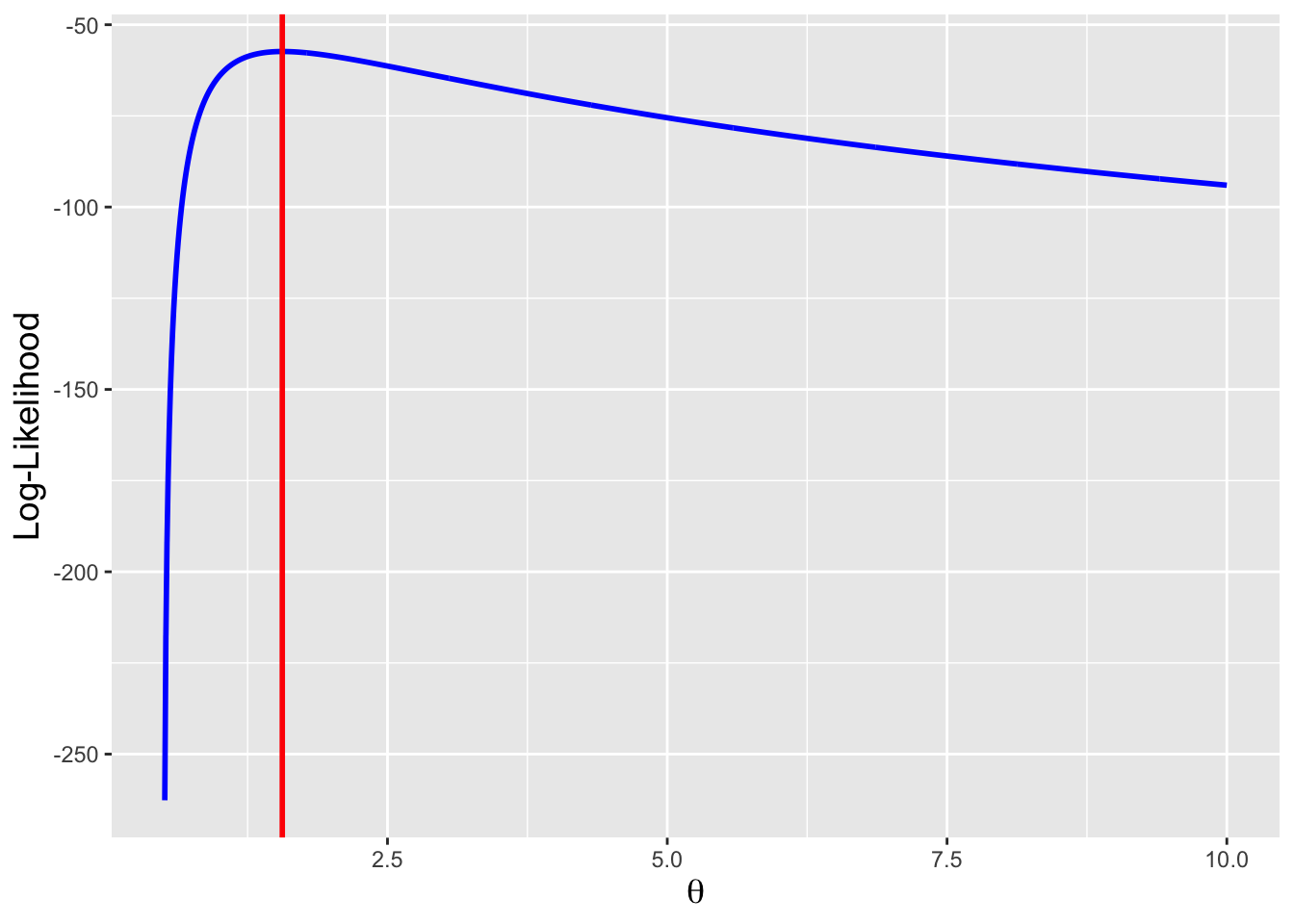

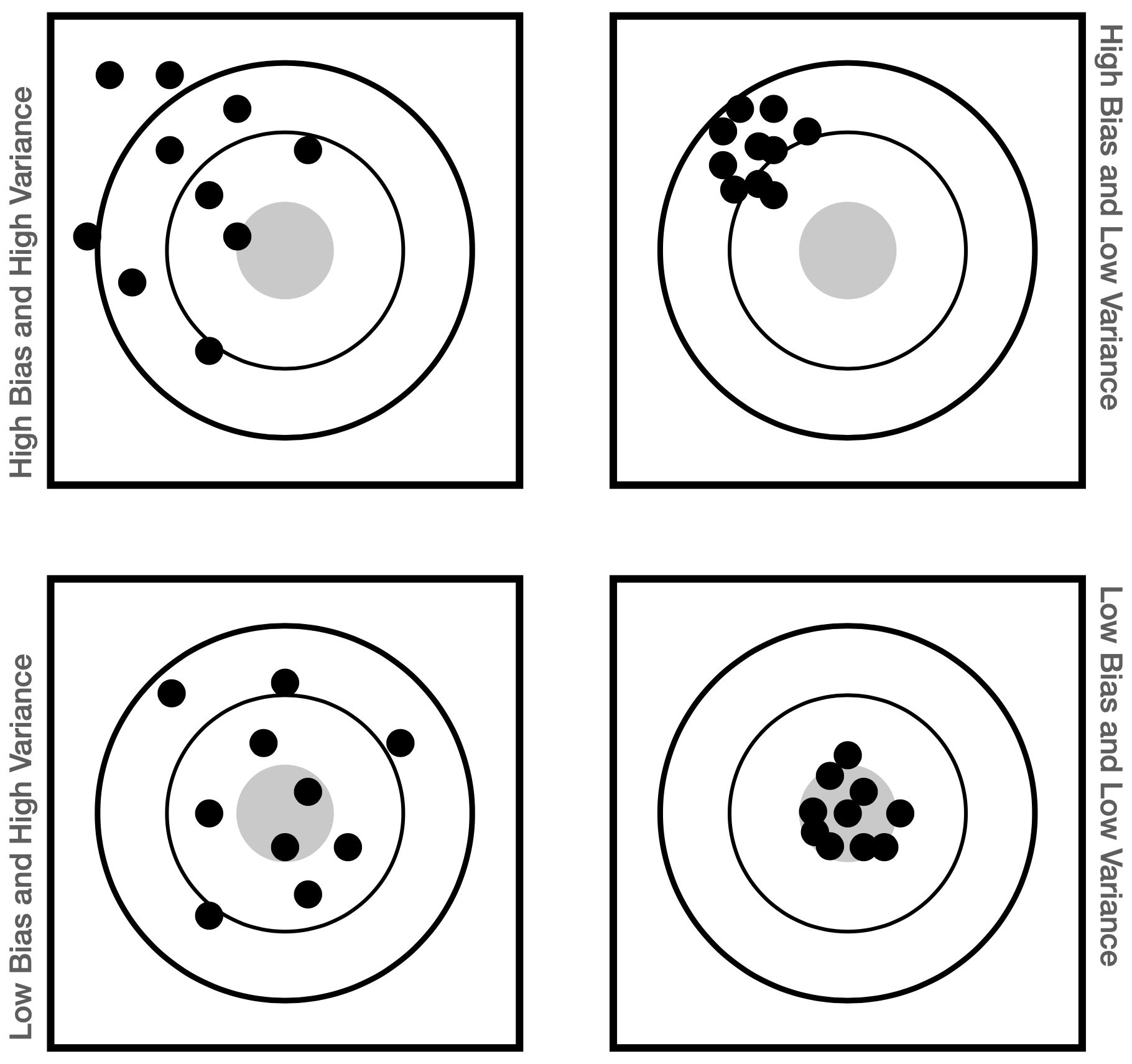

Figure 1.24: The canonical experiment-and-infer cycle. We gather data sampled from an unknown population, assume that the population can be represented by some family of distributions parameterized by \(\theta\), and compute and use statistics to infer the value(s) of \(\theta\).