3 The Binomial (and Related) Distributions

3.1 Motivation

Let’s assume we are holding a coin. It may be a fair coin (meaning that the probabilities of observing heads or tails in a given flip of the coin are each 0.5)…or perhaps it is not. We decide that we are going to flip the coin some fixed number of times \(k\), and we will record the outcome of each flip: \[ \mathbf{Y} = \{Y_1,Y_2,\ldots,Y_k\} \,, \] where, e.g., \(Y_1 = 1\) if we observe heads or \(0\) if we observe tails. This is an example of a Bernoulli process, where “process” denotes a sequence of observations, and “Bernoulli” indicates that there are two possible discrete outcomes for each observation.

A binomial experiment is one that generates Bernoulli process data through the running of \(k\) trials (e.g., \(k\) separate coin flips). The properties of such an experiment are that:

- The number of trials \(k\) is fixed in advance.

- Each trial has two possible outcomes, generically denoted as \(S\) (success) or \(F\) (failure).

- The probability of success remains \(p\) throughout the experiment.

- The outcome of any one trial is independent of the outcomes of the others.

The random variable of interest for a binomial experiment is the number of observed successes. A closely related alternative to a binomial experiment is a negative binomial experiment, where the number of successes \(s\) is fixed in advance, instead of the number of trials \(k\), and the random variable of interest is the number of failures that we observe before achieving \(s\) successes. A simple example would be flipping a coin until \(s\) heads are observed and recording the overall number of tails that we observe.

As a side note to the third point above, about the probability of success remaining \(p\) throughout the experiment: binomial and negative binomial experiments rely on sampling with replacement…if we observe a head for a given coin flip, we can observe heads again in the future. In the real world, however, the reader will observe instances where, e.g., a binomial distribution is used to model experiments featuring sampling without replacement: we have \(K = 100\) widgets, of which ten are defective; we check one to see if it is defective (with probability \(p = 0.1\)) and set it aside, then check another (with probability either 10/99 or 9/99, depending on the outcome of the first trial), etc. The convention for using the binomial distribution to model data in such a situation is that it is fine to do so if the number of trials \(k \lesssim K/10\). However, in the age of computers, there is no reason to apply the binomial distribution when we can apply the hypergeometric distribution instead.

And, as a side note to the fourth point above, about the outcome of each trial being independent of the outcomes of the others: in a general process, each datum can be dependent on the data observed previously. How each datum depends on previous data defines the type of process that is observed: a Markov process, a Gaussian process, etc. A Bernoulli process is termed a memoryless process because it consists of independent (and identically distributed) data.

3.2 Probability Mass Function

Let’s focus first on the outcome of a binomial experiment, with the random variable \(X\) being the number of observed successes in \(k\) trials. What is the probability of observing \(X=x\) successes, if the probability of observing a success in any one trial is \(p\)? \[\begin{align*} \mbox{$x$ successes}&: p^x \\ \mbox{$k-x$ failures}&: (1-p)^{k-x} \,. \end{align*}\] So \(P(X=x) = p^x (1-p)^{k-x}\)…but, no, this isn’t right. Let’s start again and think this through. Assume \(k = 2\). The sample space of possible experimental outcomes is \[ \Omega = \{ SS, SF, FS, FF \} \,. \] If \(p\) = 0.5, then we can see that the probability of observing one success in two trials is 0.5…but our proposed probability mass function tells us that \(P(X=1) = (0.5)^1 (1-0.5)^1 = 0.25\). What are we missing? We are missing that there are two ways of observing a single success…and we need to count both. Because we ultimately do not care about the order in which successes and failures are observed, we utilize counting via combination: \[ \binom{k}{x} = \frac{k!}{x! (k-x)!} \,, \] where the exclamation point represents the factorial function \(x! = x(x-1)(x-2)\cdots 1\). So now we can correctly write down the binomial probability mass function: \[ P(X=x) = p_X(x) = \binom{k}{x} p^x (1-p)^{k-x} ~~~ x \in \{0,\ldots,k\} \,. \] We denote the distribution of the random variable \(X\) as \(X \sim\) Binomial(\(k\),\(p\)). Note that when \(k = 1\), we have a Bernoulli distribution.
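As a quick numerical check of the counting argument, the sketch below evaluates the pmf directly from the formula and compares it with R's built-in `dbinom()`. The helper name `binom.pmf` is a hypothetical choice made for this illustration only.

```r
# hand-coded binomial pmf: choose(k,x) * p^x * (1-p)^(k-x)
binom.pmf <- function(x, k, p) {
  choose(k, x) * p^x * (1 - p)^(k - x)
}

k <- 2
p <- 0.5
binom.pmf(1, k, p)              # exactly one success in two trials
dbinom(1, size = k, prob = p)   # same quantity from the built-in function
sum(binom.pmf(0:k, k, p))       # the probability masses sum to 1
```

Without the `choose(k, x)` factor, the value for \(x = 1\) would be 0.25 rather than 0.5, which is the discrepancy noted in the text.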

Recall: a probability mass function is one way to represent a discrete probability distribution, and it has the properties (a) \(0 \leq p_X(x) \leq 1\) and (b) \(\sum_x p_X(x) = 1\), where the sum is over all values of \(x\) in the distribution’s domain.

(The reader should note that the number of trials is commonly denoted as \(n\), not as \(k\). However, since \(n\) is conventionally used to denote the sample size in an experiment, to avoid confusion we use \(k\) to denote the number of trials in this text.)

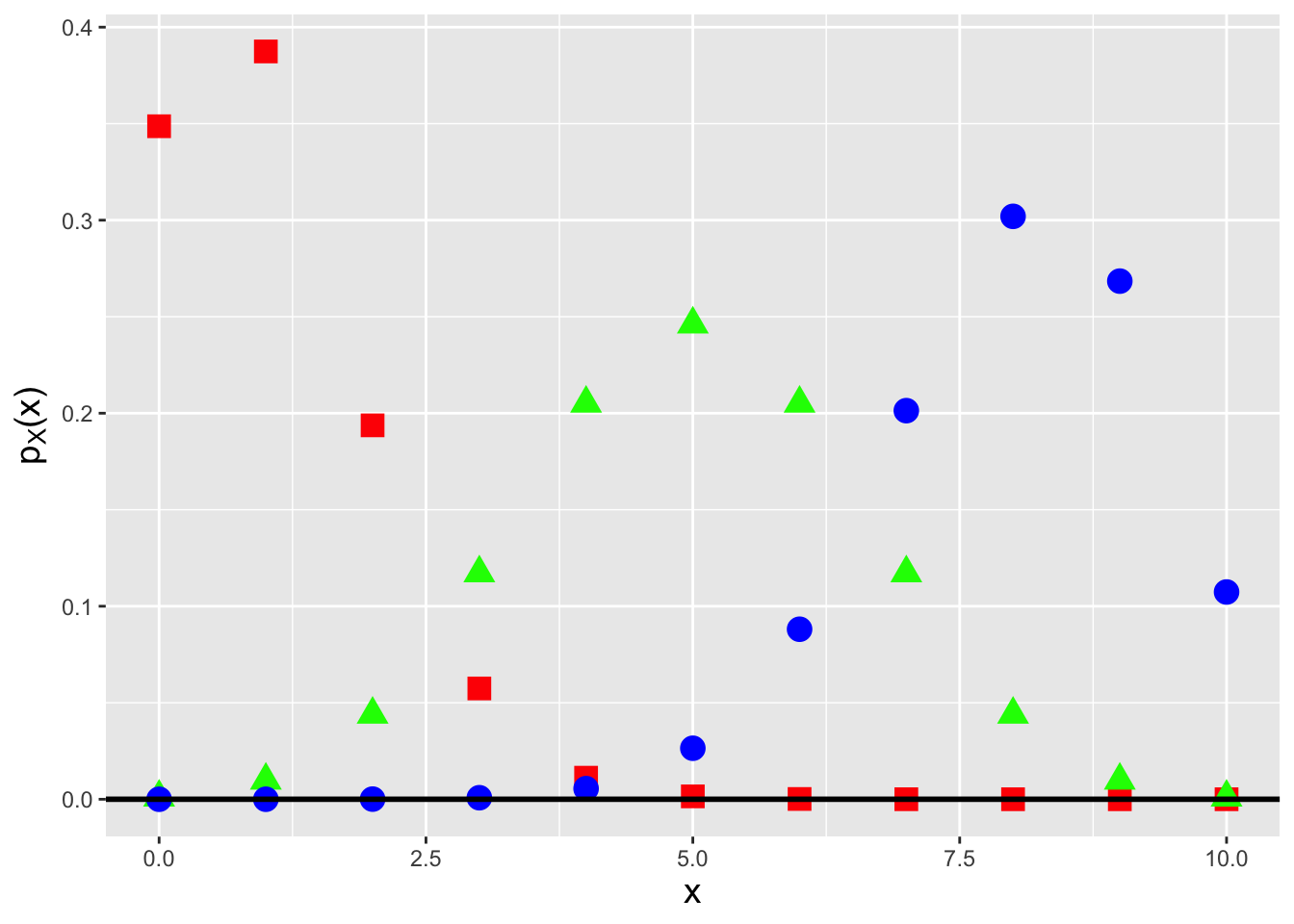

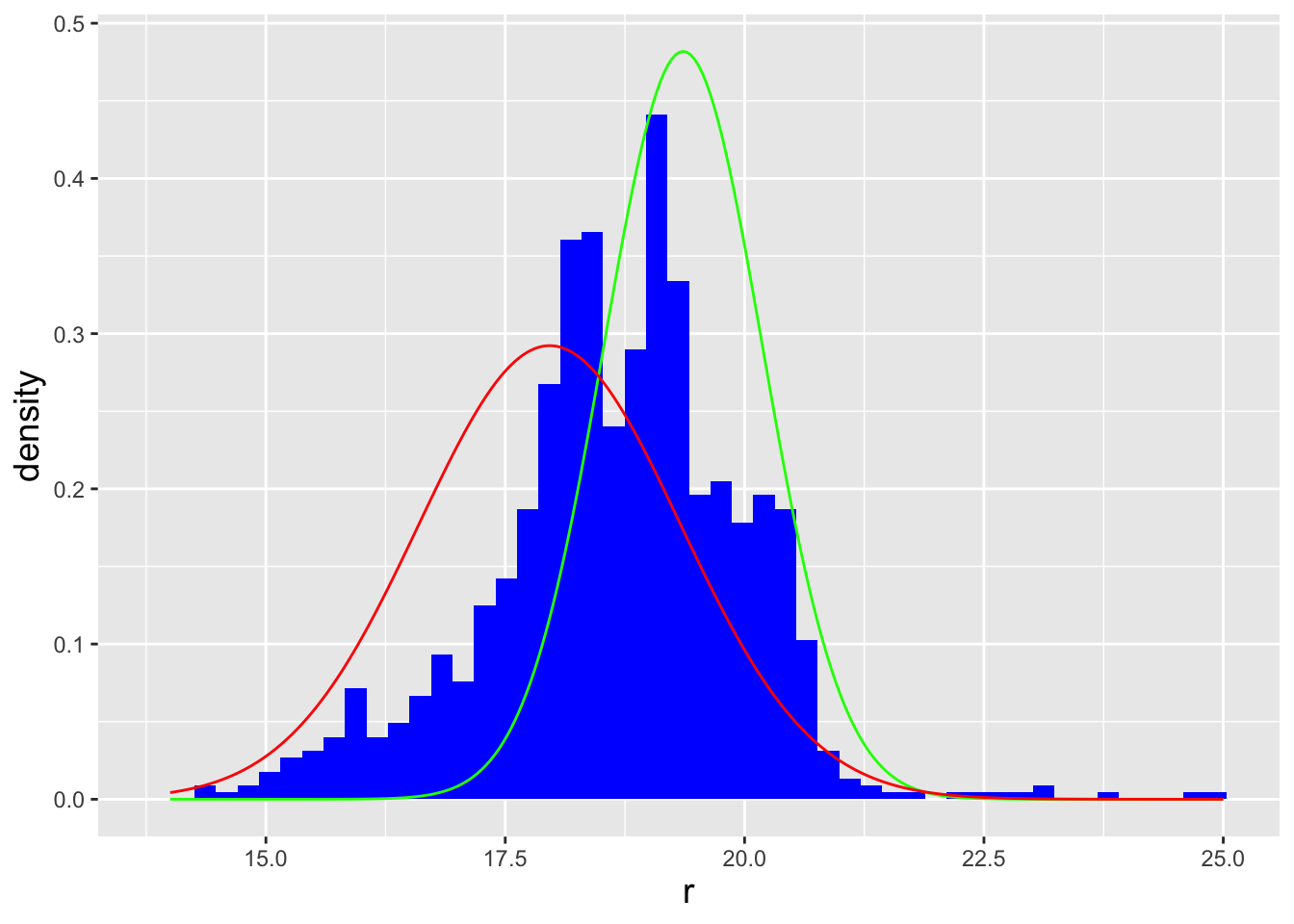

In Figure 3.1, we display three binomial pmfs, one each for probabilities of success 0.1 (red, to the left), 0.5 (green, to the center), and 0.8 (blue, to the right). This figure indicates an important aspect of the binomial pmf, namely that it can attain a shape akin to that of a normal distribution, if \(p\) is such that any truncation observed at the values \(x=0\) and \(x=k\) is minimal. In fact, a binomial random variable converges in distribution to a normal random variable in certain limiting situations, as we show in an example below.

Figure 3.1: Binomial probability mass functions for number of trials \(k = 10\) and success probabilities \(p = 0.1\) (red squares), 0.5 (green triangles), and 0.8 (blue circles).

Recall: the expected value of a discretely distributed random variable is \[ E[X] = \sum_x x p_X(x) \,, \] where the sum is over all values of \(x\) within the domain of the pmf \(p_X(x)\). The expected value is equivalent to a weighted average, with the weight for each possible value of \(x\) given by \(p_X(x)\).

For the binomial distribution, the expected value is \[ E[X] = \sum_{x=0}^k x \binom{k}{x} p^x (1-p)^{k-x} \,. \] At first, this does not appear to be easy to evaluate. One trick in our arsenal is to pull constants out of the summation such that whatever is left as the summand is a pmf (and thus sums to 1). Let’s try this here: \[\begin{align*} E[X] &= \sum_{x=0}^k x \binom{k}{x} p^x (1-p)^{k-x} \\ &= \sum_{x=1}^k x \frac{k!}{x!(k-x)!} p^x (1-p)^{k-x} \\ &= \sum_{x=1}^k \frac{k!}{(x-1)!(k-x)!} p^x (1-p)^{k-x} \\ &= kp \sum_{x=1}^k \frac{(k-1)!}{(x-1)!(k-x)!} p^{x-1} (1-p)^{k-x} \,. \end{align*}\] The summation appears almost like that of a binomial random variable. Let’s set \(y = x-1\). Then \[\begin{align*} E[X] &= kp \sum_{x=1}^k \frac{(k-1)!}{(x-1)!(k-x)!} p^{x-1} (1-p)^{k-x} \\ &= kp \sum_{y=0}^{k-1} \frac{(k-1)!}{y!(k-(y+1))!} p^y (1-p)^{k-(y+1)} \\ &= kp \sum_{y=0}^{k-1} \frac{(k-1)!}{y!((k-1)-y)!} p^y (1-p)^{(k-1)-y} \,. \end{align*}\] The summand is now the pmf for the random variable \(Y \sim\) Binomial(\(k-1\),\(p\)), summed over all values of \(y\) in the domain of the distribution. Thus the summation evaluates to 1: \(E[X] = kp\). In an example below, we use a similar strategy to determine the variance \(V[X] = kp(1-p)\).

A negative binomial experiment is governed by the negative binomial distribution, whose pmf is \[ p_X(x) = \binom{x+s-1}{x} p^s (1-p)^x ~~~ x \in \{0,1,2,\ldots\} \,. \] The form of this pmf follows from the fact that the underlying Bernoulli process would consist of \(x+s\) data, with the last datum being the observed success that ends the experiment. The first \(x+s-1\) data would feature \(s-1\) successes and \(x\) failures, with the order of success and failure not mattering…so we can view these data as being binomially distributed (albeit with \(x\) representing failures…hence the “negative” in negative binomial!): \[ p_X(x) = \underbrace{\binom{x+s-1}{x} p^{s-1} (1-p)^x}_{\mbox{first $x+s-1$ trials}} \cdot \underbrace{p}_{\mbox{last trial}} \,. \] Note that when \(s = 1\), the resulting distribution is called the geometric distribution.

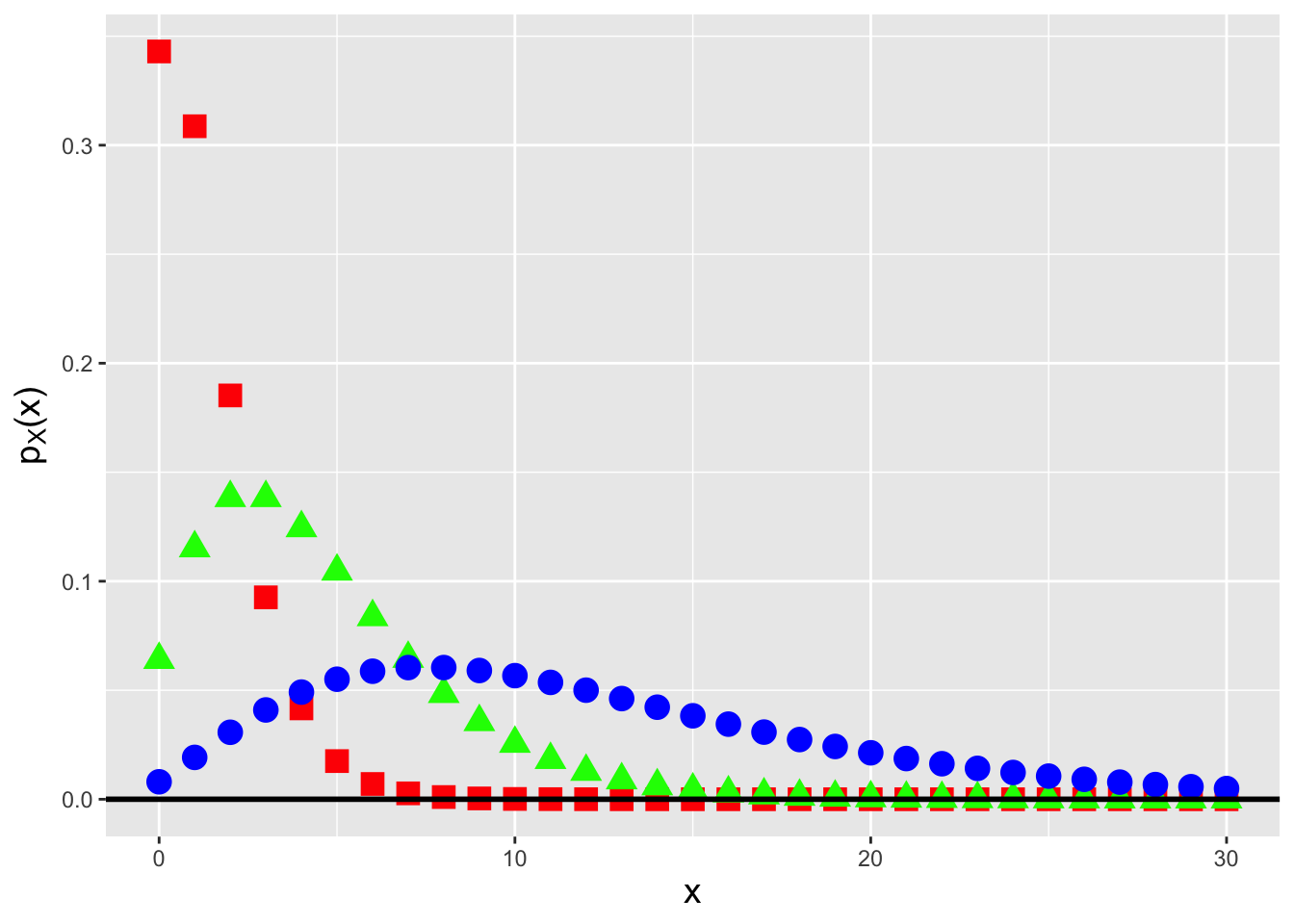

Figure 3.2: Negative binomial probability mass functions for the number of successes \(s = 2\) and success probabilities \(p = 0.7\) (red squares), 0.5 (green triangles), and 0.2 (blue circles).

In an example below, we show how one would derive the expected value of a negative binomial random variable, \(E[X] = s(1-p)/p\). (We can enact a similar calculation to show that the variance is \(V[X] = s(1-p)/p^2\).)

3.2.1 Variance of a Binomial Random Variable

Recall: the variance of a discretely distributed random variable is \[ V[X] = \sum_x (x-\mu)^2 p_X(x) = E[X^2] - (E[X])^2\,, \] where the sum is over all values of \(x\) in the domain of the pmf \(p_X(x)\). The variance represents the square of the “width” of a probability mass function, where by “width” we mean the range of values of \(x\) for which \(p_X(x)\) is effectively non-zero.

The variance of a random variable is given by the shortcut formula that we have been using since Chapter 1: \(V[X] = E[X^2] - (E[X])^2\). So we would expect that we would need to compute \(E[X^2]\) here, since we already know that \(E[X] = kp\). But for reasons that will become apparent below, it is actually far easier for us to compute \(E[X(X-1)]\), and to work with that to eventually derive the variance: \[\begin{align*} E[X(X-1)] &= \sum_{x=0}^k x(x-1) \binom{k}{x} p^x (1-p)^{k-x} \\ &= \sum_{x=0}^k x(x-1) \frac{k!}{x!(k-x)!} p^x (1-p)^{k-x} \\ &= \sum_{x=2}^k \frac{k!}{(x-2)!(k-x)!} p^x (1-p)^{k-x} \\ &= k(k-1) p^2 \sum_{x=2}^k \frac{(k-2)!}{(x-2)!(k-x)!} p^{x-2} (1-p)^{k-x} \,. \end{align*}\] The advantage to using \(x(x-1)\) was that it matches the first two terms of \(x! = x(x-1)\cdots(1)\), allowing easy cancellation. If we set \(y = x-2\), we find that the summand above will, in a similar manner as in the calculation of \(E[X]\), become the pmf for the random variable \(Y \sim\) Binomial(\(k-2,p\))…and thus the summation will evaluate to 1.

So \(E[X(X-1)] = E[X^2] - E[X] = k(k-1)p^2\), and \(E[X^2] = k^2p^2-kp^2 + kp = V[X] + (E[X])^2\), and \(V[X] = k^2p^2-kp^2+kp-k^2p^2 = kp-kp^2 = kp(1-p)\). Done.
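The algebra above can be checked numerically by summing over the pmf directly. This is a minimal sketch; the values \(k = 10\) and \(p = 0.3\) are arbitrary choices made for illustration.

```r
k <- 10
p <- 0.3
x <- 0:k
px <- dbinom(x, size = k, prob = p)

E.X   <- sum(x * px)             # should match k*p
E.XX1 <- sum(x * (x - 1) * px)   # should match k*(k-1)*p^2
V.X   <- E.XX1 + E.X - E.X^2     # V[X] = E[X(X-1)] + E[X] - (E[X])^2

c(E.X, k * p)
c(E.XX1, k * (k - 1) * p^2)
c(V.X, k * p * (1 - p))
```

Each printed pair should agree to within floating-point precision.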

3.2.2 The Expected Value of a Negative Binomial Random Variable

The calculation for the expected value \(E[X]\) for a negative binomial random variable is similar to that for a binomial random variable: \[\begin{align*} E[X] &= \sum_{x=0}^{\infty} x \binom{x+s-1}{x} p^s (1-p)^x \\ &= \sum_{x=0}^{\infty} x \frac{(x+s-1)!}{(s-1)!x!} p^s (1-p)^x \\ &= \sum_{x=1}^{\infty} \frac{(x+s-1)!}{(s-1)!(x-1)!} p^s (1-p)^x \,. \end{align*}\] Let \(y = x-1\). Then \[\begin{align*} E[X] &= \sum_{y=0}^{\infty} \frac{(y+s)!}{(s-1)!y!} p^s (1-p)^{y+1} \\ &= \sum_{y=0}^{\infty} s(1-p) \frac{(y+s)!}{s!y!} p^s (1-p)^y \\ &= \sum_{y=0}^{\infty} \frac{s(1-p)}{p} \frac{(y+s)!}{s!y!} p^{s+1} (1-p)^y \\ &= \frac{s(1-p)}{p} \sum_{y=0}^{\infty} \frac{(y+s)!}{s!y!} p^{s+1} (1-p)^y \\ &= \frac{s(1-p)}{p} \,. \end{align*}\] The summand is that of a negative binomial distribution for \(s+1\) successes, hence the summation is 1, and thus \(E[X] = s(1-p)/p\).
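A numerical check of this result is sketched below. It assumes that R's `dnbinom()` uses the same parameterization as the text (the number of failures before the `size`-th success), and it truncates the infinite sum at an arbitrarily chosen upper limit where the remaining mass is negligible.

```r
s <- 3
p <- 0.6
x <- 0:1000                             # truncated domain; the tail mass is negligible here
px <- dnbinom(x, size = s, prob = p)    # x counts failures before the s-th success

# the pmf written out by hand, for comparison with dnbinom()
px.hand <- choose(x + s - 1, x) * p^s * (1 - p)^x
all.equal(px, px.hand)

E.X <- sum(x * px)                      # compare with s*(1-p)/p
V.X <- sum(x^2 * px) - E.X^2            # compare with s*(1-p)/p^2
c(E.X, s * (1 - p) / p)
c(V.X, s * (1 - p) / p^2)
```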

We can use a similar calculation in which we evaluate \(E[X(X-1)]\) in order to derive the variance of a negative binomial random variable: \(V[X] = s(1-p)/p^2\).

3.2.3 Binomial Distribution: Normal Approximation

In certain limiting situations, a binomial random variable converges in distribution to a normal random variable. In other words, if \[ P\left(\frac{X-\mu}{\sigma} < a \right) = P\left(\frac{X-kp}{\sqrt{kp(1-p)}} < a \right) \approx P(Z < a) = \Phi(a) \,, \] then we can state that \(X \stackrel{d}{\rightarrow} Y \sim \mathcal{N}(kp,kp(1-p))\), or that \(X\) converges in distribution to a normal random variable \(Y\). Now, what do we mean by “certain limiting situations”? For instance, if \(p\) is close to zero or one, then the binomial distribution is truncated at 0 or at \(k\), and the shape of the pmf does not appear to be like that of a normal pdf. One convention is that the normal approximation is adequate if \[ k > 9\left(\frac{\mbox{max}(p,1-p)}{\mbox{min}(p,1-p)}\right) \,. \] The reader might question why we would mention this approximation at all: if we have binomially distributed data and a computer, then we need not ever utilize such an approximation to, e.g., compute probabilities. This point is correct (and is the reason why, for instance, we do not mention the so-called continuity correction here; our goal is not to compute probabilities). The reason we mention this is that this approximation underlies a commonly used confidence interval construction, the Wald interval, that we will mention later in the chapter.
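To get a feel for the quality of the approximation, the sketch below compares the exact binomial probability with \(\Phi(a)\) for one setting. The values \(k = 100\), \(p = 0.5\), and \(a = 1\) are arbitrary choices made for illustration.

```r
k <- 100
p <- 0.5
a <- 1

# rule-of-thumb check: k > 9 * max(p,1-p) / min(p,1-p)
rule.ok <- k > 9 * max(p, 1 - p) / min(p, 1 - p)

# P((X - kp)/sqrt(kp(1-p)) < a), computed exactly from the binomial pmf
x <- 0:k
z <- (x - k * p) / sqrt(k * p * (1 - p))
p.exact  <- sum(dbinom(x[z < a], size = k, prob = p))
p.approx <- pnorm(a)

rule.ok
c(p.exact, p.approx)
```

The two probabilities differ by a few hundredths here; the gap is a reminder that the approximation is a statement about limiting behavior.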

3.2.4 Computing Probabilities

Let \(X\) be a random variable sampled from a binomial distribution with number of trials \(k = 6\) and success probability \(p = 0.4\), and let \(Y\) be a random variable sampled from a negative binomial distribution with number of successes \(s = 3\) and success probability \(p = 0.6\).

- What is \(P(2 \leq X < 4)\)?

To find this probability, we sum over the binomial probability mass function for values \(x = 2\) and \(x = 3\). (The form of the inequalities matters for discrete distributions!) We can perform this computation analytically: \[\begin{align*} P(2 \leq X < 4) &= \binom{6}{2} (0.4)^2 (1-0.4)^4 + \binom{6}{3} (0.4)^3 (1-0.4)^3 \\ &= \frac{6!}{2!4!} \cdot 0.16 \cdot 0.1296 + \frac{6!}{3!3!} \cdot 0.064 \cdot 0.216 = 15 \cdot 0.0207 + 20 \cdot 0.0138 = 0.5875 \,. \end{align*}\] There is a 58.75% chance that the next time we sample data according to this distribution, we will observe a value of 2 or 3.

It is ultimately simpler to use R to perform this calculation. While we can compute the probability as the difference of two cumulative distribution function values, for discrete distributions it is often more straightforward to sum over the relevant probability masses directly, since then we need not worry about whether the input to the cdf is correct given the form of the inequality:
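The call itself does not appear in this text. A minimal sketch that reproduces the value shown next, assuming the standard `dbinom()` interface with arguments `size` and `prob`, is:

```r
# P(2 <= X < 4) = p_X(2) + p_X(3) for X ~ Binomial(6, 0.4)
prob <- sum(dbinom(2:3, size = 6, prob = 0.4))
prob
```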

## [1] 0.58752

- What is \(P(Y > 1)\)?

If we were to determine this probability by hand, the first thing we would do is specify that we will compute \(1 - P(Y \leq 1)\), as this has a finite (and small!) number of terms: \[\begin{align*} P(Y > 1) &= 1 - \binom{0+3-1}{0} (0.6)^3 (1-0.6)^0 - \binom{1+3-1}{1} (0.6)^3 (1-0.6)^1 \\ &= 1 - 1 \cdot 0.216 \cdot 1 - 3 \cdot 0.216 \cdot 0.4 = 0.5248 \,. \end{align*}\] There is a 52.48% chance that we would observe more than one failure the next time we sample data according to this distribution.

Like before, it is ultimately simpler to use R:
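The call itself does not appear in this text. A minimal sketch that reproduces the value shown next, assuming that `pnbinom()` counts failures before the `size`-th success (as the text's negative binomial does), is:

```r
# P(Y > 1) = 1 - P(Y <= 1) for a negative binomial with s = 3 and p = 0.6
prob <- 1 - pnbinom(1, size = 3, prob = 0.6)
prob
```

The same value can be obtained with `pnbinom(1, size = 3, prob = 0.6, lower.tail = FALSE)`.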

## [1] 0.5248

3.2.5 The Binomial Distribution as Part of the Exponential Family

Recall that the exponential family of distributions, introduced in the last chapter, comprises distributions whose probability mass or density functions can be written in the form \[\begin{align*} h(x) \exp\left( \eta(\theta)T(x) - A(\theta) \right) \,. \end{align*}\] Is the binomial distribution a member of the larger exponential family of distributions? It would initially appear that the answer is no (as there are no exponential functions in its pmf), but if we note that \[\begin{align*} p^x = \exp\left(\log p^x\right) = \exp\left(x \log p\right) \end{align*}\] and that \[\begin{align*} (1-p)^{k-x} = \exp\left(\log (1-p)^{k-x}\right) = \exp\left((k-x) \log (1-p)\right) \,, \end{align*}\] we can see that \[\begin{align*} p_X(x \vert p) = \binom{k}{x} \exp\left( x [ \log(p) - \log(1-p) ] + k \log (1-p) \right) \end{align*}\] and thus that \[\begin{align*} h(x) &= \binom{k}{x} \\ \eta(p) &= \log(p) - \log(1-p) = \log \frac{p}{1-p} \\ T(x) &= x \\ A(p) &= -k \log(1-p) \,. \end{align*}\] The binomial distribution is indeed a member of the exponential family. We will leave it as an exercise to the reader to show that the negative binomial distribution lies within the exponential family as well.
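The decomposition can be verified numerically: rebuilding the pmf from \(h(x)\), \(\eta(p)\), \(T(x)\), and \(A(p)\) should return the same values as `dbinom()`. This is a minimal sketch with arbitrarily chosen \(k\) and \(p\).

```r
k <- 10
p <- 0.3
x <- 0:k

h   <- choose(k, x)         # h(x)
eta <- log(p / (1 - p))     # eta(p), the log-odds
A   <- -k * log(1 - p)      # A(p)

pmf.expfam <- h * exp(eta * x - A)   # T(x) = x
all.equal(pmf.expfam, dbinom(x, size = k, prob = p))
```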

3.3 Cumulative Distribution Function

Recall: the cumulative distribution function, or cdf, is another means by which to encapsulate information about a probability distribution. For a discrete distribution, it is defined as \(F_X(x) = \sum_{y\leq x} p_X(y)\), and it is defined for all values \(x \in (-\infty,\infty)\), with \(F_X(-\infty) = 0\) and \(F_X(\infty) = 1\).

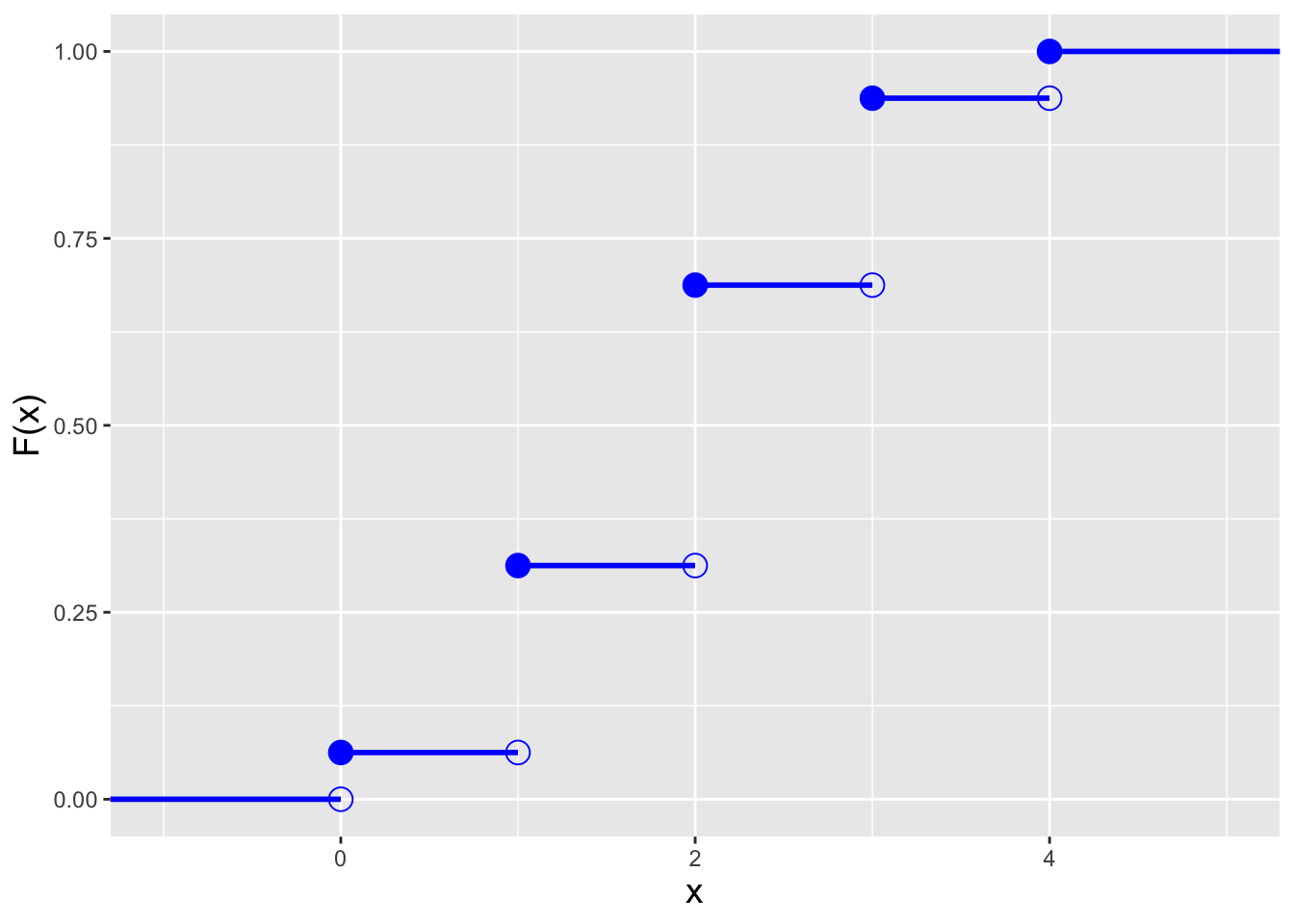

For the binomial distribution, the cdf is \[ F_X(x) = \sum_{y=0}^{\lfloor x \rfloor} p_X(y) = \sum_{y=0}^{\lfloor x \rfloor} \binom{k}{y} p^y (1-p)^{k-y} \,, \] where \(\lfloor x \rfloor\) denotes the floor function, which returns the largest integer that is less than or equal to \(x\) (e.g., if \(x\) = 6.75, \(\lfloor x \rfloor\) = 6). (In closed form, we can represent this cdf with a regularized incomplete beta function, which is not analytically easy to work with.) Also, because a pmf is defined at discrete values of \(x\), its associated cdf is a step function, as illustrated in the left panel of Figure 3.3. As we can see in this figure, the cdf steps up at each value of \(x\) in the domain of \(p_X(x)\), and unlike the case for continuous distributions, the form of the inequalities in a probabilistic statement matters: \(P(X < x)\) and \(P(X \leq x)\) will not be the same if \(x\) is one of the integers \(\{0,1,2,\ldots,k\}\).

Recall: an inverse cdf \(x = F_X^{-1}(q)\) takes as input a distribution quantile \(q \in [0,1]\) and returns the corresponding value of \(x\). A discrete distribution has no unique inverse cdf; it is convention to utilize the generalized inverse cdf, \(x = \mbox{inf}\{x : F_X(x) \geq q\}\), where “inf” indicates that the function is to return the smallest value of \(x\) such that \(F_X(x) \geq q\).
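The generalized inverse can be written out in a few lines. In the sketch below, `gen.inv.cdf` is a hypothetical helper name introduced for illustration; the result is compared with R's built-in `qbinom()`.

```r
# generalized inverse cdf for Binomial(k, p): smallest x with F_X(x) >= q
gen.inv.cdf <- function(q, k, p) {
  x <- 0:k
  F.x <- pbinom(x, size = k, prob = p)
  x[min(which(F.x >= q))]
}

gen.inv.cdf(0.5, k = 4, p = 0.5)
qbinom(0.5, size = 4, prob = 0.5)   # built-in quantile function, for comparison
```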

In the right panel of Figure 3.3, we display the inverse cdf for the same distribution used to generate the figure in the left panel (\(k=4\) and \(p=0.5\)). Like the cdf, the inverse cdf for a discrete distribution is a step function. Below, in an example, we show how we adapt the inverse transform sampler algorithm of Chapter 1 to accommodate the step-function nature of an inverse cdf.

Figure 3.3: Illustration of the cumulative distribution function \(F_X(x)\) (left) and inverse cumulative distribution function \(F_X^{-1}(q)\) (right) for a binomial distribution with number of trials \(k = 4\) and probability of success \(p=0.5\).

3.3.1 Computing Probabilities

Because computing the binomial pmf for a range of values of \(x\) can be laborious, we typically utilize R functions when computing probabilities.

- If \(X \sim\) Binomial(10,0.6), what is \(P(4 \leq X < 6)\)?

We first note that due to the form of the inequality, we do not include \(X=6\) in the computation. Thus \(P(4 \leq X < 6) = p_X(4) + p_X(5)\), which equals \[ \binom{10}{4} (0.6)^4 (1-0.6)^6 + \binom{10}{5} (0.6)^5 (1-0.6)^5 \,. \] Even computing this is unnecessarily laborious; instead, we call on R:
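The call itself does not appear in this text. A minimal sketch that reproduces the value shown next, assuming the standard `dbinom()` interface, is:

```r
# P(4 <= X < 6) = p_X(4) + p_X(5) for X ~ Binomial(10, 0.6)
prob.sum <- sum(dbinom(4:5, size = 10, prob = 0.6))
prob.sum
```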

## [1] 0.3121349

(This utilizes R’s vectorization feature: we need not explicitly define a `for`-loop to evaluate `dbinom()` for \(x=4\) and then at \(x=5\).) We can also utilize cdf functions here: \(P(4 \leq X < 6) = P(X < 6) - P(X < 4) = P(X \leq 5) - P(X \leq 3) = F_X(5) - F_X(3)\), which in R is computed via
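Again the call is not shown in this text. A minimal sketch of the cdf-based computation, assuming the standard `pbinom()` interface, is:

```r
# P(4 <= X < 6) = F_X(5) - F_X(3) for X ~ Binomial(10, 0.6)
prob.cdf <- pbinom(5, size = 10, prob = 0.6) - pbinom(3, size = 10, prob = 0.6)
prob.cdf
```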

## [1] 0.3121349

As we can see, the direct summation approach is the more straightforward one.

- If \(X \sim\) Binomial(10,0.6), what is the value of \(a\) such that \(P(X \leq a) = 0.9\)?

First, we set up the inverse cdf formula: \[ P(X \leq a) = F_X(a) = 0.9 ~~ \Rightarrow ~~ a = F_X^{-1}(0.9) \,. \] Note that we didn’t do anything differently here than we would have done in a continuous distribution setting…and we can proceed directly to R because it utilizes the generalized inverse cdf algorithm.
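The call is not shown in this text. A minimal sketch that reproduces the value shown next, assuming the standard `qbinom()` interface, is:

```r
# smallest a such that F_X(a) >= 0.9 for X ~ Binomial(10, 0.6)
a <- qbinom(0.9, size = 10, prob = 0.6)
a
```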

## [1] 8

We can see immediately how the cdf for a discrete distribution is not a one-to-one function, as if we plug \(x = 8\) into the cdf, we will not recover the initial value \(q = 0.9\):
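A minimal sketch of the corresponding call (not shown in this text), assuming the standard `pbinom()` interface, is:

```r
# F_X(8) for X ~ Binomial(10, 0.6); this exceeds the requested q = 0.9
F.8 <- pbinom(8, size = 10, prob = 0.6)
F.8
```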

## [1] 0.9536426

3.3.2 Sampling Data From an Arbitrary Probability Mass Function

While we would always utilize R shortcut functions like `rbinom()` when they exist, there may be instances when we need to code our own functions for sampling data from discrete distributions. The code below shows such a function for an arbitrary probability mass function.

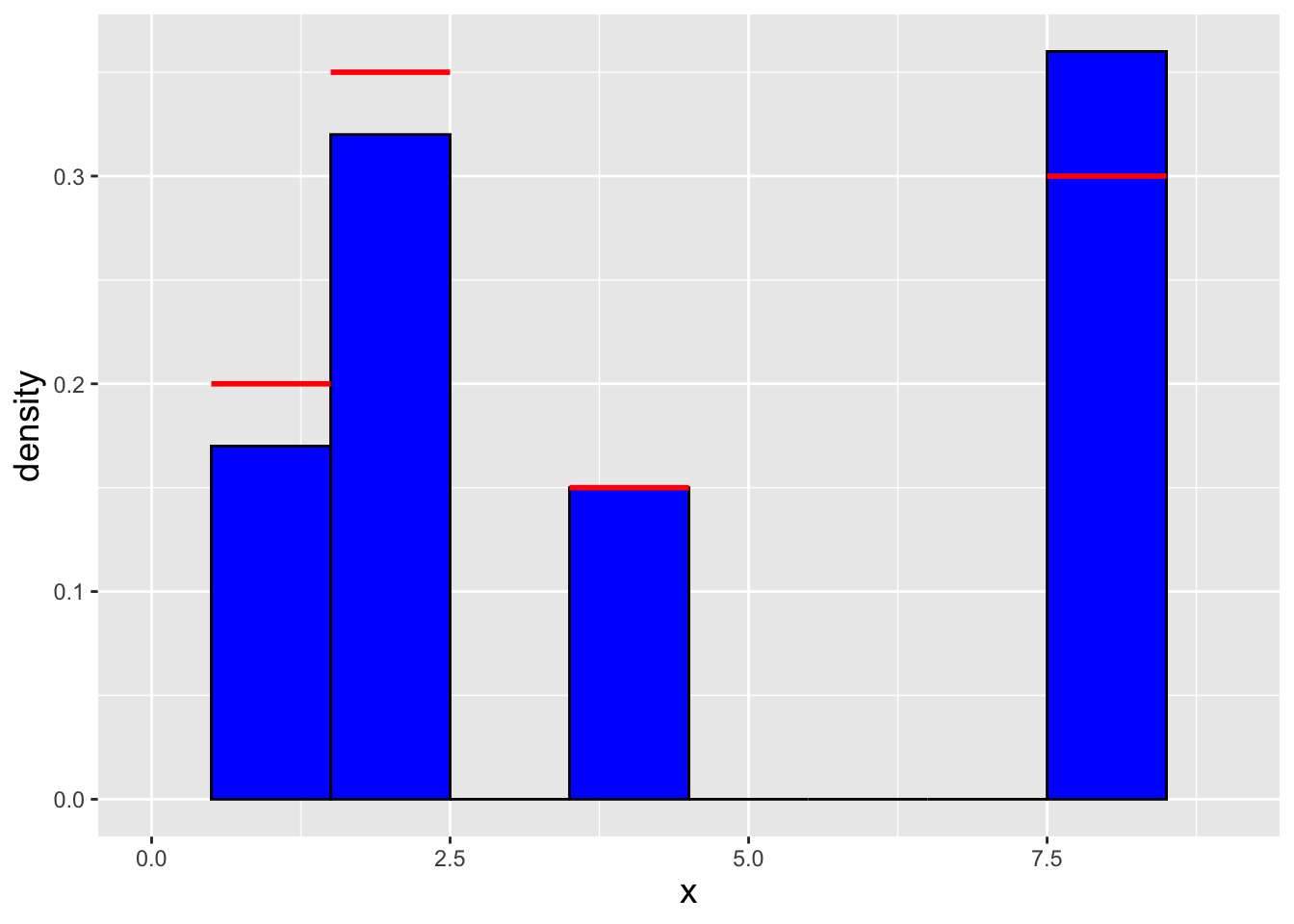

```r
set.seed(101)

x   <- c(1, 2, 4, 8)             # domain of x
p.x <- c(0.2, 0.35, 0.15, 0.3)   # p_X(x)
F.x <- cumsum(p.x)               # cumulative sum -> produces F_X(x)

n <- 100
q <- runif(n)                    # we still ultimately need runif!

# findInterval() returns bin numbers in [0,3], and not [1,4], hence we add 1;
# i = 1 means q is between 0 and F.x[1], etc.
i <- findInterval(q, F.x) + 1
x.sample <- x[i]
```

Figure 3.4: Histogram of \(n = 100\) iid data drawn using an inverse transform sampler adapted to the discrete distribution setting. The red lines indicate the true probability mass for each value of \(x\).

3.4 Linear Functions of Random Variables

Let’s assume we are given \(n\) iid binomial random variables: \(X_1,X_2,\ldots,X_n \sim\) Binomial(\(k\),\(p\)). Can we determine the distribution of the sum \(Y = \sum_{i=1}^n X_i\)? Yes, we can…via the method of moment-generating functions.

Recall: the moment-generating function, or mgf, is a means by which to encapsulate information about a probability distribution. When it exists, the mgf is given by \(m_X(t) = E[e^{tX}]\). Also, if \(Y = \sum_{i=1}^n a_iX_i\), then \(m_Y(t) = m_{X_1}(a_1t) m_{X_2}(a_2t) \cdots m_{X_n}(a_nt)\); if we can identify \(m_Y(t)\) as the mgf for a known family of distributions, then we can immediately identify the distribution for \(Y\) and the parameters of that distribution.

The mgf for the binomial distribution is \[\begin{align*} m_X(t) = E[e^{tX}] &= \sum_{x=0}^k e^{tx} \binom{k}{x} p^x (1-p)^{k-x} \\ &= \sum_{x=0}^k \binom{k}{x} (pe^t)^x (1-p)^{k-x} \,. \end{align*}\] We utilize the binomial theorem \[ (x+y)^k = \sum_{i=0}^k \binom{k}{i} x^i y^{k-i} \] to re-express \(m_X(t)\): \[ m_X(t) = [pe^t + (1-p)]^k \,. \] Note that one may see this written as \((pe^t+q)^k\), where \(q = 1-p\).

The mgf for \(Y = \sum_{i=1}^n X_i\) is thus \[ m_Y(t) = \prod_{i=1}^n m_{X_i}(t) = [m_X(t)]^n = [pe^t + (1-p)]^{nk} \,. \] We can see that this has the form of a binomial mgf: \(Y \sim\) Binomial(\(nk\),\(p\)), with expected value \(E[Y] = nkp\) and variance \(V[Y] = nkp(1-p)\). This makes sense, as the act of summing binomial data is equivalent to concatenating \(n\) separate Bernoulli processes into one longer Bernoulli process…whose data can subsequently be modeled using a binomial distribution.
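The mgf result can be cross-checked without simulation by convolving the pmf of a single \(X_i\) with itself \(n-1\) times. In the sketch below, `convolve.pmf` is a hypothetical helper written for this illustration, and \(n\), \(k\), and \(p\) are arbitrary.

```r
n <- 3; k <- 4; p <- 0.3

# pmf of the sum of two independent variables whose pmfs a and b
# are defined on 0, 1, 2, ...
convolve.pmf <- function(a, b) {
  out <- numeric(length(a) + length(b) - 1)
  for (i in seq_along(a)) {
    idx <- i:(i + length(b) - 1)
    out[idx] <- out[idx] + a[i] * b
  }
  out
}

p.x <- dbinom(0:k, size = k, prob = p)   # pmf of a single X_i
p.y <- p.x
for (i in seq_len(n - 1)) p.y <- convolve.pmf(p.y, p.x)   # pmf of X_1 + ... + X_n

all.equal(p.y, dbinom(0:(n * k), size = n * k, prob = p))
```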

While we can identify the distribution of the sum by name, we cannot say the same about the sample mean. We know that the expected value is \(E[\bar{X}] = \mu = kp\) and that the variance is \(V[\bar{X}] = \sigma^2/n = kp(1-p)/n\), but when we attempt to use the mgf method with \(a_i = 1/n\) instead of \(a_i = 1\), we find that \[ m_{\bar{X}}(t) = [pe^{t/n} + (1-p)]^{nk} \,. \] Changing \(t\) to \(t/n\) has the effect of creating an mgf that does not have the form of any known mgf. However, we do know the distribution: it has a pmf that is identical in form to that of the binomial distribution, but has the domain \(\{0,1/n,2/n,\ldots,k\}\). (We can derive this result mathematically by making the transformation \(\sum_{i=1}^n X_i \rightarrow (\sum_{i=1}^n X_i)/n\), as we see below in an example.) We could define the pmf ourselves using our own R function, but there is no real need to: as we will see, if we wish to construct a confidence interval for \(p\), we can just use the sum \(\sum_{i=1}^n X_i\) as our statistic. (We could also, in theory, utilize the Central Limit Theorem if \(n \gtrsim 30\), but there is absolutely no reason to do that to make inferences about \(p\): we know the distribution of the sum of the data exactly, and thus there is no need to fall back upon approximations.)

3.4.1 The MGF for a Geometric Random Variable

Recall that a geometric distribution is equivalent to a negative binomial distribution with number of successes \(s = 1\); its probability mass function is \[ p_X(x) = p (1-p)^x \,, \] with \(x \in \{0,1,\ldots\}\) and \(p \in (0,1]\). The moment-generating function for a geometric random variable is thus \[\begin{align*} m_X(t) = E[e^{tX}] &= \sum_{x=0}^{\infty} e^{tx} p (1-p)^x = p \sum_{x=0}^\infty e^{tx} (1-p)^x \\ &= p \sum_{x=0}^\infty [e^t(1-p)]^x = \frac{p}{1-e^t(1-p)} \,. \end{align*}\] The last equality utilizes the formula for the sum of an infinite geometric series: \(\sum_{i=0}^\infty x^i = (1-x)^{-1}\), when \(\vert x \vert < 1\). (The condition \(\vert e^t(1-p) \vert < 1\) holds whenever \(t < -\log(1-p)\); for \(p > 0\), this includes all \(t \leq 0\) and thus a neighborhood of \(t = 0\).)

The sum of \(s\) geometric random variables has the moment-generating function \[ m_Y(t) = \prod_{i=1}^s m_{X_i}(t) = \left[\frac{p}{1-e^t(1-p)}\right]^s \,. \] This is the mgf for a negative binomial distribution for \(s\) successes. In the same way that the sum of \(k\) Bernoulli random variables is a binomially distributed random variable, the sum of \(s\) geometric random variables is a negative binomially distributed random variable.
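This relationship can be checked numerically by convolving geometric pmfs. The sketch below compares the first \(M+1\) probability masses only; this comparison is exact because the mass of the sum at \(y\) depends only on masses at values \(\leq y\). The values of \(s\), \(p\), and \(M\) are arbitrary.

```r
s <- 3; p <- 0.4
M <- 50                            # compare the pmfs on 0, 1, ..., M
p.geom <- dgeom(0:M, prob = p)     # geometric pmf p(1-p)^x

p.sum <- p.geom
for (i in seq_len(s - 1)) {
  # truncated convolution: P(sum = y) = sum over j of P(partial sum = j) * P(X = y - j)
  p.sum <- sapply(0:M, function(y) sum(p.sum[1:(y + 1)] * p.geom[(y + 1):1]))
}

all.equal(p.sum, dnbinom(0:M, size = s, prob = p))
```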

3.4.2 The PMF for the Sample Mean

Let’s assume we are given \(n\) iid binomial random variables: \(X_1,X_2,\ldots,X_n \sim\) Binomial(\(k\),\(p\)). As we observe above, the distribution of the sum \(Y = \sum_{i=1}^n X_i\) is binomial with mean \(nkp\) and variance \(nkp(1-p)\).

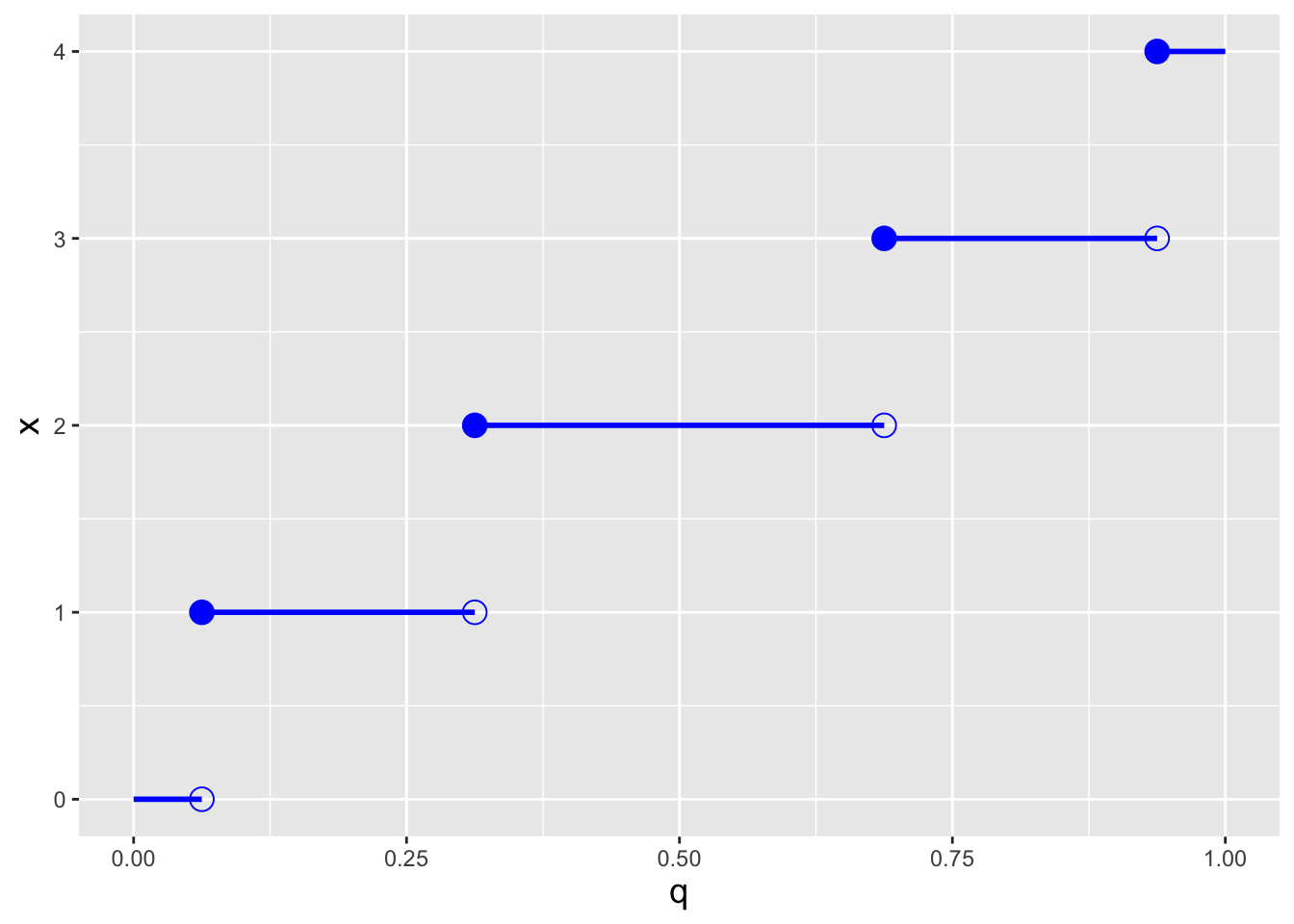

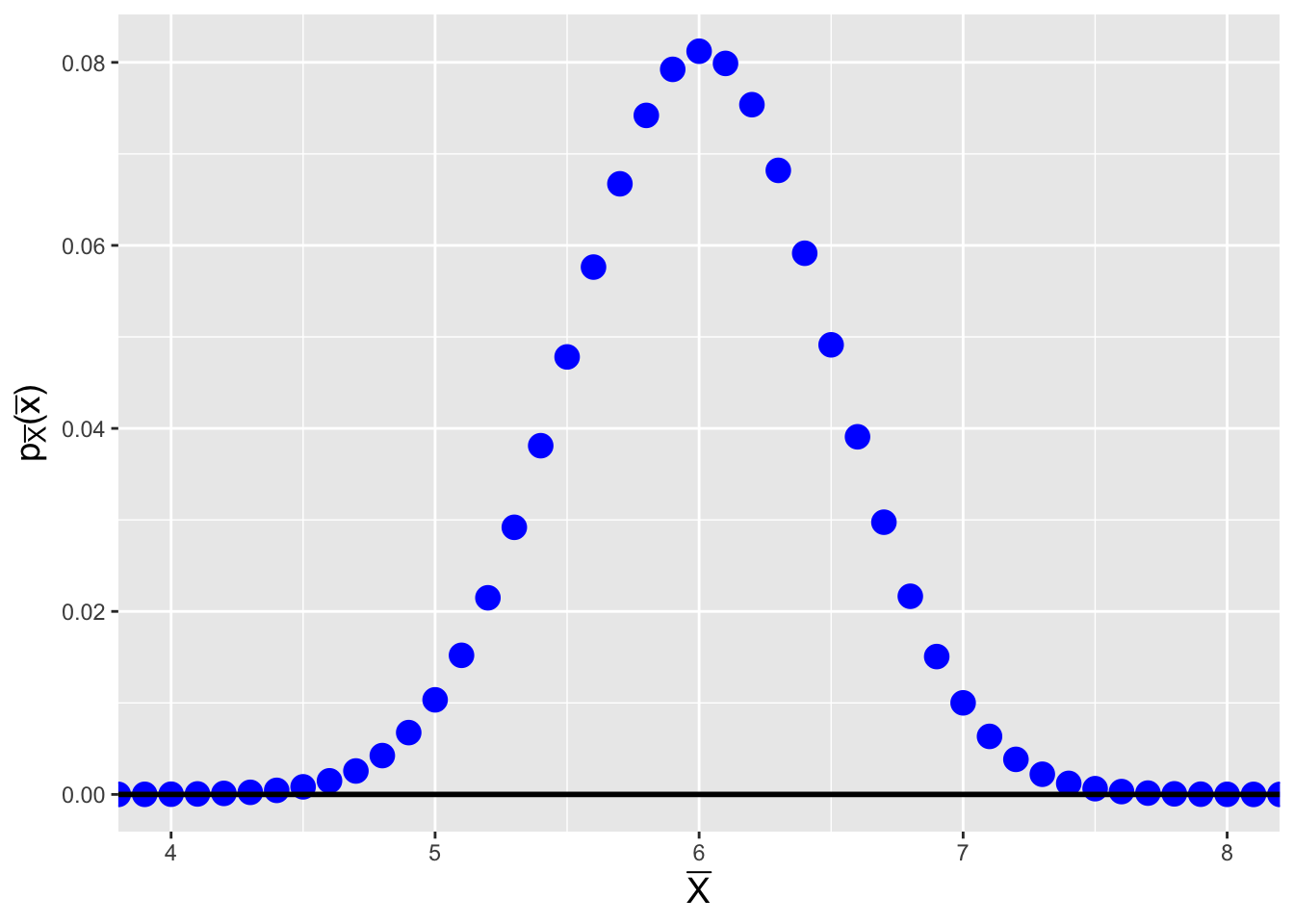

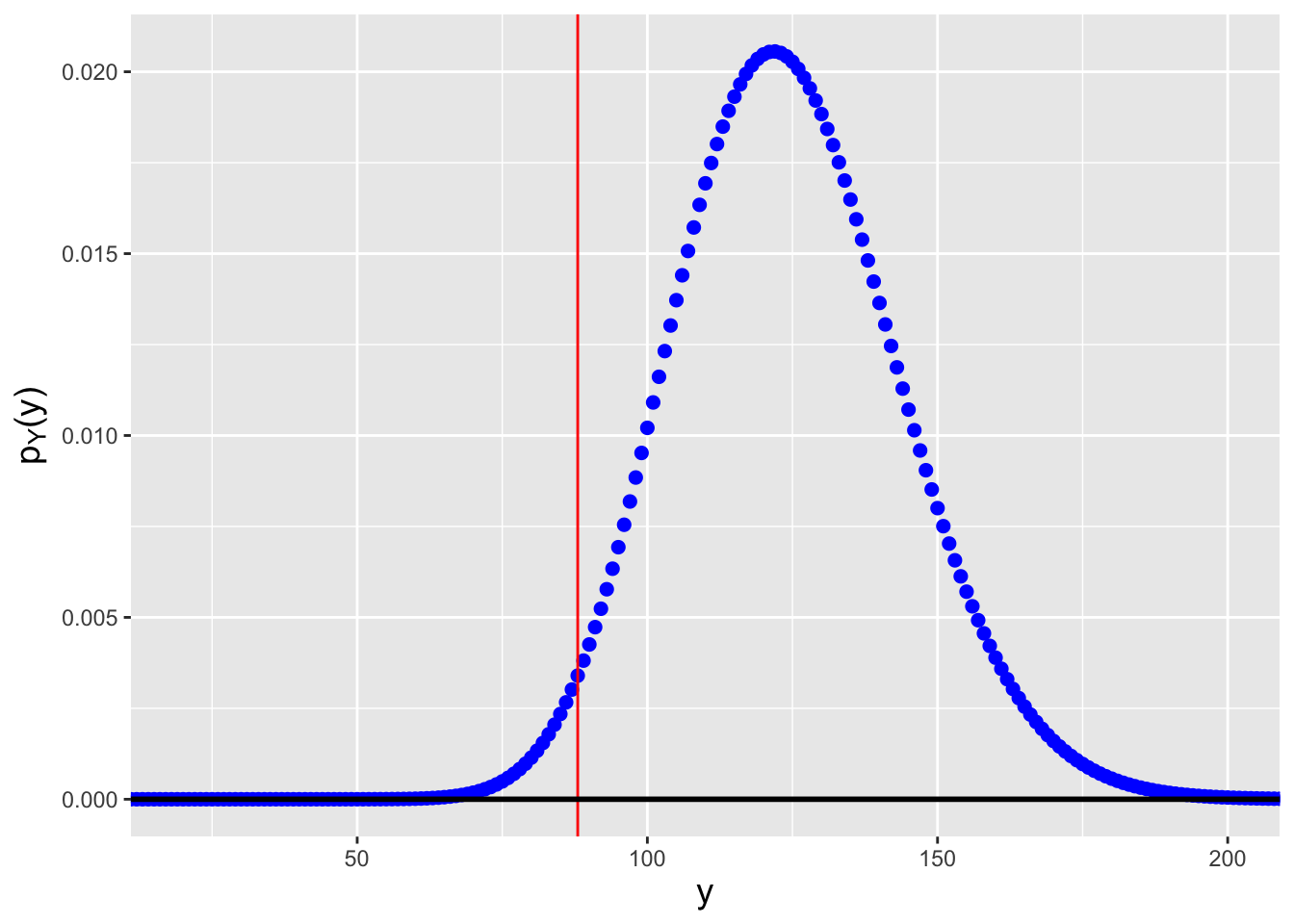

The sample mean is \(\bar{X} = Y/n\), and so \[ F_{\bar{X}}(\bar{x}) = P(\bar{X} \leq \bar{x}) = P(Y \leq n\bar{x}) = \sum_{y=0}^{n\bar{x}} p_Y(y) \,. \] (We note that \(n\bar{x}\) is integer-valued by definition; we do not need to round down here.) Because we are dealing with a pmf, we cannot simply take the derivative of \(F_{\bar{X}}(\bar{x})\) to find \(p_{\bar{X}}(\bar{x})\)…but what we can do is assess the jump in the cumulative distribution function at each step, because that is the pmf. In other words, we can compute \[ p_{\bar{X}}(\bar{x}) = P(Y \leq n\bar{x}) - P(Y \leq n\bar{x}-1) \] and store this as a numerically expressed pmf for \(\bar{X}\). See Figure 3.5.

But it turns out we can say more about this pmf, by looking at the problem in a different way. We know that \(Y \sim\) Binomial\((nk,p)\) and thus that \(Y \in \{0,1,\ldots,nk\}\). When we compute the quotient \(\bar{X} = Y/n\), all we are doing is redefining the domain of the pmf from being \(\{0,1,\ldots,nk\}\) to being \(\{0,1/n,2/n,\ldots,k\}\). We do not actually change the probability masses! So we can write \[ p_{\bar{X}}(\bar{x}) = \binom{nk}{n\bar{x}} p^{n\bar{x}} (1-p)^{nk-n\bar{x}} ~~ \bar{x} \in \{0,1/n,2/n,\ldots,k\} \,. \] This pmf has the functional form of a binomial pmf…but not the domain of a binomial pmf. For that reason, we cannot say that \(\bar{X}\) is binomially distributed. The pmf has a functional form, it has a domain, but it has no known “name” and thus no associated R functions that we can utilize when performing statistical inference. (This is why we will utilize the sum of the data instead: R functions for its distribution exist!)
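Both routes to the pmf of \(\bar{X}\) (the cdf jumps and the relabeled binomial masses) are easy to write down. The following is a minimal sketch using \(n = 10\), \(k = 10\), and \(p = 0.6\); the variable names are our own.

```r
n <- 10; k <- 10; p <- 0.6

y    <- 0:(n * k)                             # domain of the sum Y
xbar <- y / n                                 # domain of the sample mean
p.xbar <- dbinom(y, size = n * k, prob = p)   # same masses, relabeled domain

# equivalently, the jumps of the cdf of Y at each step
p.jump <- pbinom(y, size = n * k, prob = p) - pbinom(y - 1, size = n * k, prob = p)

all.equal(p.xbar, p.jump)
sum(xbar * p.xbar)                            # expected value; compare with k*p
sum(xbar^2 * p.xbar) - sum(xbar * p.xbar)^2   # variance; compare with k*p*(1-p)/n
```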

Figure 3.5: Probability mass function for the sample mean of \(n = 10\) iid binomial random variables, for \(k = 10\) and \(p = 0.6\).

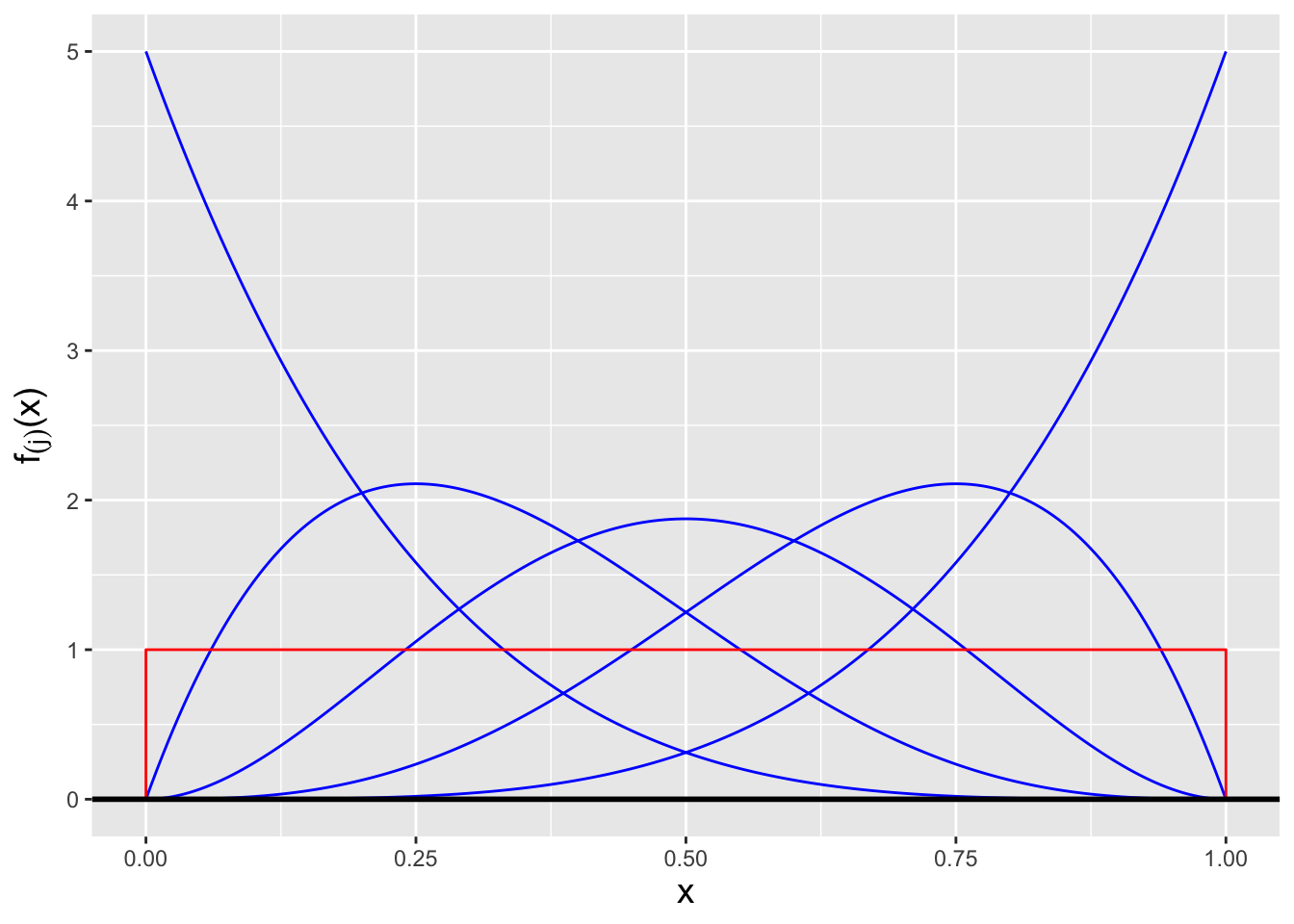

3.5 Order Statistics

Let’s suppose that we have sampled \(n\) iid random variables \(\{X_1,\ldots,X_n\}\) from some arbitrary distribution. Previously, we have summarized such data with the sample mean and the sample variance. However, there are other summary statistics, some of which are only calculable if we sort the data into ascending order: \(\{X_{(1)},\ldots,X_{(n)}\}\). These are dubbed order statistics and the \(j^{th}\) order statistic is the sample’s \(j^{th}\) smallest value (i.e., the smallest-valued datum in the sample is \(X_{(1)}\) and the largest-valued datum is \(X_{(n)}\)). Examples of statistics based on ordering include \[\begin{align*} \mbox{Range:}& ~~X_{(n)} - X_{(1)} \\ \mbox{Median:}& ~~X_{((n+1)/2)} ~ \mbox{if $n$ is odd} \\ & ~~(X_{(n/2)}+X_{(n/2+1)})/2 ~ \mbox{if $n$ is even} \,. \end{align*}\] The most important point to keep in mind is that the probability mass and density functions for order statistics differ from the pmfs and pdfs for their constituent iid data. For instance, if we sample \(n\) data from a \(\mathcal{N}(0,1)\) distribution, we would not expect the minimum value to be distributed the same way; if anything, the mean of the minimum should take on larger and larger negative values, and its variance should decrease, as \(n\) increases.

So: why are we discussing order statistics here, in the middle of a discussion of the binomial distribution? It is because we can derive, e.g., the pdf for an order statistic of a continuous distribution using the binomial pmf. (Note that order statistics exist for discretely valued data, but the probability mass functions for them are not easily derived and thus we will only consider order statistics for continuously valued data here.)

![Figure 3.6](figures/order.png)

Figure 3.6: If we have, e.g., a probability density function \(f_X(x)\) whose domain is \([a,b]\), and we view success as sampling a datum less than a given value \(x\), then when we sample \(n\) data, the number that have values \(\leq x\) is a binomial random variable with \(k=n\) and \(p = F_X(x)\).

See Figure 3.6. Without loss of generality, we can assume that \(f_X(x) > 0\) for \(x \in [a,b]\) and that we sample \(n\) data from this distribution. The number of data \(Y\) that have values \(\leq x\), for some arbitrarily chosen \(x\), is a binomial random variable: \[ Y \sim \mbox{Binomial}(n,p=F_X(x)) \,. \] What is the probability that the \(j^{th}\) ordered datum has a value \(\leq x\)? That’s equivalent to asking for the probability that \(Y \geq j\), i.e., did we see at least \(j\) successes in \(n\) trials? \[ F_{(j)}(x) = P(X_{(j)} \leq x) = P(Y \geq j) = \sum_{i=j}^n \binom{n}{i} [F_X(x)]^i [1 - F_X(x)]^{n-i} \,. \] \(F_{(j)}(x)\) is the cdf for the \(j^{th}\) ordered datum.

Recall: a continuous distribution’s pdf is the derivative of its cdf.

Leaving aside algebraic details, we can write down the pdf for \(X_{(j)}\): \[ f_{(j)}(x) = \frac{d}{dx}F_{(j)}(x) = \frac{n!}{(j-1)!(n-j)!} f_X(x) [F_X(x)]^{j-1} [1 - F_X(x)]^{n-j} \,, \] and write down simplified expressions for the pdfs for the minimum and maximum data values: \[ f_{(1)}(x) = n f_X(x) [1 - F_X(x)]^{n-1} ~~\mbox{and}~~ f_{(n)}(x) = n f_X(x) [F_X(x)]^{n-1} \,. \]
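Since the algebra is skipped, a numerical check may be reassuring: the derivative of the binomial-sum cdf should match the closed-form pdf. The sketch below assumes standard normal data purely for illustration; `F.j` and `f.j` are hypothetical helper names, and \(n\), \(j\), and the evaluation point are arbitrary.

```r
n <- 5; j <- 2

# cdf of the j-th order statistic: P(Y >= j) with Y ~ Binomial(n, F_X(x))
F.j <- function(x, F.X) 1 - pbinom(j - 1, size = n, prob = F.X(x))

# pdf of the j-th order statistic from the closed-form expression
f.j <- function(x, f.X, F.X) {
  factorial(n) / (factorial(j - 1) * factorial(n - j)) *
    f.X(x) * F.X(x)^(j - 1) * (1 - F.X(x))^(n - j)
}

# central-difference derivative of the cdf at x0 versus the closed-form pdf
x0 <- 0.3; h <- 1e-5
num.deriv <- (F.j(x0 + h, pnorm) - F.j(x0 - h, pnorm)) / (2 * h)
c(num.deriv, f.j(x0, dnorm, pnorm))
```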

3.5.1 Distribution of the Minimum Value Sampled from an Exponential Distribution

The probability density function for an exponential random variable is \[ f_X(x) = \frac{1}{\theta} \exp\left(-\frac{x}{\theta}\right) \,, \] for \(x \geq 0\) and \(\theta > 0\), and the expected value of \(X\) is \(E[X] = \theta\). What is the pdf for the smallest value among \(n\) iid data sampled from an exponential distribution? What is the expected value for the smallest value?

First, if we do not immediately recall the cumulative distribution function \(F_X(x)\), we can easily derive it: \[ F_X(x) = \int_0^x \frac{1}{\theta} e^{-y/\theta} dy = 1 - e^{-x/\theta} \,. \] We plug \(F_X(x)\) into the expression of the pdf of the minimum datum given above: \[\begin{align*} f_{(1)}(x) &= n \frac{1}{\theta} e^{-x/\theta} \left[ 1 - (1-e^{-x/\theta}) \right]^{n-1} \\ &= n \frac{1}{\theta} e^{-x/\theta} e^{-(n-1)x/\theta} \\ &= \frac{n}{\theta} e^{-nx/\theta} \,. \end{align*}\] \(X_{(1)}\) is thus an exponentially distributed random variable with parameter \(\theta/n\) and expected value \(\theta/n\). We can verify the expected value directly: \[ E[X_{(1)}] = \int_0^\infty x \frac{n}{\theta} e^{-nx/\theta} dx \,. \] We recognize this as almost having the form of a gamma-function integral: \[ \Gamma(u) = \int_0^\infty x^{u-1} e^{-x} dx \,. \] We effect a variable transformation \(y = nx/\theta\); for this transformation, \(dy = (n/\theta)dx\), and if \(x = 0\) or \(\infty\), \(y = 0\) or \(\infty\) (meaning the integral bounds are unchanged). Our new integral is \[ E[X_{(1)}] = \int_0^\infty \frac{\theta y}{n} \frac{n}{\theta} e^{-y} \frac{\theta}{n} dy = \frac{\theta}{n} \int_0^\infty y e^{-y} dy = \frac{\theta}{n} \Gamma(2) = \frac{\theta}{n} 1! = \frac{\theta}{n} = \frac{E[X]}{n} \,. \]
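The result can be checked numerically. The sketch below assumes \(\theta = 2\) and \(n = 5\) (arbitrary values) and uses the fact that R's `dexp()` is parameterized by the rate, i.e., the reciprocal of the mean.

```r
theta <- 2; n <- 5

# pdf of the minimum, from the order-statistic result
f.min <- function(x) (n / theta) * exp(-n * x / theta)

# matches R's exponential pdf with rate n/theta (mean theta/n)
all.equal(f.min(0.7), dexp(0.7, rate = n / theta))

# expected value by numerical integration; compare with theta/n
E.min <- integrate(function(x) x * f.min(x), lower = 0, upper = Inf)$value
c(E.min, theta / n)
```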

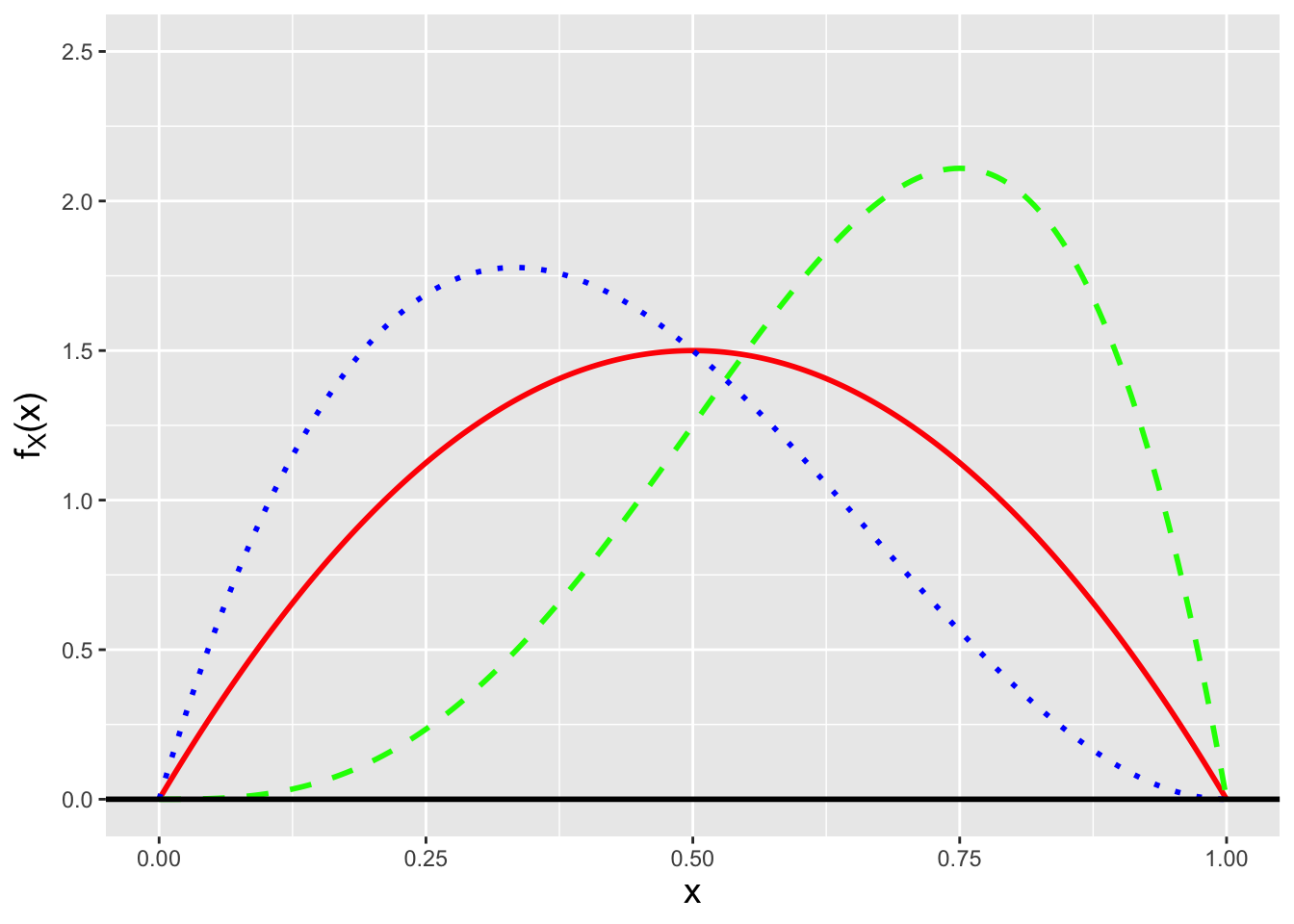
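As a quick sanity check on the result \(E[X_{(1)}] = \theta/n\), we can simulate many datasets and record the minimum of each. This is a minimal sketch: the values of `theta`, `n`, and `num.sim` are arbitrary choices made for illustration and do not come from the text.

```r
set.seed(101)
theta   <- 2        # assumed value of the exponential mean
n       <- 5        # assumed sample size
num.sim <- 100000   # assumed number of simulated datasets

# each row of X is one dataset of n iid exponential draws
X     <- matrix(rexp(num.sim*n, rate=1/theta), nrow=num.sim)
X.min <- apply(X, 1, min)

mean(X.min)   # should lie close to theta/n = 0.4
var(X.min)    # should lie close to (theta/n)^2 = 0.16
```

Because the minimum is itself exponentially distributed with parameter \(\theta/n\), both its sample mean and its sample variance should agree with the exponential-distribution values, up to simulation noise.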

3.5.2 Distribution of the Median Value Sampled from a Uniform(0,1) Distribution

The probability density function for a Uniform(0,1) distribution is \[ f_X(x) = 1 \] for \(x \in [0,1]\). The cdf for this distribution is thus \[ F_X(x) = \int_0^x 1 dy = x \,. \] Let’s assume that we sample \(n\) iid data from this distribution, where \(n\) is an odd number. The index of the median value is thus \((n+1)/2\), and if we plug into the general expression for the pdf of the \(j^{th}\) ordered datum, we find that \[\begin{align*} f_{((n+1)/2)}(x) &= \frac{n!}{\left(\frac{n+1}{2}-1\right)!\left(n - \frac{n+1}{2}\right)!} \cdot 1 \cdot x^{\left(\frac{n+1}{2}\right)-1} \cdot (1-x)^{n - \left(\frac{n+1}{2}\right)} \\ &= \frac{n!}{\left[\left(\frac{n-1}{2}\right)!\right]^2} x^{\left(\frac{n-1}{2}\right)} (1-x)^{\left(\frac{n-1}{2}\right)} \,. \end{align*}\] As we will see later, this is a beta distribution with parameters \(\alpha = \beta = (n+1)/2\). The median value has expected value 1/2 and variance \(1/[4(n+2)]\), which shrinks as \(n\) increases.
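We can check the identification with the beta distribution numerically by evaluating the general order-statistic pdf, with \(f_X(x) = 1\) and \(F_X(x) = x\), and comparing it to R's `dbeta()`. This is a minimal sketch: the sample size `n = 7` and the grid `x` are arbitrary illustrative choices.

```r
n <- 7                          # assumed (odd) sample size
j <- (n+1)/2                    # index of the median
x <- seq(0.05, 0.95, by=0.05)   # grid of evaluation points

# general order-statistic pdf with f_X(x) = 1 and F_X(x) = x
f.median <- factorial(n)/(factorial(j-1)*factorial(n-j)) * x^(j-1) * (1-x)^(n-j)

# beta pdf with alpha = beta = (n+1)/2
f.beta <- dbeta(x, shape1=j, shape2=j)

max(abs(f.median - f.beta))     # difference at the level of round-off error
```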

3.6 Point Estimation

In the first two chapters, we introduce a number of concepts related to point estimation, the act of using statistics to make inferences about a population parameter \(\theta\). We…

- assess point estimators using the metrics of bias, variance, mean-squared error, and consistency;

- utilize the Fisher information metric to determine the lower bound on the variance for unbiased estimators (the Cramer-Rao Lower Bound, or CRLB); and

- define estimators via the maximum likelihood algorithm, which generates estimators that are at least asymptotically unbiased and at least asymptotically reach the CRLB, and which converge in distribution to normal random variables.

We will review these concepts in the context of estimating population quantities for binomial distributions below, in the body of the text and in examples. For now…

Recall: the bias of an estimator is the difference between the average value of the estimates it generates and the true parameter value. If \(E[\hat{\theta}-\theta] = 0\), then the estimator \(\hat{\theta}\) is said to be unbiased.

Recall: the value of \(\theta\) that maximizes the likelihood function is the maximum likelihood estimate, or MLE, for \(\theta\). The maximum is found by taking the (partial) derivative of the (log-)likelihood function with respect to \(\theta\), setting the result to zero, and solving for \(\theta\). That solution is the maximum likelihood estimate \(\hat{\theta}_{MLE}\). Also recall the invariance property of the MLE: if \(\hat{\theta}_{MLE}\) is the MLE for \(\theta\), then \(g(\hat{\theta}_{MLE})\) is the MLE for \(g(\theta)\).

Here we will introduce another means by which to define an estimator. The minimum variance unbiased estimator (or MVUE) is the one that has the smallest variance among all unbiased estimators of \(\theta\). The reader’s first thought might be “well, why didn’t we use this estimator in the first place…after all, the MLE is not guaranteed to yield an unbiased estimator, so why have we put off discussing the MVUE?” The primary reasons are that MVUEs are sometimes not definable (i.e., we can reach insurmountable roadblocks when trying to derive them), and unlike MLEs, they do not exhibit the invariance property. (For instance, if \(\hat{\theta}_{MLE} = \bar{X}\), then \(\hat{\theta^2}_{MLE} = \bar{X}^2\), but if \(\hat{\theta}_{MVUE} = \bar{X}\), it is not necessarily the case that \(\hat{\theta^2}_{MVUE} = \bar{X}^2\).) However, we should always at least try to define the MVUE, because if we can, it will at least equal the performance of, if not do better than, the MLE, in terms of bias and/or variance.

There are two steps to carry out when deriving the MVUE:

- determining a sufficient statistic for \(\theta\); and

- correcting any bias that is observed when we utilize that sufficient statistic as our initial estimator.

A sufficient statistic for a parameter \(\theta\) captures all information about \(\theta\) contained in the sample. The sufficiency principle holds that if, e.g., \(Y\) is a sufficient statistic for \(\theta\), and we collect two datasets \(\mathbf{U}\) and \(\mathbf{V}\) such that \(Y(\mathbf{U}) = Y(\mathbf{V})\), then the inferences we make about \(\theta\) given that we observe \(\mathbf{U}\) will be exactly the same as those we would make if we were to observe \(\mathbf{V}\) instead. Using any statistic beyond a sufficient statistic will not lead to improved inferences about \(\theta\). This means that, for instance, combining \(\bar{X}\) (a sufficient statistic for the normal mean \(\mu\) when the variance is known) with, say, the sample median will not reduce the length of confidence intervals for \(\mu\) or change the power of hypothesis tests about \(\mu\), relative to what we would derive using \(\bar{X}\) alone. Note that utilizing the sufficiency principle is but one way by which statisticians can attempt data reduction prior to inference; two others, which are beyond the scope of this book, are the likelihood and equivariance principles. For a deeper treatment of sufficiency and of data reduction than we provide here, the interested reader should consult Chapter 6 of Casella and Berger (2002).

A statistic \(Y(\mathbf{X})\) is a sufficient statistic for \(\theta\) if the ratio \[\begin{align*} \frac{f_X(\mathbf{x} \vert \theta)}{f_Y(y \vert \theta)} \mathrel{{iid}\rightarrow} \frac{\prod_{i=1}^n f_X(x_i \vert \theta)}{f_Y(y \vert \theta)} \,, \end{align*}\] where \(f_Y(y \vert \theta)\) is the probability density function of the sampling distribution for \(Y\), is constant as a function of \(\theta\). We note that by this definition, \(\mathbf{X}\) itself comprises a sufficient statistic for \(\theta\), as does the full set of order statistics \(\{X_{(1)},\ldots,X_{(n)}\}\). The relevant questions are: can we define a sufficient statistic that actually reduces the data? and if so, how can we identify it?

Let’s answer the second question first. The simplest way by which to identify a sufficient statistic is to write down the likelihood function and then to factorize it into two separate functions, one of which depends only on the observed data and the other of which depends on both the observed data and the parameter of interest: \[ \mathcal{L}(\theta \vert \mathbf{x}) = g(\mathbf{x},\theta) \cdot h(\mathbf{x}) \,. \] This is the so-called factorization criterion. In the expression \(g(\mathbf{x},\theta)\), the data will appear within, e.g., a summation (e.g., \(\sum_{i=1}^n x_i\)) or a product (e.g., \(\prod_{i=1}^n x_i\)), and we would identify that summation or product as a sufficient statistic for \(\theta\). For instance, for the binomial distribution, the factorized likelihood (given \(n\) iid data) is \[ \mathcal{L}(p \vert \mathbf{x}) = \prod_{i=1}^n \binom{k}{x_i} p^{x_i} (1-p)^{k-x_i} = \underbrace{\left[ \prod_{i=1}^n \binom{k}{x_i} \right]}_{h(\mathbf{x})} \underbrace{p^{\sum_{i=1}^n x_i} (1-p)^{nk-\sum_{i=1}^n x_i}}_{g(\sum_{i=1}^n x_i,p)} \,. \] By inspecting the function \(g(\mathbf{x},p)\), we immediately determine that a sufficient statistic for \(p\) is \(Y = \sum_{i=1}^n X_i\). We say “a” sufficient statistic because sufficient statistics are not unique: any one-to-one function of a sufficient statistic is also a sufficient statistic. For instance, if \(Y = \sum_{i=1}^n X_i\) is a sufficient statistic for \(\theta\), so is \(Y = \bar{X}\), etc.
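One way to see what sufficiency of \(Y = \sum_{i=1}^n X_i\) means in practice is to compare the likelihoods of two datasets that share the same sum. This is a minimal sketch: the datasets `x.u` and `x.v`, the number of trials `k`, and the helper function `lik()` are all hypothetical choices made for illustration.

```r
k   <- 5                          # assumed number of trials per datum
x.u <- c(1, 2, 3)                 # hypothetical dataset U, with sum 6
x.v <- c(2, 2, 2)                 # hypothetical dataset V, with sum 6
p   <- seq(0.05, 0.95, by=0.05)   # grid of success probabilities

# binomial likelihood evaluated at each value of p (hypothetical helper)
lik <- function(p, x, k)
{
  sapply(p, function(pp) prod(dbinom(x, size=k, prob=pp)))
}

# the g() factors cancel, leaving only the ratio of the h() factors
ratio <- lik(p, x.u, k)/lik(p, x.v, k)
range(ratio)                      # the same value at every p
```

The ratio does not vary with \(p\): the two likelihoods differ only through the products of binomial coefficients, \(h(\mathbf{x})\), so the two datasets carry the same information about \(p\).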

Now we return to the first question above: can we define a sufficient statistic that actually reduces the data? The answer is “not always.” In fact, across all possible distributions, it is relatively rare that we can do this. The Pitman-Koopman-Darmois theorem holds that, among families of distributions that do not have domain-specifying parameters, only the exponential family of distributions have sufficient statistics whose dimension does not increase as the sample size increases. (This theorem motivates, in large part, our introduction of the exponential family in Chapter 2.) Recall that the exponential family of distributions comprises a set of distributions whose probability mass or density functions can be written as \[\begin{align*} h(x) \exp\left( \eta(\theta) T(x) - A(\theta) \right) \,. \end{align*}\] (Here we assume that the distribution has just one free parameter \(\theta\).) In this expression, \(T(x)\) is the sufficient statistic; as seen in a previous example, for the binomial distribution \(T(X) = X\). If we collect \(n\) iid data, then the sufficient statistic is \(T(\mathbf{X}) = \sum_{i=1}^n X_i\), and as we can see it is still a single number\(-\)i.e., it has a dimension of one\(-\)regardless of the value of \(n\). Now, restricting ourselves to exponential family distributions in inferential situations would appear to limit the practical utility of the sufficiency principle…except it is the case that many (if not most) of the distributions that we utilize in inference are indeed exponential-family distributions, including all the ones that we focus on in this book.

(We note here for completeness that once we identify a sufficient statistic, we are technically required to demonstrate that it is both minimally sufficient and complete, as it needs to be both so that we can use it, e.g., to determine the minimum variance unbiased estimator. It suffices to say here that the sufficient statistics that we identify for exponential family distributions via likelihood factorization are minimally sufficient and complete. See Chapter 7 for more details on minimal sufficiency and completeness.)

If \(Y\) is a sufficient statistic for \(\theta\), and there is a function \(h(Y)\) that is an unbiased estimator for \(\theta\) and that depends on the data only through \(Y\), then \(h(Y)\) is the MVUE for \(\theta\). (Recall from above that one-to-one functions of sufficient statistics are themselves sufficient statistics, hence \(h(Y)\) is sufficient for \(\theta\).) Here, given \(Y = \sum_{i=1}^n X_i\), we need to find a function \(h(\cdot)\) such that \(E[h(Y)] = p\). Earlier in this chapter, we determined that the distribution for the sum of iid binomial random variables is Binomial(\(nk\),\(p\)), and thus we know that this distribution has expected value \(nkp\). Thus \[ E\left[Y\right] = nkp ~\implies~ E\left[\frac{Y}{nk}\right] = p ~\implies~ h(Y) = \frac{Y}{nk} = \frac{\bar{X}}{k} ~\mbox{is the MVUE for}~p \,. \] The variance of \(\hat{p}\) is \[ V[\hat{p}] = V\left[\frac{\bar{X}}{k}\right] = \frac{1}{k^2}V[\bar{X}] = \frac{1}{k^2} \frac{V[X]}{n} = \frac{1}{k^2}\frac{kp(1-p)}{n} = \frac{p(1-p)}{nk} \,. \] We know that this variance abides by the restriction \[ V[\hat{p}] \geq \frac{1}{nI(p)} = -\frac{1}{nE\left[\frac{d^2}{dp^2} \log p_X(X \vert p) \right]} \,. \] But is it equivalent to the lower bound for unbiased estimators, the CRLB? (Note that in particular situations, the MVUE may have a variance larger than the CRLB; when this is the case, unbiased estimators that achieve the CRLB simply do not exist.) For the binomial distribution, \[\begin{align*} p_{X}(x) &= \binom{k}{x} p^{x} (1-p)^{k-x} \\ \log p_{X}(x) &= \log \binom{k}{x} + x \log p + (k-x) \log (1-p) \\ \frac{d}{dp} \log p_{X}(x) &= 0 + \frac{x}{p} - \frac{k-x}{(1-p)} \\ \frac{d^2}{dp^2} \log p_{X}(x) &= -\frac{x}{p^2} - \frac{k-x}{(1-p)^2} \\ E\left[\frac{d^2}{dp^2} \log p_{X}(X)\right] &= -\frac{1}{p^2}E[X] - \frac{1}{(1-p)^2}E[k-X] \\ &= -\frac{kp}{p^2}-\frac{k-kp}{(1-p)^2} \\ &= -\frac{k}{p}-\frac{k}{1-p} = -\frac{k}{p(1-p)} \,. 
\end{align*}\] The lower bound on the variance is thus \(p(1-p)/(nk)\), and so the MVUE does achieve the CRLB. We cannot define a better unbiased estimator for \(p\) than \(\bar{X}/k\).
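The Fisher information calculation above can be checked numerically by summing the second derivative of the log-pmf over the binomial domain, weighted by the pmf. This is a minimal sketch: the values of `n`, `k`, and `p` are assumptions chosen for illustration.

```r
n <- 12    # assumed sample size
k <- 5     # assumed number of trials
p <- 0.4   # assumed success probability

x  <- 0:k
d2 <- -x/p^2 - (k-x)/(1-p)^2                  # second derivative of the log-pmf

I.p    <- -sum(dbinom(x, size=k, prob=p)*d2)  # Fisher information for one datum
crlb   <- 1/(n*I.p)                           # Cramer-Rao lower bound
v.mvue <- p*(1-p)/(n*k)                       # variance of the MVUE derived above

c(crlb, v.mvue)                               # the two values agree
```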

3.6.1 The MLE for the Binomial Success Probability

Recall: the value of \(\theta\) that maximizes the likelihood function is the maximum likelihood estimate, or MLE, for \(\theta\). The maximum is found by taking the (partial) derivative of the (log-)likelihood function with respect to \(\theta\), setting the result to zero, and solving for \(\theta\). That solution is the maximum likelihood estimate \(\hat{\theta}_{MLE}\).

Above, we determined that the likelihood function for \(n\) iid binomial random variables \(\{X_1,\ldots,X_n\}\) is \[ \mathcal{L}(p \vert \mathbf{x}) = \left[\prod_{i=1}^n \binom{k}{x_i} \right] p^{\sum_{i=1}^n x_i} (1-p)^{nk-\sum_{i=1}^n x_i} \,. \] Recall that the value \(\hat{p}_{MLE}\) that maximizes \(\mathcal{L}(p \vert x)\) also maximizes \(\ell(p \vert x) = \log \mathcal{L}(p \vert x)\), which is considerably easier to work with: \[\begin{align*} \ell(p \vert \mathbf{x}) &= \left(\sum_{i=1}^n x_i\right) \log p + \left(nk - \sum_{i=1}^n x_i\right) \log (1-p) \\ \frac{d}{dp} \ell(p \vert \mathbf{x}) &= \frac{1}{p} \sum_{i=1}^n x_i - \frac{1}{1-p} \left(nk - \sum_{i=1}^n x_i\right) = 0 \,. \end{align*}\] (Here, we drop the binomial coefficient, which does not depend on \(p\) and thus differentiates to zero.) After rearranging terms, we find that \[ p = \frac{1}{nk}\sum_{i=1}^n x_i ~\implies~ \hat{p}_{MLE} = \frac{\bar{X}}{k} \,. \] The MLE matches the MVUE, thus we know that the MLE is unbiased and we know that it achieves the CRLB.
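When a closed-form solution is not available, we would maximize the log-likelihood numerically instead. As a hedged illustration, the sketch below applies R's `optimize()` to simulated binomial data and compares the result with \(\bar{X}/k\). The settings (`n`, `k`, `p`, the seed, and the search interval) and the helper `log.lik()` are assumptions made for this example.

```r
set.seed(101)
n <- 12    # assumed sample size
k <- 5     # assumed number of trials
p <- 0.4   # assumed true success probability
X <- rbinom(n, size=k, prob=p)

# binomial log-likelihood as a function of p (hypothetical helper)
log.lik <- function(p, x, k)
{
  sum(dbinom(x, size=k, prob=p, log=TRUE))
}

# search away from the endpoints 0 and 1, where the log-likelihood can be -Inf
p.hat.num <- optimize(log.lik, interval=c(0.001, 0.999), x=X, k=k, maximum=TRUE)$maximum
p.hat.mle <- mean(X)/k

c(p.hat.num, p.hat.mle)   # agree to within the tolerance of optimize()
```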

A useful property of MLEs is the invariance property, whereby the MLE for a function of \(\theta\) is given by applying the same function to the MLE itself. Thus

- the MLE for the population mean \(E[X] = \mu = kp\) is \(\hat{\mu}_{MLE} = \bar{X}\); and

- the MLE for the population variance \(V[X] = \sigma^2 = kp(1-p)\) is \(\widehat{\sigma^2}_{MLE} = \bar{X}(1-\bar{X}/k)\).

Last, note that asymptotically, \(\hat{p}_{MLE}\) converges in distribution to a normal random variable: \[ \hat{p}_{MLE} \stackrel{d}{\rightarrow} Y \sim \mathcal{N}\left(p,\frac{1}{nI(p)} = \frac{p(1-p)}{nk}\right) \,. \]

3.6.2 Sufficient Statistics for the Normal Distribution

If we have \(n\) iid data drawn from a normal distribution with unknown mean \(\mu\) and unknown variance \(\sigma^2\), then the factorized likelihood is \[ \mathcal{L}(\mu,\sigma^2 \vert \mathbf{x}) = \underbrace{(2 \pi \sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^n x_i^2\right)\exp\left(\frac{\mu}{\sigma^2}\sum_{i=1}^n x_i\right)\exp\left(-\frac{n\mu^2}{2\sigma^2}\right)}_{g(\sum x_i^2, \sum x_i,\mu,\sigma)} \cdot \underbrace{1}_{h(\mathbf{x})} \,. \] Here, we identify \(\sum x_i^2\) and \(\sum x_i\) as joint sufficient statistics: we need two pieces of information to jointly estimate \(\mu\) and \(\sigma^2\). (To be clear: it is not necessarily the case that one of the parameters matches up to one of the statistics…rather, the two statistics are jointly sufficient for estimation.) We thus cannot proceed further to define an MVUE for \(\mu\) or for \(\sigma^2\), without knowing the joint bivariate probability density function for \(Y_1 = \sum_{i=1}^n X_i^2\) and \(Y_2 = \sum_{i=1}^n X_i\).

(Note that we can proceed if we happen to know either \(\mu\) or \(\sigma^2\); if one of these values is fixed, then there will only be one sufficient statistic and we can determine the MVUE for the other, freely varying parameter.)

3.6.3 The Sufficiency Principle: Examples of When We Cannot Reduce Data

As stated above, it is often impossible to reduce data to, e.g., a single-number summary. The following examples, utilizing distributions that are not exponential-family distributions, illustrate this.

- A Laplace distribution with scale parameter 1 has the probability density function \[\begin{align*} f_X(x \vert \theta) &= \frac12 e^{-\vert x - \theta \vert} \,, \end{align*}\] where \(x \in (-\infty,\infty)\) and \(\theta \in (-\infty,\infty)\). If we observe \(n\) iid data, then likelihood factorization yields \[\begin{align*} \mathcal{L}(\theta \vert \mathbf{x}) = \frac{1}{2^n} e^{-\sum_{i=1}^n \vert x_i - \theta \vert} \,. \end{align*}\] \(Y = \sum_{i=1}^n \vert X_i - \theta \vert\) is not a sufficient statistic as it contains the (unknown) parameter: a sufficient statistic for \(\theta\) cannot contain \(\theta\) itself! We cannot isolate a function of \(\mathbf{X}\) alone, and thus no data reduction is possible.

- A Cauchy distribution with scale parameter 1 has the probability density function \[\begin{align*} f_X(x \vert \theta) = \frac{1}{\pi \left[1 + (x-\theta)^2\right]} \,, \end{align*}\] where \(x \in (-\infty,\infty)\) and \(\theta \in (-\infty,\infty)\). If we observe \(n\) iid data, then likelihood factorization yields \[\begin{align*} \mathcal{L}(\theta \vert \mathbf{x}) = \frac{1}{\pi^n} \frac{1}{\prod_{i=1}^n \left[1 + (x_i-\theta)^2\right]} \,. \end{align*}\] Again, we cannot isolate a function of the \(X_i\)’s alone: no data reduction is possible.

3.6.4 The MVUE for the Exponential Mean

The exponential distribution is \[ f_X(x) = \frac{1}{\theta} \exp\left(-\frac{x}{\theta}\right) \,, \] where \(x \geq 0\) and \(\theta > 0\), and where \(E[X] = \theta\) and \(V[X] = \theta^2\). Let’s assume that we have \(n\) iid data drawn from this distribution. Can we define the MVUE for \(\theta\)? For \(\theta^2\)?

The likelihood function is \[ \mathcal{L}(\theta \vert \mathbf{x}) = \prod_{i=1}^n f_X(x_i \vert \theta) = \frac{1}{\theta^n}\exp\left(-\frac{1}{\theta}\sum_{i=1}^n x_i \right) = h(\mathbf{x}) \cdot g(\theta,\mathbf{x}) \,. \] Here, there are no terms that are functions of only the data, so \(h(\mathbf{x}) = 1\) and a sufficient statistic is \(Y = \sum_{i=1}^n X_i\). We compute the expected value of \(Y\): \[ E[Y] = E\left[\sum_{i=1}^n X_i\right] = \sum_{i=1}^n E[X_i] = \sum_{i=1}^n \theta = n\theta \,. \] The expected value of \(Y\) is not \(\theta\), so \(Y\) is not unbiased…but we can see immediately that \(E[Y/n] = \theta\), so that \(Y/n\) is unbiased. Thus the MVUE for \(\theta\) is \(\hat{\theta}_{MVUE} = Y/n = \bar{X}\).

Note that the MVUE does not possess the invariance property…it is not necessarily the case that \(\hat{\theta^2}_{MVUE} = \bar{X}^2\).

Let’s propose a function of \(Y\) and see if we can use that to define \(\hat{\theta^2}_{MVUE}\): \(h(Y) = Y^2/n^2 = \bar{X}^2\). (To be clear, we are simply proposing a function and seeing if it helps us define what we are looking for. It might not. If not, we can try again with another function of \(Y\).) Utilizing what we know about the sample mean, we can write down that \[ E[\bar{X}^2] = V[\bar{X}] + (E[\bar{X}])^2 = \frac{V[X]}{n} + (E[X])^2 = \frac{\theta^2}{n}+\theta^2 = \theta^2\left(\frac{1}{n} + 1\right) \,. \] So \(\bar{X}^2\) itself is not an unbiased estimator of \(\theta^2\)…but we can see that \(\bar{X}^2/(1/n+1)\) is. Hence \[ \hat{\theta^2}_{MVUE} = \frac{\bar{X}^2}{\left(\frac{1}{n}+1\right)} \,. \]
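A short simulation can illustrate both the bias of \(\bar{X}^2\) and the effect of the correction factor. This is a minimal sketch: the values of `theta`, `n`, and `num.sim` are arbitrary choices made for illustration.

```r
set.seed(101)
theta   <- 2        # assumed exponential mean, so theta^2 = 4
n       <- 10       # assumed sample size
num.sim <- 100000   # assumed number of simulated datasets

# each row of X is one dataset of n iid exponential draws
X     <- matrix(rexp(num.sim*n, rate=1/theta), nrow=num.sim)
X.bar <- rowMeans(X)

mean.naive    <- mean(X.bar^2)           # close to theta^2 (1/n + 1) = 4.4
mean.debiased <- mean(X.bar^2/(1/n+1))   # close to theta^2 = 4
c(mean.naive, mean.debiased)
```

The first average overshoots \(\theta^2\) by roughly the factor \(1/n+1\), while the second is consistent with an unbiased estimator, up to simulation noise.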

3.6.5 The MVUE for the Geometric Distribution

Recall that the geometric distribution is a negative binomial distribution with \(s = 1\): \[\begin{align*} p_X(x) = \binom{x + s - 1}{x} p^s (1-p)^x ~ \rightarrow ~ p(1-p)^x \,, \end{align*}\] where \(p \in (0,1]\) and where \(E[X] = (1-p)/p\) and \(V[X] = (1-p)/p^2\). Let’s assume that we sample \(n\) iid data from this distribution. Can we define the MVUE for \(p\)? For \(1/p\)?

The likelihood function is \[\begin{align*} \mathcal{L}(p \vert \mathbf{x}) = \prod_{i=1}^n p_X(x_i \vert p) = p^n (1-p)^{\sum_{i=1}^n x_i} = 1 \cdot g(p,\mathbf{x}) \,. \end{align*}\] We identify a sufficient statistic as \(Y = \sum_{i=1}^n X_i\); the expected value of \(Y\) is \[\begin{align*} E[Y] = E\left[\sum_{i=1}^n X_i\right] = \sum_{i=1}^n E[X_i] = \sum_{i=1}^n \frac{1-p}{p} = n\left(\frac{1}{p}-1\right) \,. \end{align*}\] We cannot “debias” this expression to find the MVUE for \(p\): \[\begin{align*} E\left[\frac{Y}{n}+1\right] = \frac{1}{p} \,. \end{align*}\] Specifically, \[\begin{align*} E\left[\frac{1}{Y/n+1}\right] \neq 1/E\left[\frac{Y}{n}+1\right] = p \,. \end{align*}\] However, we did determine the MVUE for \(1/p\): \(Y/n+1 = \bar{X}+1\). But as there is no invariance property for the MVUE, we cannot use this expression to write down an MVUE for \(p\).
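To see the failure of invariance concretely, we can compute the two expected values by direct summation, using the fact that the sum of \(n\) iid geometric random variables is negative binomially distributed with \(n\) successes. This is a minimal sketch: the values of `n` and `p` are assumptions, and the infinite sum is truncated at an assumed upper limit beyond which the probability mass is negligible.

```r
n <- 5      # assumed sample size
p <- 0.3    # assumed success probability

y   <- 0:5000                       # truncated domain of Y (assumed to suffice)
pmf <- dnbinom(y, size=n, prob=p)   # pmf of Y, the sum of n geometric variables

E.inv.p <- sum(pmf*(y/n + 1))       # E[Y/n + 1], which equals 1/p
E.recip <- sum(pmf/(y/n + 1))       # E[1/(Y/n + 1)], which does not equal p

c(E.inv.p, 1/p)
c(E.recip, p)
```

The first pair of values matches, confirming that \(\bar{X}+1\) is unbiased for \(1/p\). The second pair does not: the reciprocal \(1/(\bar{X}+1)\) overestimates \(p\) on average.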

3.7 Confidence Intervals

Recall: a confidence interval is a random interval \([\hat{\theta}_L,\hat{\theta}_U]\) that overlaps (or covers) the true value \(\theta\) with probability \[ P\left( \hat{\theta}_L \leq \theta \leq \hat{\theta}_U \right) = 1 - \alpha \,, \] where \(1 - \alpha\) is the confidence coefficient. We determine each interval bound (\(\hat{\theta}_L\) or \(\hat{\theta}_U\)) by solving the following equation for \(\theta\): \[ F_Y(y_{\rm obs} \vert \theta) - q = 0 \,, \] where \(F_Y(\cdot)\) is the cumulative distribution function for the statistic \(Y\), \(y_{\rm obs}\) is the observed value of the statistic, and \(q\) is an appropriate quantile value that is determined using the confidence interval reference table introduced in section 16 of Chapter 1.

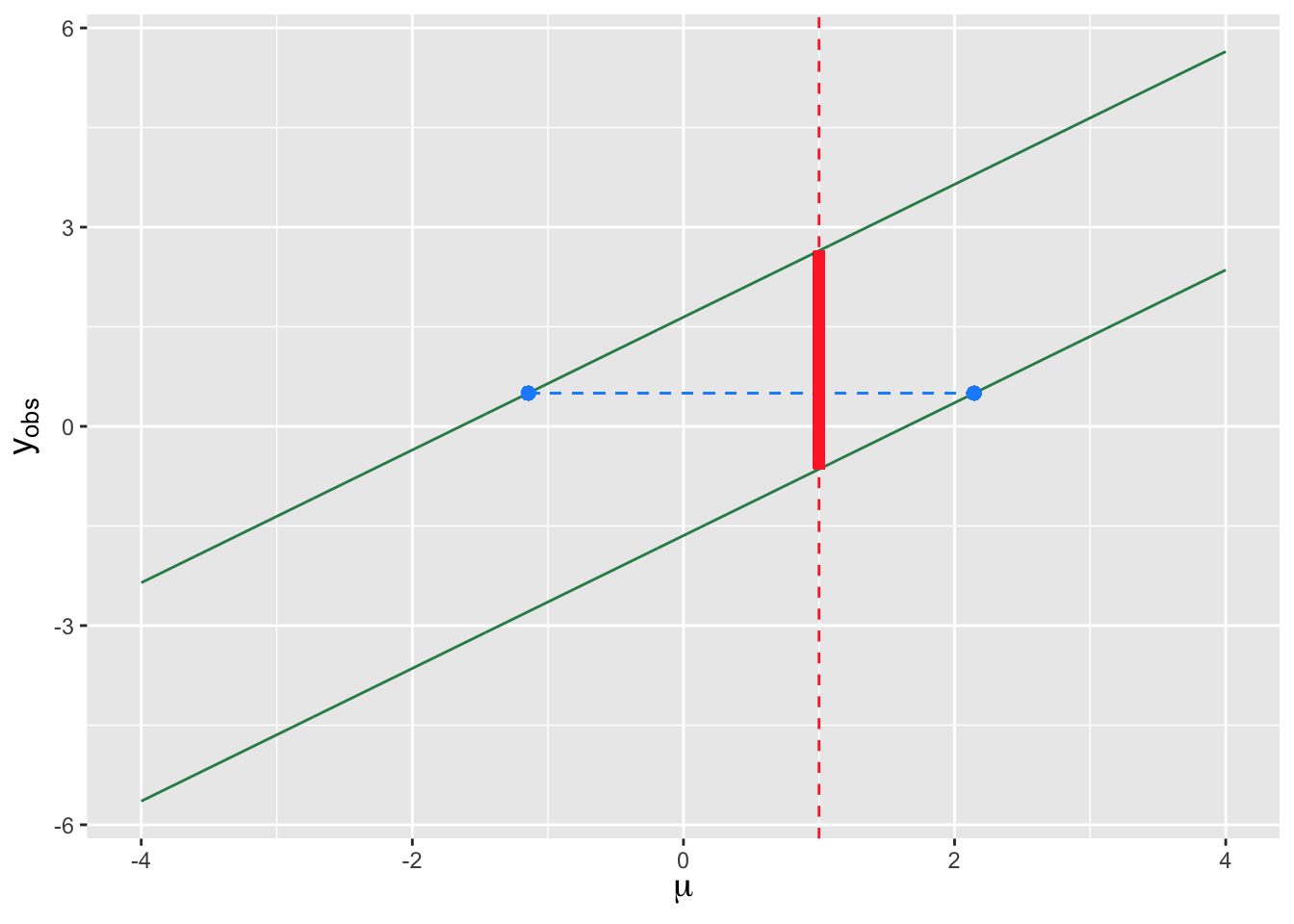

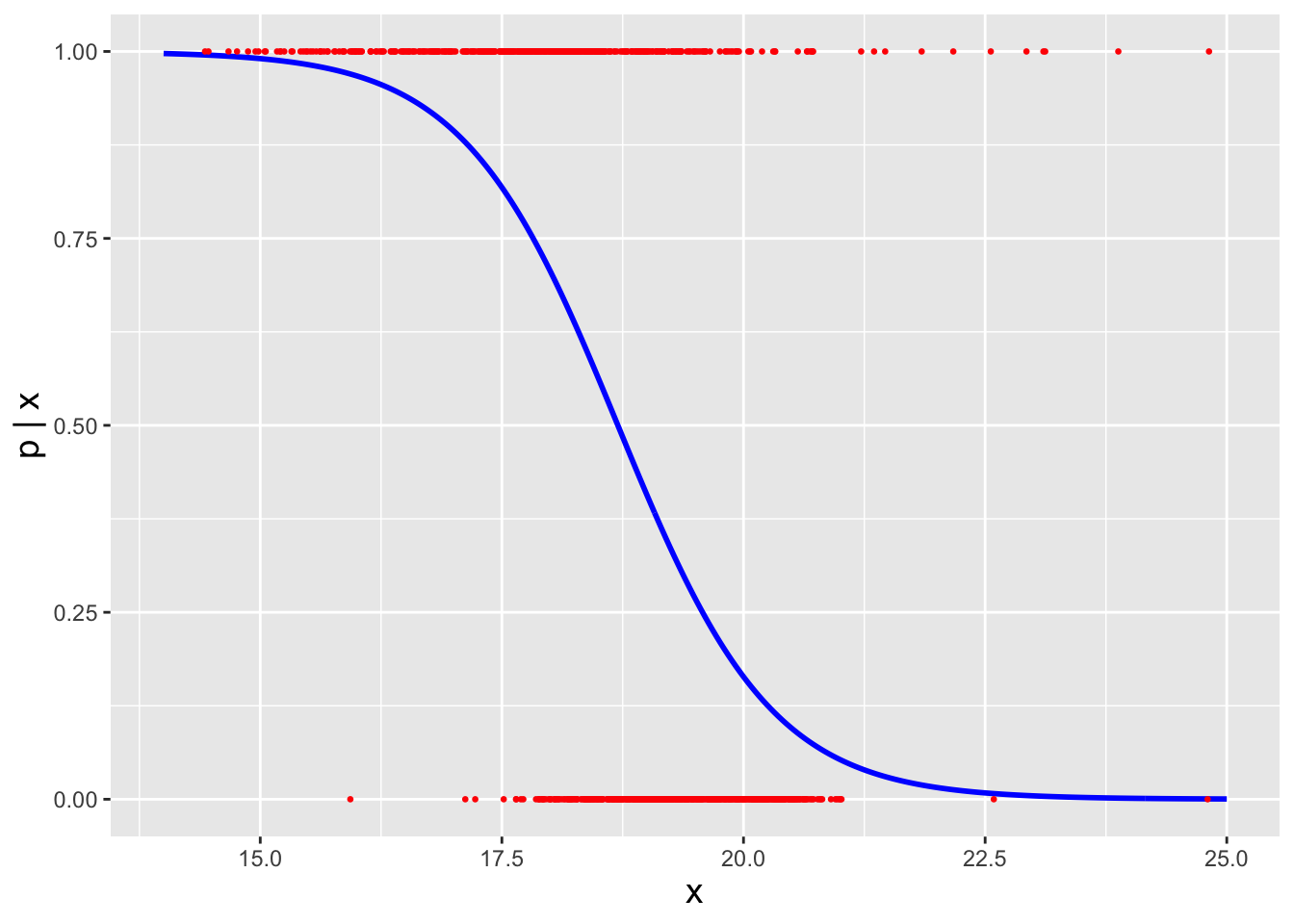

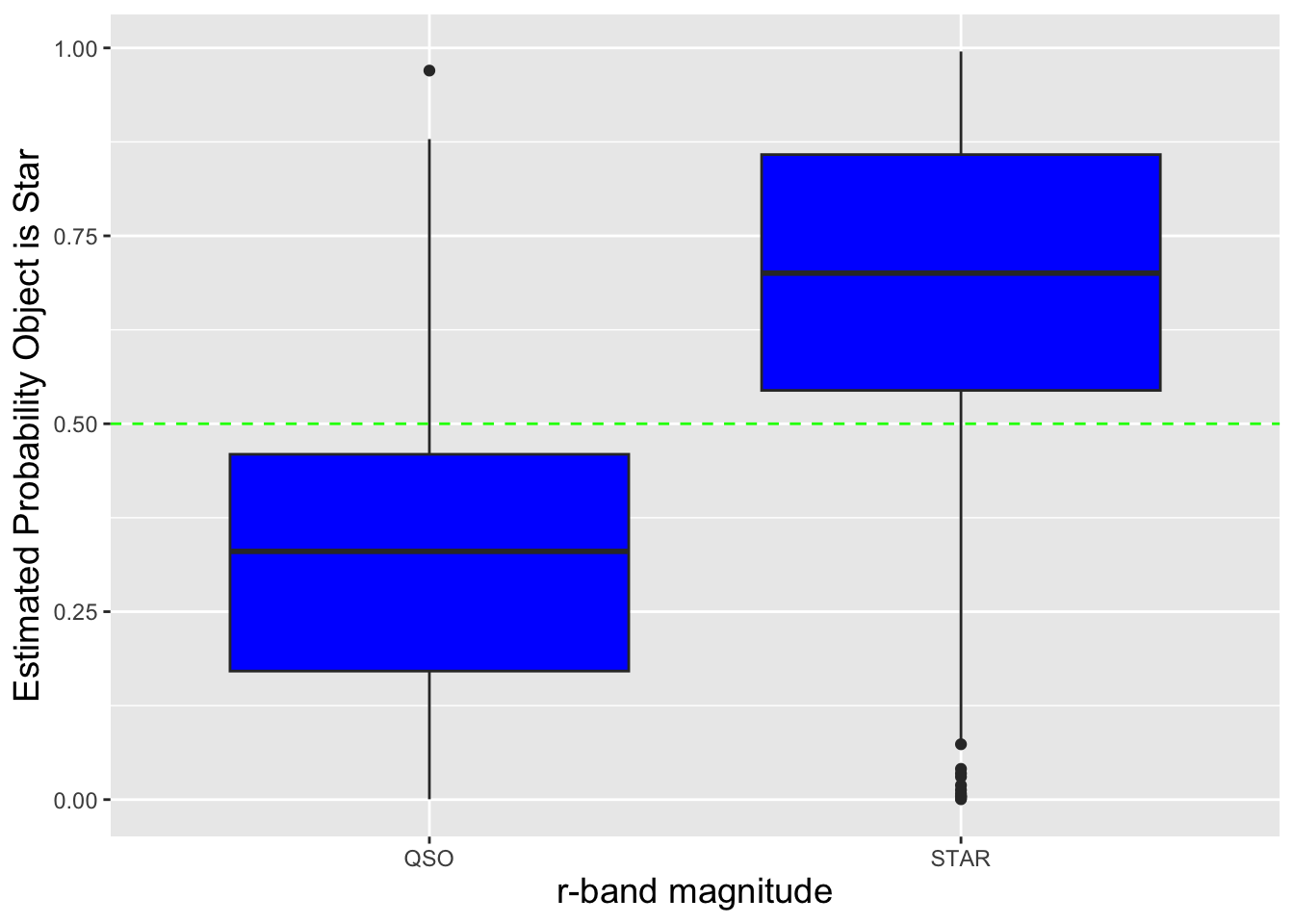

A concept that we have not explicitly discussed up until now is the “duality” between confidence intervals and hypothesis tests: as they are mathematically related, one can, in theory, “invert” hypothesis tests to derive confidence intervals and vice-versa. We illustrate this duality in Figure 3.7, in which we assume that the distribution from which we are to sample data is a normal distribution with mean \(\mu\) and known variance \(\sigma^2\). The parallel green lines in the figure represent lower and upper rejection region boundaries as a function of (the null hypothesis value) \(\mu\). Let’s say that we pick a specific value of \(\mu\), say \(\mu = \mu_o = 1\), and draw a vertical line at that coordinate. The part of that line that lies between the parallel green lines (indicated in solid red) indicates the range of observed statistic values \(y_{\rm obs}\) for which we would fail to reject the null, and the parts above and below the parallel lines (shown in dashed red) would indicate \(y_{\rm obs}\) values for which we would reject the null. (These are the acceptance and rejection regions, respectively; “acceptance region” is a term that we have not used up until now, but it simply denotes the range of statistic values for which we would fail to reject a null hypothesis, when it is indeed correct.) Confidence intervals, on the other hand, are ranges of values of \(\mu\) for which a given value of \(y_{\rm obs}\) lies in the acceptance region. (For instance, the dashed horizontal blue line in the figure shows the range of values associated with a two-sided confidence interval for \(\mu\) given \(y_{\rm obs} = 0.5\).) 
We can see that for a given value of \(\mu\), if we were to sample a value of \(y_{\rm obs}\) between the two green lines (with probability \(1-\alpha\)), then the associated confidence interval will overlap \(\mu\), and if we sample a value of \(y_{\rm obs}\) outside the green lines (with probability \(\alpha\)), the confidence interval will not overlap \(\mu\). The confidence interval coverage is thus exactly \(100(1-\alpha)\) percent.

Figure 3.7: An illustration of the relationship between hypothesis testing and confidence interval estimation for a continuous sampling distribution (here, a normal distribution with known variance). The two parallel green lines define rejection-region boundaries for a two-sided test with null hypothesis parameter value \(\mu\). Assume \(\mu = 1\): the dashed red lines indicate the values of \(y_{\rm obs}\) in the rejection region, while the solid red line indicates the values of \(y_{\rm obs}\) in the acceptance region (where we fail to reject the null). The horizontal dashed blue line shows the confidence interval constructed for a given value of \(y_{\rm obs}\). Given \(\mu\), the probability of sampling a statistic in the acceptance region is exactly \(1-\alpha\), and thus the coverage of confidence intervals will also be exactly \(1-\alpha\).

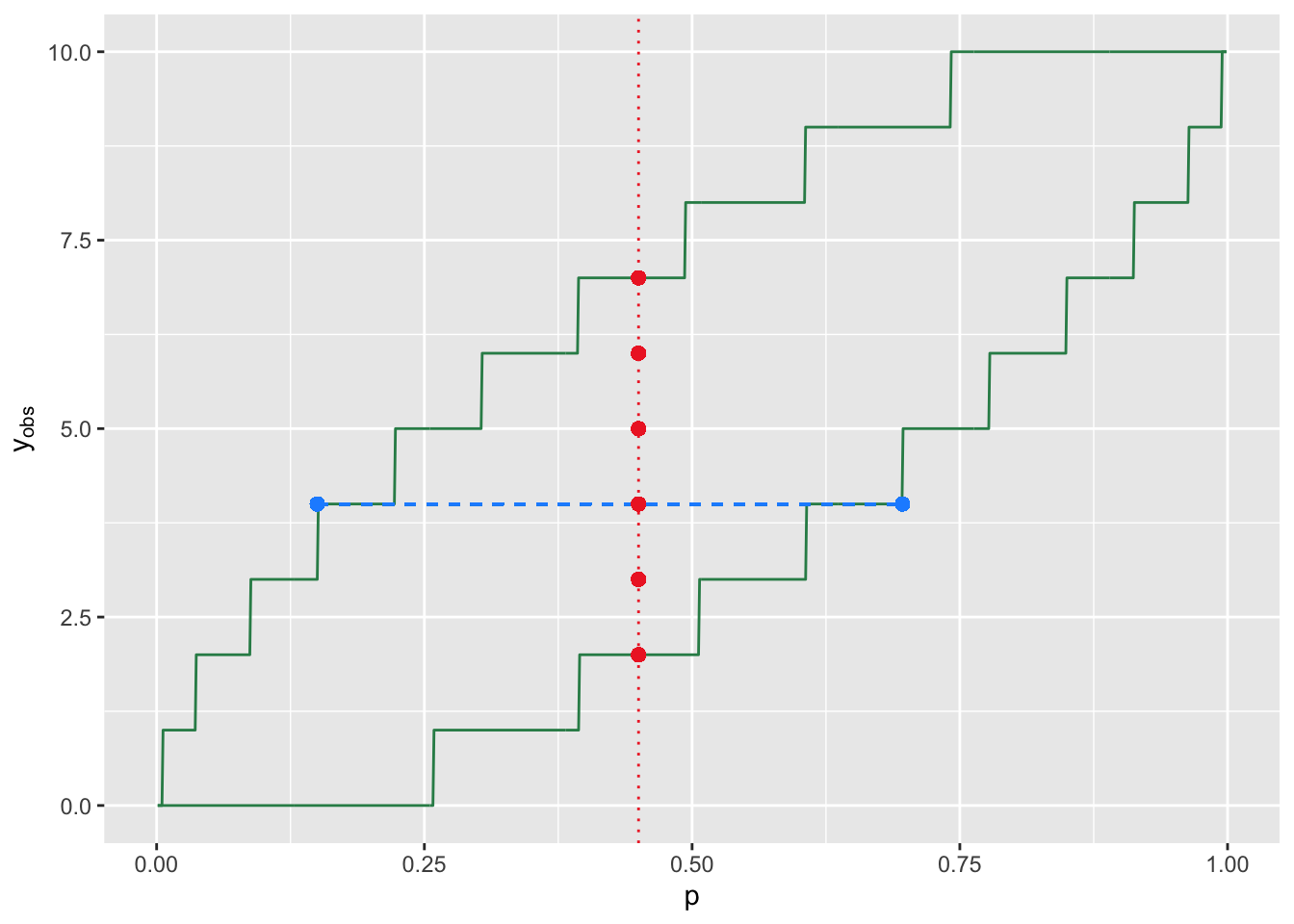

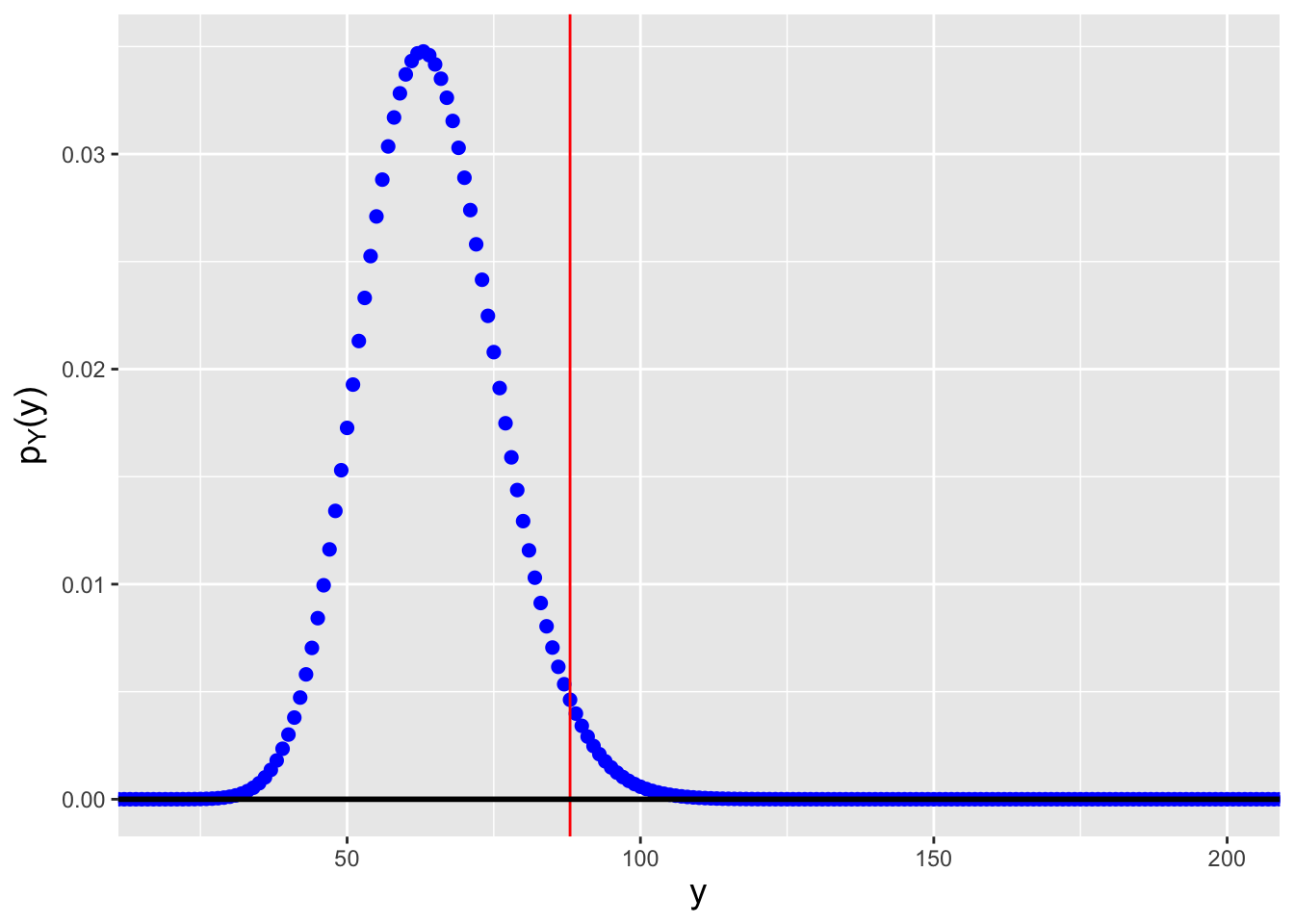
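The duality illustrated in Figure 3.7 can also be checked numerically: a null value \(\mu_o\) lies inside the confidence interval exactly when \(y_{\rm obs}\) lies inside the acceptance region for that \(\mu_o\). This is a minimal sketch in which we assume a single normal datum with known standard deviation `sigma = 1`; these settings, and the grid `mu.o`, are illustrative assumptions and not necessarily those used to make the figure.

```r
alpha <- 0.05
sigma <- 1      # assumed known standard deviation
y.obs <- 0.5    # observed statistic (here, a single datum)
z     <- qnorm(1-alpha/2)

# two-sided confidence interval for mu given y.obs
ci <- c(y.obs - z*sigma, y.obs + z*sigma)

# for each null value mu.o: does y.obs lie in the acceptance region?
mu.o      <- seq(-3, 4, by=0.01)
in.accept <- (y.obs >= mu.o - z*sigma) & (y.obs <= mu.o + z*sigma)

# for each null value mu.o: does mu.o lie in the confidence interval?
in.ci <- (mu.o >= ci[1]) & (mu.o <= ci[2])

identical(in.accept, in.ci)   # the two sets of null values coincide
```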

When we work with discrete sampling distributions, the overall picture is similar, but we sometimes need to make what we will dub “discreteness corrections” so that the codes we use generate the correct results. Figure 3.8 is an analogue to Figure 3.7 in which we illustrate the relationship between confidence intervals and hypothesis tests for a situation in which we conduct \(k = 10\) binomial trials. Because the statistic is discretely valued, the acceptance-region boundaries are step functions, but this is not the only change introduced with discrete distributions:

- In the continuous case, the probability of sampling a datum that lies within the acceptance region for a given value of \(\theta\) is exactly \(1 - \alpha\). In the discrete case, that probability is \(\geq 1 - \alpha\). (And it will rarely, if ever, be the case that the probability masses within the acceptance region will sum to exactly \(1 - \alpha\)…although that sum will trend towards \(1 - \alpha\) as the sample size and/or the number of trials increases. This motivates our use of the word “level,” as in “we conduct a level-\(\alpha\) test,” rather than “size.” For a size-\(\alpha\) test, the probability of sampling a statistic value in the acceptance region is, by definition, exactly \(1-\alpha\), while for a level-\(\alpha\) test, it is \(\geq 1 - \alpha\).)

- Because the probability of sampling a datum in the acceptance region is equal to the confidence interval coverage, the coverage will also be \(\geq 1 - \alpha\).

Figure 3.8: An illustration of the relationship between hypothesis testing and confidence interval estimation for a discrete sampling distribution (here, a binomial distribution with \(k=10\)). The two green lines define rejection-region boundaries for a two-sided test with null hypothesis parameter value \(p\). Assume \(p = 0.45\): the red dots indicate the values of \(y_{\rm obs}\) in the acceptance region (where we fail to reject the null). The horizontal dashed blue line shows the confidence interval constructed for a given value of \(y_{\rm obs}\). Given \(p\), the probability of sampling a statistic in the acceptance region is generally greater than \(1-\alpha\), and thus the coverage of confidence intervals, which has the same value, will also be generally greater than \(1-\alpha\).

The blue dashed line in Figure 3.8 is an example of a confidence interval (specifically for \(y_{\rm obs} = 4\)). In order to construct an interval like this, we would use a uniroot()-style code as we have before, but with the following changes.

- If we are determining a lower bound \(\hat{\theta}_L\) in a situation where \(E[Y]\) increases with \(\theta\), we need to replace \(y_{\rm obs}\) in the input to uniroot() with the next smaller value in the distribution’s domain (e.g., \(y_{\rm obs} - 1\) for a binomially distributed statistic).

- If we are determining an upper bound \(\hat{\theta}_U\) in a situation where \(E[Y]\) decreases with \(\theta\), we need to replace \(y_{\rm obs}\) in the input to uniroot() with the next smaller value in the distribution’s domain (e.g., \(y_{\rm obs} - 1\) for a negative binomially distributed statistic).

We note that for the specific case of the binomial distribution, the confidence intervals that we construct are dubbed exact, or Clopper-Pearson, intervals. Because binomial distributions have historically been difficult to work with analytically, a number of algorithms have been developed through the years for constructing approximate confidence intervals for binomial probabilities. (See, e.g., this Wikipedia page for examples.) It is our opinion that there is no reason to utilize any of these algorithms when one can compute exact intervals, particularly since the coverages of exact intervals are easily derived for any given value \(p\). However, for completeness, we illustrate how one would construct the most-commonly used approximating interval, the Wald interval, in an example below.

3.7.1 Confidence Interval for the Binomial Success Probability

Assume that we sample \(n\) iid data from a binomial distribution with number of trials \(k\) and probability \(p\). Then, as shown above, \(Y = \sum_{i=1}^n X_i \sim\) Binom(\(nk,p\)); our observed test statistic is \(y_{\rm obs} = \sum_{i=1}^n x_i\). For this statistic, \(E[Y] = nkp\) increases with \(p\), so \(q = 1-\alpha/2\) maps to the lower bound, while \(q = \alpha/2\) maps to the upper bound.

set.seed(101)

alpha <- 0.05

n <- 12

k <- 5

p <- 0.4

X <- rbinom(n,size=k,prob=p)

f <- function(p,y.obs,n,k,q)

{

pbinom(y.obs,size=n*k,prob=p)-q

}

uniroot(f,interval=c(0,1),y.obs=sum(X)-1,n,k,1-alpha/2)$root # note correction

## [1] 0.2459379

uniroot(f,interval=c(0,1),y.obs=sum(X),n,k,alpha/2)$root

## [1] 0.5010387

We find that the interval is \([\hat{p}_L,\hat{p}_U] = [0.246,0.501]\), which overlaps the true value of 0.4. (See Figure 3.9.) Note that, unlike in Chapter 2, the interval over which we search for the root is [0,1], which is the range of possible values for \(p\).

We can compute the coverage of this interval following the prescription given above:

y.rr.lo <- qbinom(alpha/2,n*k,p)

y.rr.hi <- qbinom(1-alpha/2,n*k,p)

sum(dbinom(y.rr.lo:y.rr.hi,n*k,p))

## [1] 0.9646363

Due to the discreteness of the binomial distribution, the true coverage of our two-sided intervals, in this particular circumstance, is 96.5%, not 95%.
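The same acceptance-region calculation can be repeated over a grid of success probabilities to see how the coverage varies with \(p\). This is a minimal sketch: the function name `coverage()` and the grid `p.grid` are hypothetical choices made for illustration, while \(n\), \(k\), and \(\alpha\) match the example above.

```r
alpha <- 0.05
n     <- 12
k     <- 5

# probability mass inside the acceptance region for a given p (hypothetical helper)
coverage <- function(p, n, k, alpha)
{
  y.rr.lo <- qbinom(alpha/2, n*k, p)
  y.rr.hi <- qbinom(1-alpha/2, n*k, p)
  sum(dbinom(y.rr.lo:y.rr.hi, n*k, p))
}

p.grid <- seq(0.05, 0.95, by=0.01)
cov.p  <- sapply(p.grid, coverage, n=n, k=k, alpha=alpha)

range(cov.p)   # the coverage never drops below 1 - alpha on this grid
```

Plotting `cov.p` against `p.grid` would show the coverage jumping up and down as the acceptance-region boundaries step from one integer to the next.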

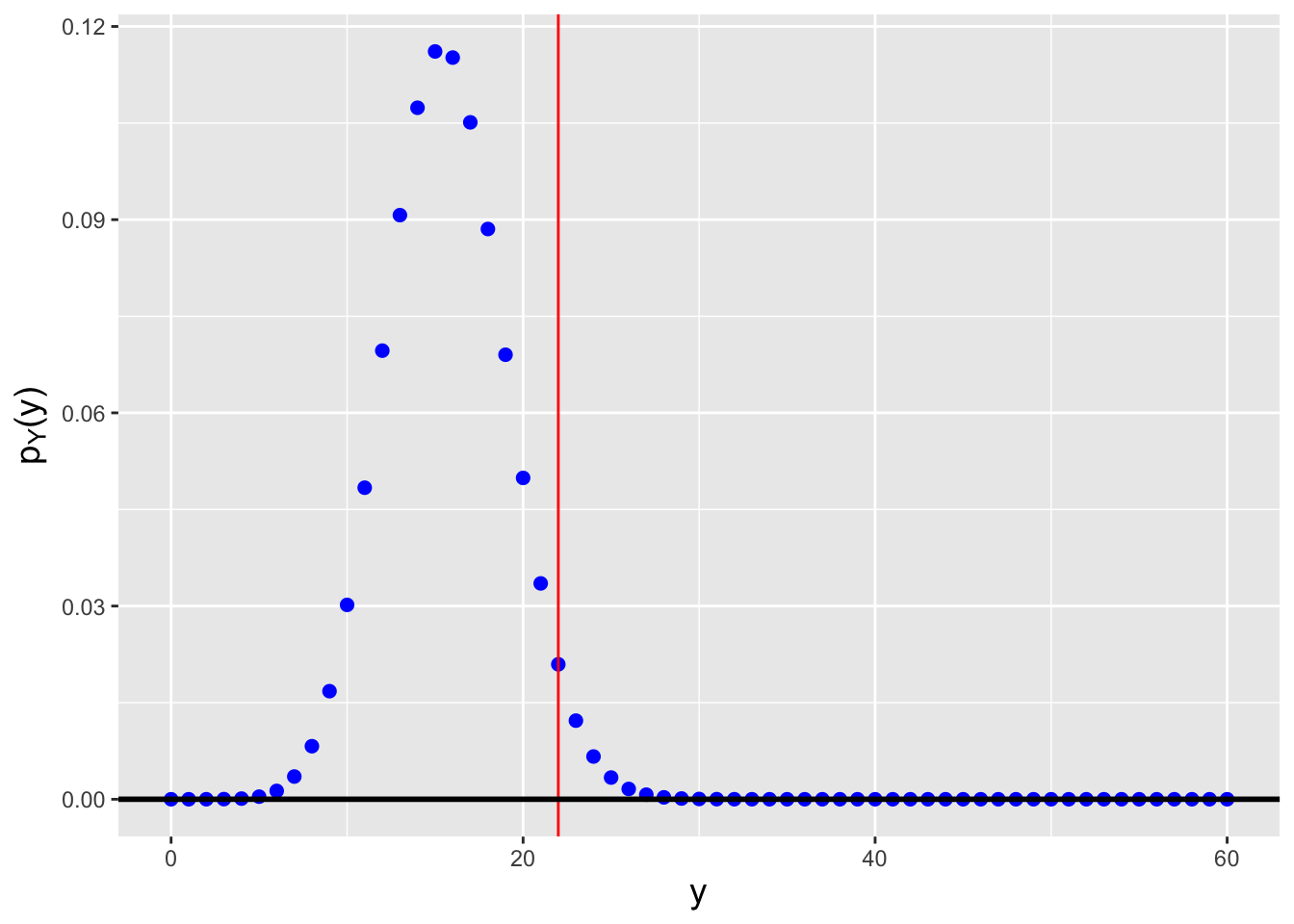

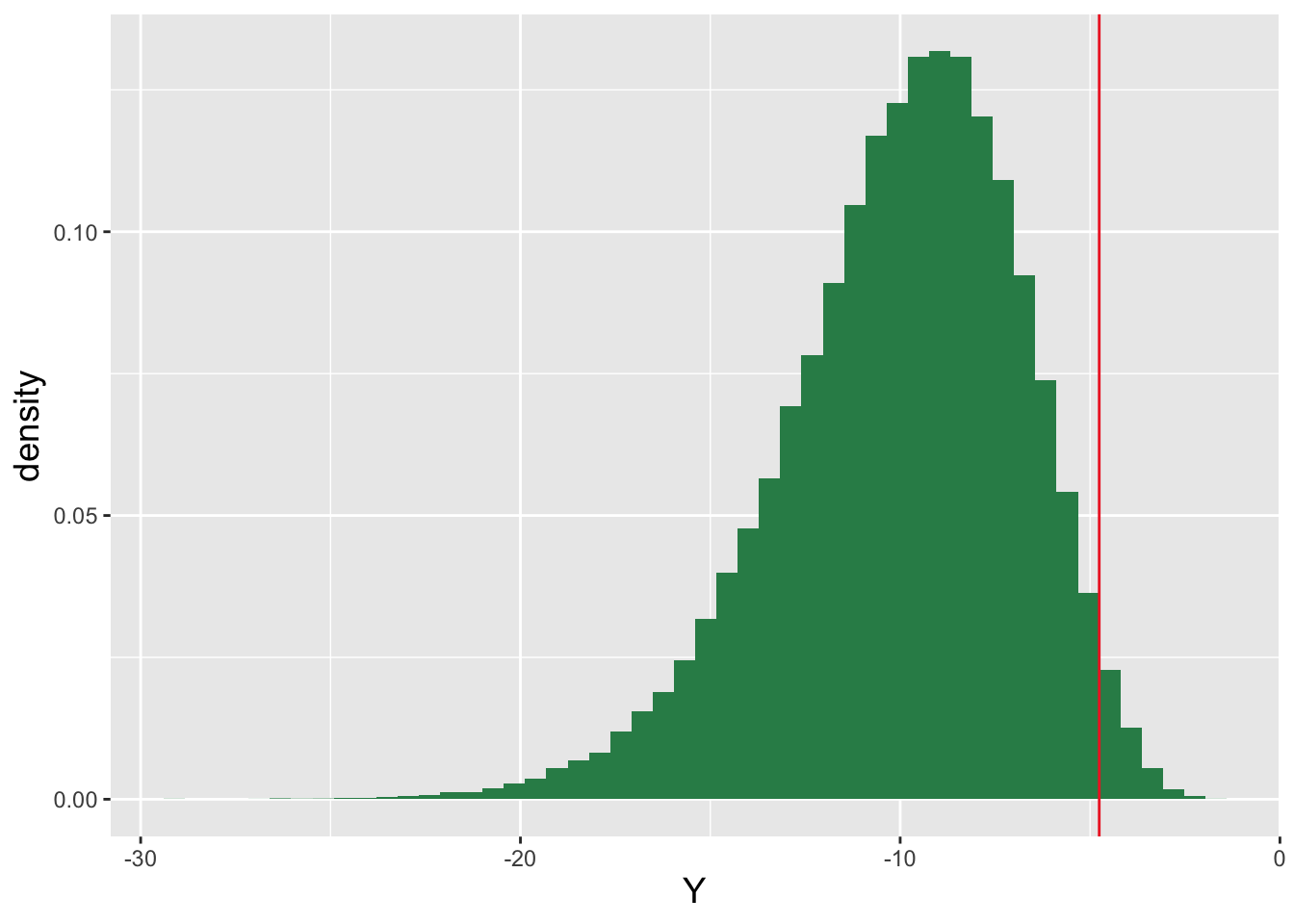

Figure 3.9: Probability mass functions for binomial distributions for which \(n \cdot k= 12 \cdot 5 = 60\) and (left) \(p=0.246\) and (right) \(p=0.501\). We observe \(y_{\rm obs} = \sum_{i=1}^n x_i = 22\) successes and we want to construct a 95% confidence interval. \(p=0.246\) is the smallest value of \(p\) such that \(F_Y^{-1}(0.975) = 22\), while \(p=0.501\) is the largest value of \(p\) such that \(F_Y^{-1}(0.025) = 22\).

3.7.2 Confidence Interval for the Negative Binomial Success Probability

Let’s assume that we have performed \(n = 12\) separate negative binomial trials, each with a target number of successes \(s\), and recorded the number of failures \(X_1,\ldots,X_{n}\) for each. Further, assume that the probability of success is \(p\). Below we will show how to compute the confidence interval for \(p\), but before we start, we recall that \(Y = \sum_{i=1}^n X_i\) is a negative binomially distributed random variable for \(ns\) successes and probability of success \(p\). Here, \(E[Y] = ns(1-p)/p\)…as \(p\) increases, \(E[Y]\) decreases. Thus when we adapt the confidence interval code we use for binomially distributed data, we need to switch the mapping of \(q = 1-\alpha/2\) and \(q = \alpha/2\) to the upper and lower bounds, respectively.

set.seed(101)

alpha <- 0.05

n <- 12

s <- 5

p <- 0.4

X <- rnbinom(n,size=s,prob=p)

f <- function(p,y.obs,n,s,q)

{

pnbinom(y.obs,size=n*s,prob=p)-q

}

uniroot(f,interval=c(0.0001,1),y.obs=sum(X),n,s,alpha/2)$root

## [1] 0.3255305

uniroot(f,interval=c(0.0001,1),y.obs=sum(X)-1,n,s,1-alpha/2)$root # note correction

## [1] 0.4853274

The confidence interval is \([\hat{p}_L,\hat{p}_U] = [0.326,0.485]\), which overlaps the true value 0.4. We note that in the code, we change the lower bound on the interval from 0 (in the binomial case) to 0.0001 (something suitably small but non-zero): a probability of success of 0 maps to an infinite number of failures, which R cannot tolerate!

We can compute the coverage of this interval following the prescription given above:

y.rr.lo <- qnbinom(alpha/2,n*s,p) # lower acceptance-region boundary

y.rr.hi <- qnbinom(1-alpha/2,n*s,p) # upper acceptance-region boundary

sum(dnbinom(y.rr.lo:y.rr.hi,n*s,p))

## [1] 0.9510741

Due to the discreteness of the negative binomial distribution, the true coverage of our two-sided intervals is 95.1%, not 95%.

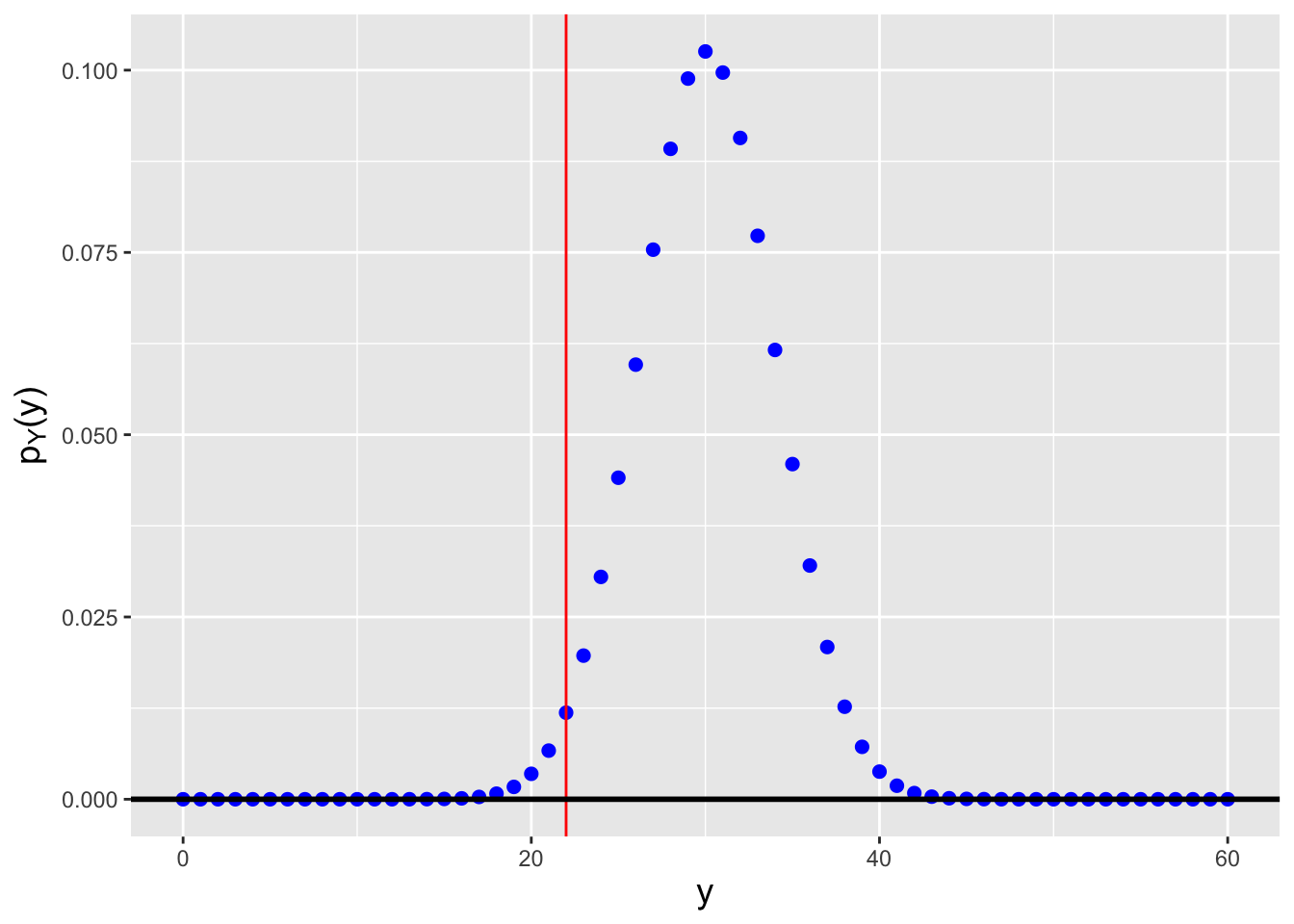

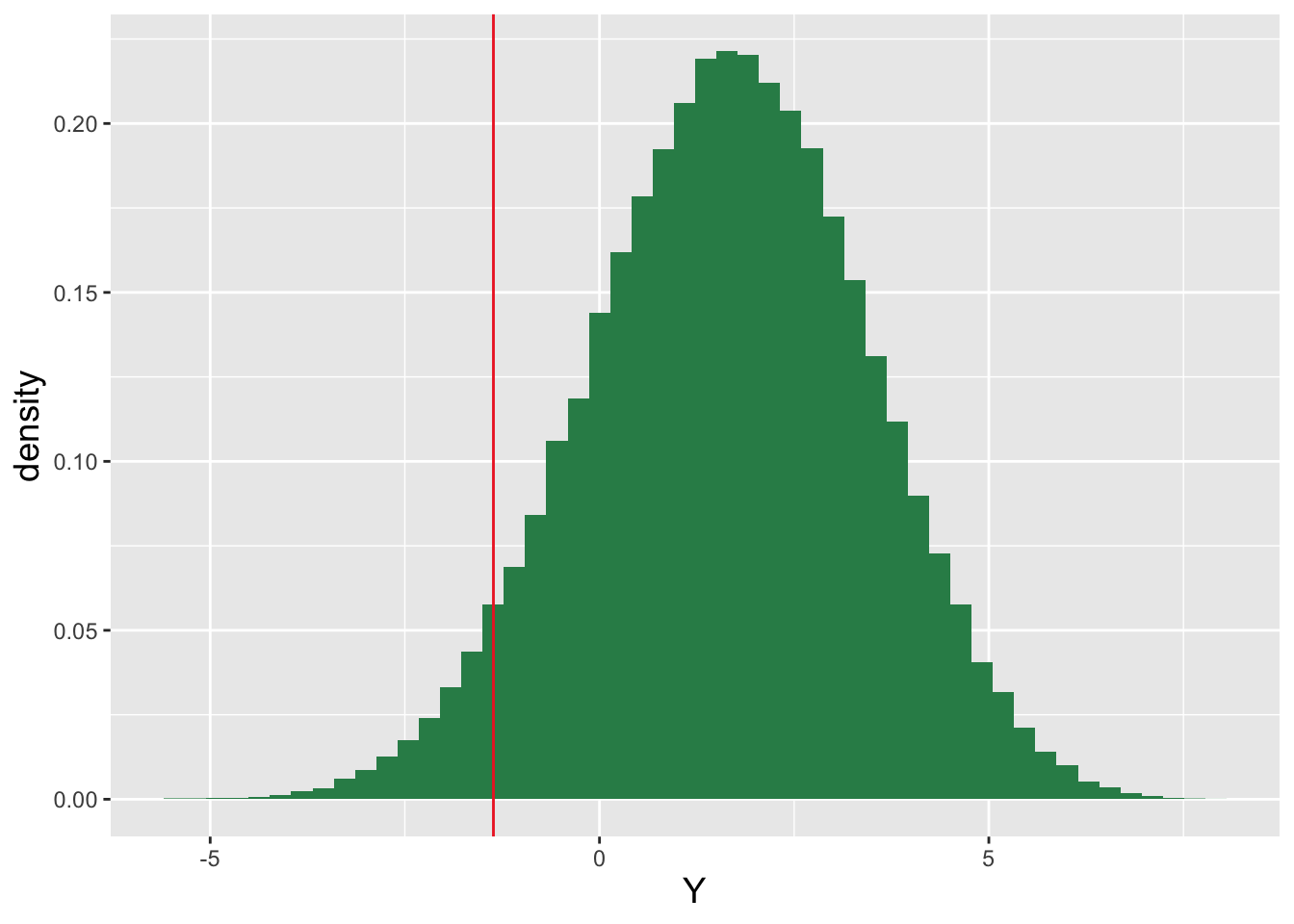

Figure 3.10: Probability mass functions for negative binomial distributions for which \(n \cdot s = 12 \cdot 5 = 60\) and (left) \(p=0.326\) and (right) \(p=0.485\). We observe \(y_{\rm obs} = \sum_{i=1}^n x_i = 88\) failures and we want to construct a 95% confidence interval. \(p=0.326\) is the smallest value of \(p\) such that \(F_Y^{-1}(0.025) = 88\), while \(p=0.485\) is the largest value of \(p\) such that \(F_Y^{-1}(0.975) = 88\).

3.7.3 Wald Interval for the Binomial Success Probability

Let’s assume that we have sampled \(n\) iid binomial variables with number of trials \(k\) and probability of success \(p\). When \(k\) is sufficiently large and \(p\) is sufficiently far from 0 or 1, we can assume that \(\bar{X}\) has a distribution whose shape is approximately that of a normal distribution, with mean \(E[\bar{X}] = kp\) and with variance and standard error \[ V[\bar{X}] = \frac{kp(1-p)}{n} ~~~ \mbox{and} ~~~ se(\bar{X}) = \sqrt{V[\bar{X}]} = \sqrt{\frac{kp(1-p)}{n}} \,. \] Furthermore, we can assume that \(\hat{p} = \bar{X}/k\) is approximately normally distributed, with mean \(p\), variance \(V[\hat{p}] = V[\bar{X}]/k^2 = p(1-p)/nk\), and standard error \(\sqrt{p(1-p)/nk}\). Given this information, it is simple to express, e.g., an approximate two-sided \(100(1-\alpha)\)% confidence interval for \(p\): \[ \hat{p} \pm z_{1-\alpha/2} se(\hat{p}) ~~ \Rightarrow ~~ \left[ \hat{p} - z_{1-\alpha/2} \sqrt{\frac{p(1-p)}{nk}} \, , \, \hat{p} + z_{1-\alpha/2} \sqrt{\frac{p(1-p)}{nk}} \right] \,, \] where \(z_{1-\alpha/2} = \Phi^{-1}(1-\alpha/2)\). However, we don’t actually know the true value of \(p\)…so we plug in \(p = \hat{p}\).

This is the so-called Wald interval that is typically provided to students in introductory statistics courses, although it is usually stated for the case \(n = 1\) and \(\alpha = 0.05\): \[ \left[ \hat{p} - 1.96 \sqrt{\frac{\hat{p}(1-\hat{p})}{k}} \, , \, \hat{p} + 1.96 \sqrt{\frac{\hat{p}(1-\hat{p})}{k}} \right] \,, \] where in this case \(\hat{p} = X/k\).

What is the Wald interval for the situation provided in the first example above?

set.seed(101)

alpha <- 0.05

n <- 12

k <- 5

p <- 0.4

X <- rbinom(n,size=k,prob=p)

z <- qnorm(1-alpha/2)

p.hat <- sum(X)/(n*k)

p.hat - z*sqrt(p.hat*(1-p.hat)/(n*k))

## [1] 0.2447328

p.hat + z*sqrt(p.hat*(1-p.hat)/(n*k))

## [1] 0.4886005

We can compare these values to those generated in the first example: \([0.236,0.501]\). Here, the interval is narrower than the Clopper-Pearson interval, and thus the coverage for \(p = 0.4\) (which we would have to estimate via simulation) will be smaller as well.
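The coverage mentioned above can be estimated with a short simulation. The following is a minimal sketch, not a definitive implementation: it reuses the settings of the example, the number of simulated experiments `num.sim` is an assumed value, and it relies on the fact that the sum of \(n\) iid Binom(\(k,p\)) draws is distributed as Binom(\(nk,p\)). An exact sum over the pmf is included as a cross-check.

```r
# Estimate the coverage of the Wald interval at p = 0.4 by simulation.
set.seed(101)
alpha   <- 0.05
n       <- 12
k       <- 5
p       <- 0.4
num.sim <- 10000                       # assumed number of simulated experiments

z <- qnorm(1-alpha/2)

# Simulate the sum of the n binomial draws directly: Y ~ Binom(n*k,p)
y     <- rbinom(num.sim,size=n*k,prob=p)
p.hat <- y/(n*k)
half  <- z*sqrt(p.hat*(1-p.hat)/(n*k))

# Fraction of simulated Wald intervals that contain the true p
coverage.sim <- mean(p.hat-half <= p & p <= p.hat+half)

# Cross-check: sum the pmf over every value of y whose interval contains p
y.all          <- 0:(n*k)
p.all          <- y.all/(n*k)
half.all       <- z*sqrt(p.all*(1-p.all)/(n*k))
covers         <- p.all-half.all <= p & p <= p.all+half.all
coverage.exact <- sum(dbinom(y.all,n*k,p)[covers])

c(coverage.sim,coverage.exact)
```

The two numbers should agree to within simulation error. Repeating the calculation for other values of \(p\) shows how the coverage of the Wald interval varies with the true success probability.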

3.8 Hypothesis Testing

Recall: a hypothesis test is a framework to make an inference about the value of a population parameter \(\theta\). The null hypothesis \(H_o\) is that \(\theta = \theta_o\), while possible alternatives \(H_a\) are \(\theta \neq \theta_o\) (two-tail test), \(\theta > \theta_o\) (upper-tail test), and \(\theta < \theta_o\) (lower-tail test). For, e.g., a one-tail test, we reject the null hypothesis if the observed test statistic \(y_{\rm obs}\) falls outside the bound given by \(y_{RR}\), which is a solution to the equation \[ F_Y(y_{RR} \vert \theta_o) - q = 0 \,, \] where \(F_Y(\cdot)\) is the cumulative distribution function for the statistic \(Y\) and \(q\) is an appropriate quantile value that is determined using the hypothesis test reference table introduced in section 17 of Chapter 1. Note that the hypothesis test framework only allows us to make a decision about a null hypothesis; nothing is proven.

In the previous chapter, we utilized \(\bar{X}\) when testing hypotheses about the normal mean \(\mu\). This is a principled choice for a test statistic (after all, \(\bar{X}\) is the MLE for \(\mu\)), but we do not yet know whether we can choose a better one. Can we differentiate hypotheses more easily if we use a test statistic other than \(\bar{X}\)?

To help answer this question, we now introduce a method for defining the most powerful test of a simple null hypothesis versus a simple alternative hypothesis: \[ H_o : \theta = \theta_o ~~\mbox{and}~~ H_a : \theta = \theta_a \,. \] Note that the word “simple” has a precise meaning here: it means that when we set \(\theta\) to a particular value, we are completely fixing the shape and location of the pmf or pdf from which data are sampled. If, for instance, we are dealing with a normal distribution with unknown variance \(\sigma^2\), the hypothesis \(\mu = \mu_o\) would not be simple, since the width of the pdf can still vary: the shape is not completely fixed. (The hypothesis \(\mu = \mu_o\) with variance unknown is dubbed a composite hypothesis. We will examine how to work with composite hypotheses in the next chapter.)

For a given test level \(\alpha\), the Neyman-Pearson lemma states that the test that maximizes the power has a rejection region of the form \[ \lambda_{NP} = \frac{\mathcal{L}(\theta_o \vert \mathbf{x})}{\mathcal{L}(\theta_a \vert \mathbf{x})} < c(\alpha) \,, \] where \(c(\alpha)\) is a constant, dependent on \(\alpha\), that we have to determine. While this formulation initially appears straightforward, it is in fact not necessarily clear how to derive \(c(\alpha)\). However, when we work with distributions that belong to the exponential family, we don’t need to! The NP lemma utilizes the likelihood function, so in this situation it is implicitly telling us that the best statistic for differentiating between two simple hypotheses is a sufficient statistic. We simply determine a sufficient statistic and use its sampling distribution to define a hypothesis test via the procedure we have laid out in previous chapters. Full stop. (What if the distribution we are working with is not a member of the exponential family? We would need to determine the sampling distribution of \(\lambda_{NP}\) itself, something most easily done via the use of simulations. We illustrate this in an example below.)

For instance, when we draw \(n\) iid data from a binomial distribution, the sufficient statistic \(\sum_{i=1}^n X_i\) has a known and easily utilized sampling distribution, namely Binom(\(nk,p\)), while \(\bar{X} = (\sum_{i=1}^n X_i)/n\) does not. So we would utilize \(Y = \sum_{i=1}^n X_i\). (Note that it ultimately doesn’t matter which function of a sufficient statistic we use: if we can carry out the math, we will end up with tests that have the same power and result in the same \(p\)-values. It would be very problematic if this weren’t the case: we’d have to “fish around” to determine which function of a sufficient statistic would give us the best test, which clearly would not be a good situation to find ourselves in.)
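To see numerically why the sufficient statistic carries all of the information in \(\lambda_{NP}\), we can evaluate the likelihood ratio for binomial data as a function of \(y = \sum_{i=1}^n x_i\). The sketch below uses assumed, purely illustrative values of \(n\), \(k\), \(p_o\), and \(p_a\). Because the binomial coefficients cancel in the ratio, \(\lambda_{NP}\) depends on the data only through \(y\).

```r
# Log of the NP likelihood ratio for n iid Binom(k,p) data, written as a
# function of the sufficient statistic y = sum(x).
# All numerical values below are assumed for illustration only.
n   <- 12
k   <- 5
p.o <- 0.4     # null value
p.a <- 0.3     # alternative value, with p.a < p.o

y <- 0:(n*k)   # every possible value of the sufficient statistic

# lambda = (p.o/p.a)^y * ((1-p.o)/(1-p.a))^(n*k-y); work on the log scale
log.lambda <- y*log(p.o/p.a) + (n*k-y)*log((1-p.o)/(1-p.a))

# TRUE: the ratio increases strictly with y when p.a < p.o
all(diff(log.lambda) > 0)
```

Since \(\lambda_{NP}\) increases monotonically with \(y\) when \(p_a < p_o\), the condition \(\lambda_{NP} < c(\alpha)\) is equivalent to \(y\) falling below some boundary, i.e., to a lower-tail test based on \(Y\).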

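Let’s assume that we use \(Y = \sum_{i=1}^n X_i\) to define, e.g., a lower-tail test \(H_o : p = p_o\) versus \(H_a : p = p_a < p_o\). Since \(E[Y] = nkp\) increases with \(p\), we can go to the hypothesis test reference tables and write the rejection-region boundary as \[ y_{\rm RR} = F_Y^{-1}(\alpha \vert p_o) \,, \] which in code is a call to `qbinom` with probability \(\alpha\), number of trials \(nk\), and success probability \(p_o\).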
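A minimal sketch of this boundary calculation in R follows. The values of `alpha`, `n`, `k`, and `p.o` are assumptions chosen for illustration, and the inverse cdf of Binom(\(nk,p_o\)) is evaluated at \(\alpha\) without any discreteness correction.

```r
# Rejection-region boundary for a lower-tail test of H_o : p = p.o,
# using Y = sum(X) ~ Binom(n*k,p.o) under the null.
# All numerical values are assumed for illustration only.
alpha <- 0.05
n     <- 12
k     <- 5
p.o   <- 0.4

# Inverse cdf evaluated at alpha; note that p.a appears nowhere
y.rr <- qbinom(alpha,n*k,p.o)
y.rr

# We would reject H_o if the observed sum falls below y.rr; the
# probability of doing so under the null does not exceed alpha
pbinom(y.rr-1,n*k,p.o)
```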

We see that our rejection region boundary depends on the value of \(p_o\), but not on the value of \(p_a\). This means that the test we define above is the most-powerful test regardless of the value \(p_a < p_o\). We have thus constructed a uniformly most powerful (or UMP) test for disambiguating the simple hypotheses \(H_o : p = p_o\) and \(H_a : p = p_a < p_o\). It is typically the case that when we use the NP lemma to define a most powerful test for \(\theta_o\) versus \(\theta_a\), we end up defining a UMP test as well. (That is because the families of distributions that we work with typically exhibit so-called monotone likelihood ratios [or MLRs]. For more information on MLRs and UMPs, the interested reader should look at the Karlin-Rubin theorem.)

We note that we cannot use the NP lemma to construct most powerful two-tail hypothesis tests. When we construct a two-tail test, it is convention to define one rejection region boundary assuming \(q = \alpha/2\) and the other assuming \(q = 1 - \alpha/2\). But that is just convention; we could put \(\alpha/10\) on “one side” and \(1-9\alpha/10\) on the other, etc., and in general we cannot guarantee that any one way of splitting \(\alpha\) will yield a more powerful test in a given situation than any other possible split.

We conclude our present discussion of hypothesis tests with an overview of how working with a discrete sampling distribution affects the computation of \(p\)-values and test power.

In the last section, we introduced the idea of a “discreteness correction”; for confidence interval calculations, this involved changing the observed statistic value to the next lower value in the domain of the sampling distribution when estimating a lower interval bound (with \(E[Y]\) increasing with \(\theta\)) or when estimating an upper interval bound (with \(E[Y]\) decreasing with \(\theta\)). (For the binomial and negative binomial distributions, this means changing \(y_{\rm obs}\) to \(y_{\rm obs}-1\).) What are the discreteness corrections that we need to make when performing hypothesis tests? First, there are none when computing rejection-region boundaries. Second, when computing \(p\)-values, we change the observed statistic value to the next lower value in the domain of the sampling distribution when performing

- upper-tail tests where \(E[Y]\) increases with \(\theta\) and

- lower-tail tests where \(E[Y]\) decreases with \(\theta\).

Last, when computing test power, we change the derived rejection-region boundary to the next lower value in the domain of the sampling distribution when performing

- upper-tail tests where \(E[Y]\) decreases with \(\theta\) and

- lower-tail tests where \(E[Y]\) increases with \(\theta\).
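As an illustration of the \(p\)-value correction, consider an upper-tail test of a binomial success probability, for which \(E[Y]\) increases with \(p\). The sketch below uses assumed values for `n`, `k`, `p.o`, and `y.obs`. It shows that replacing \(y_{\rm obs}\) with \(y_{\rm obs}-1\) yields \(P(Y \geq y_{\rm obs})\), whereas the uncorrected expression leaves out the probability mass at \(y_{\rm obs}\) itself.

```r
# Discreteness correction for an upper-tail p-value, with Y ~ Binom(n*k,p.o).
# All numerical values are assumed for illustration only.
n     <- 12
k     <- 5
p.o   <- 0.4
y.obs <- 30    # hypothetical observed number of successes

# Corrected: 1 - F(y.obs - 1) = P(Y >= y.obs)
p.corrected   <- 1 - pbinom(y.obs-1,n*k,p.o)

# Uncorrected: 1 - F(y.obs) = P(Y > y.obs), which omits P(Y = y.obs)
p.uncorrected <- 1 - pbinom(y.obs,n*k,p.o)

# Direct check: add up the pmf from y.obs to the maximum value n*k
p.direct <- sum(dbinom(y.obs:(n*k),n*k,p.o))

c(p.corrected,p.uncorrected,p.direct)
```

The corrected and direct values agree, and the uncorrected value is smaller by exactly \(P(Y = y_{\rm obs})\).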

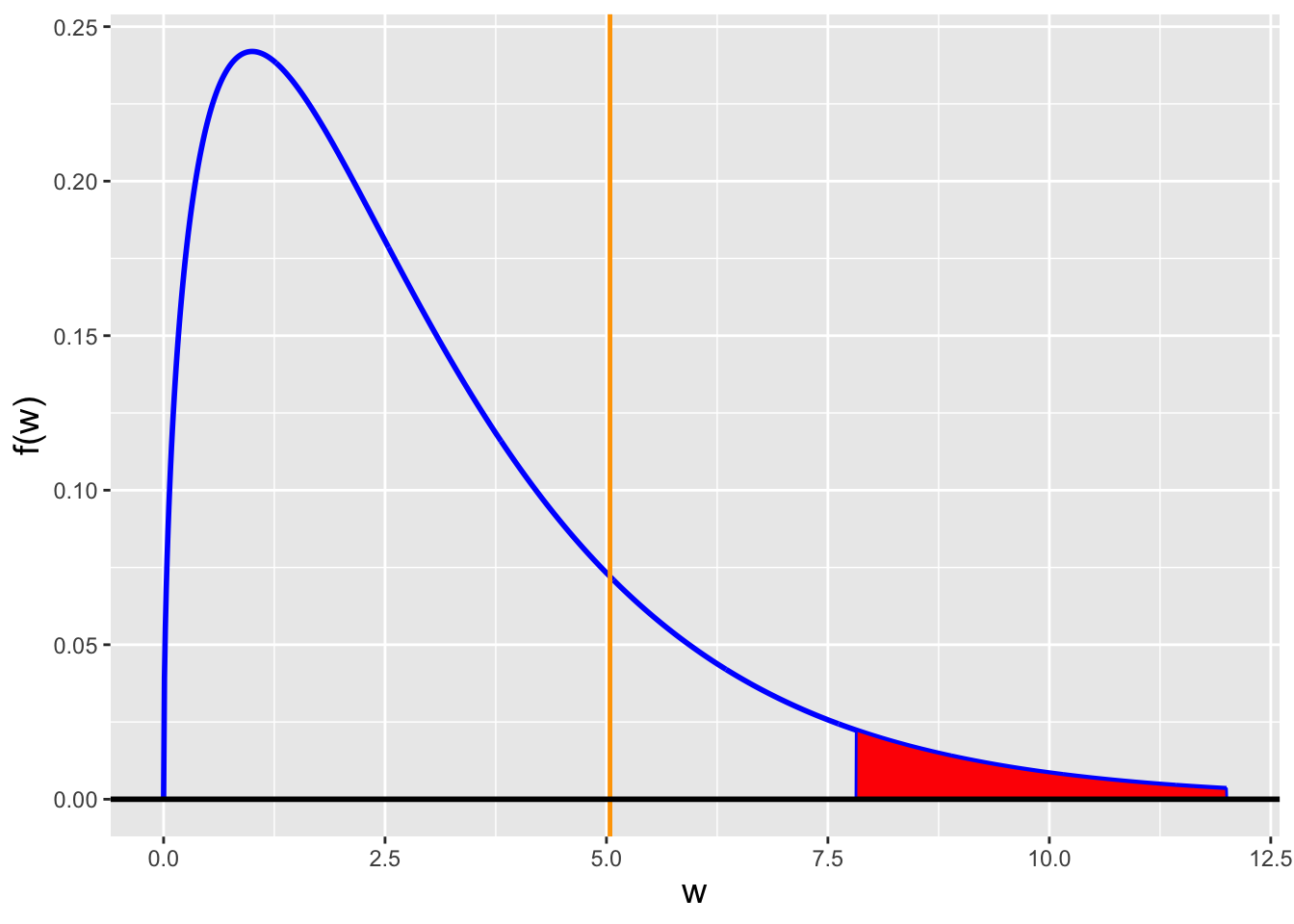

3.8.1 UMP Test: Exponential Distribution

Let’s suppose we sample \(n = 3\) iid data from the exponential distribution \[ f_X(x) = \frac{1}{\theta} e^{-x/\theta} \,, \] for \(x \geq 0\) and \(\theta > 0\), and we wish to define the most powerful test of the simple hypotheses \(H_o : \theta_o = 2\) and \(H_a : \theta_a = 1\). We observe the values \(\mathbf{x}_{\rm obs} = \{0.215,1.131,2.064\}\), and we assume \(\alpha = 0.05\).

We begin by determining a sufficient statistic: \[ \mathcal{L}(\theta \vert \mathbf{x}) = \prod_{i=1}^3 \frac{1}{\theta} e^{-x_i/\theta} = \frac{1}{\theta^3} e^{-(\sum_{i=1}^3 x_i)/\theta} \,. \] We identify \(Y = \sum_{i=1}^3 X_i\) as a sufficient statistic. The next question is whether we can determine the sampling distribution for \(Y\). The moment-generating function for each of the \(X_i\)’s is \[ m_{X_i}(t) = (1 - \theta t)^{-1} \,, \] and so the mgf for \(Y\) is \[ m_Y(t) = \prod_{i=1}^3 m_{X_i}(t) = (1 - \theta t)^{-3} \,. \] We (might!) recognize this as the mgf for a Gamma(3,\(\theta\)) distribution. (The gamma distribution will be officially introduced in Chapter 4.) So: we know the sampling distribution for \(Y\), and we can use it to define the most powerful test. To reiterate: the only thing that the NP lemma is doing for us here is guiding our selection of a test statistic. Beyond that, we construct the test using the framework we already learned in Chapters 1 and 2.

Because \(\theta_a < \theta_o\), we define a lower-tail test. And since \(E[Y] = 3\theta\) increases with \(\theta\), we utilize the formulae from the hypothesis test reference tables that are on the “yes” line. The rejection-region boundary is thus \[ y_{\rm RR} = F_Y^{-1}(\alpha \vert \theta_o) \,, \] which in code is

## [1] 3.41

## [1] 1.635383

Our observed statistic is \(y_{\rm obs} = 3.410\) and the rejection-region boundary is 1.635: we fail to reject the null and conclude that \(\theta_o = 2\) is a plausible value of \(\theta\).
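The two values above can be reproduced with a few lines of R. This is a sketch under the assumption that the Gamma(3,\(\theta\)) sampling distribution maps onto R's gamma functions with `shape=3` and `scale` equal to \(\theta\), which is consistent with the mgf \((1-\theta t)^{-3}\). The variable names are illustrative.

```r
# Observed statistic and rejection-region boundary for the lower-tail test
# of H_o : theta = 2, with Y = sum(X) ~ Gamma(shape = n, scale = theta).
alpha   <- 0.05
theta.o <- 2
x.obs   <- c(0.215,1.131,2.064)
n       <- length(x.obs)

y.obs <- sum(x.obs)                            # observed sufficient statistic
y.rr  <- qgamma(alpha,shape=n,scale=theta.o)   # inverse cdf at alpha, under the null

y.obs
y.rr

# Lower-tail test: we reject only if y.obs falls below y.rr
y.obs < y.rr
```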

We note that because \(y_{\rm RR}\) is not a function of \(\theta_a\), we have not only defined the most powerful test, but we have also defined a uniformly most powerful test for all alternative hypotheses \(\theta_a < \theta_o\).

What is the \(p\)-value, and what is the power of the test if \(\theta = 1.5\)?

According to the hypothesis test reference tables, the \(p\)-value is \[ p = F_Y(y_{\rm obs} \vert \theta_o) \,, \] which in code is

## [1] 0.2440973

The \(p\)-value is 0.244, which is greater than \(\alpha\), as we expect.

The test power is \[ {\rm power}(\theta) = F_Y(y_{\rm RR} \vert \theta) \,, \] which in code is

## [1] 0.09762911

The power is 0.098…only 9.8% of the time will we reject the null hypothesis \(\theta_o = 2\) when \(\theta\) is actually 1.5.
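A sketch of the \(p\)-value and power calculations follows, again assuming that Gamma(3,\(\theta\)) corresponds to `shape=3` and `scale` equal to \(\theta\) in R. The variable names are illustrative. Both quantities are values of the cdf of \(Y\): the \(p\)-value is evaluated at \(y_{\rm obs}\) under the null, and the power at \(y_{\rm RR}\) under the alternative value of \(\theta\).

```r
# p-value and power for the lower-tail test of H_o : theta = 2.
alpha   <- 0.05
theta.o <- 2
theta   <- 1.5                                 # value at which power is evaluated
x.obs   <- c(0.215,1.131,2.064)
n       <- length(x.obs)

y.obs <- sum(x.obs)
y.rr  <- qgamma(alpha,shape=n,scale=theta.o)

# p-value: F_Y(y.obs | theta.o)
p.value <- pgamma(y.obs,shape=n,scale=theta.o)

# power: F_Y(y.rr | theta), the probability of landing in the rejection region
power <- pgamma(y.rr,shape=n,scale=theta)

p.value
power
```

Evaluating `pgamma(y.rr,shape=n,scale=theta.o)` returns \(\alpha\), which is a quick check that the boundary was computed under the null.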

3.8.2 UMP Test: Negative Binomial Distribution

Let’s assume that we sample \(n = 5\) iid data from a negative binomial distribution \[ p_X(x) = \binom{x+s-1}{x} p^s (1-p)^x \,, \] with \(x \in \{0,1,2,\ldots\}\) being the observed number of failures prior to observing the \(s^{\rm th}\) success, \(p \in (0,1]\), and \(s = 3\) successes. We wish to define the most powerful test of the simple hypotheses \(H_o : p_o = 0.5\) and \(H_a : p_a = 0.25\). We observe the values \(\mathbf{x}_{\rm obs} = \{5,3,10,12,4\}\), and we assume \(\alpha = 0.05\).

As in the last example, we begin by determining a sufficient statistic: \[ \mathcal{L}(p \vert \mathbf{x}) = \prod_{i=1}^5 \binom{x_i+s-1}{x_i} p^s (1-p)^{x_i} \propto \prod_{i=1}^5 p^s (1-p)^{x_i} = p^{ns} (1-p)^{\sum_{i=1}^5 x_i} \,. \] We identify \(Y = \sum_{i=1}^5 X_i\) as a sufficient statistic. Earlier in the chapter, we determined that if the \(X_i\)’s are iid draws from a negative binomial distribution with parameters \(p\) and \(s\), then the sum is also negative binomially distributed, with parameters \(p\) and \(ns\). So: we know the sampling distribution for \(Y\), and we can use it to define the most powerful test.

Because \(p_a < p_o\), we will define a lower-tail test. And since \(E[Y] = ns(1-p)/p\) decreases with \(p\), we will utilize the formulae from the hypothesis test reference tables that are on the “no” line (and we will reject the null hypothesis if \(y_{\rm obs} > y_{\rm RR}\)).

The rejection-region boundary is \[ y_{\rm RR} = F_Y^{-1}(1-\alpha \vert p_o) \,, \] which in code is

## [1] 34

## [1] 25

Our observed statistic is \(y_{\rm obs} = 34\) and the rejection-region boundary is 25: we reject the null hypothesis and state that there is sufficient evidence to conclude that \(p < 0.5\).
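These two values can be reproduced with the following sketch. It assumes that R's `qnbinom` parameterization (`size` successes, `prob` success probability, counting failures) matches the pmf given above, so that \(Y\) is sampled according to a negative binomial distribution with `size` \(= ns = 15\). The variable names are illustrative.

```r
# Observed statistic and rejection-region boundary for the lower-tail test
# of H_o : p = 0.5, with Y = sum(X) ~ NegBinom(size = n*s, prob = p).
alpha <- 0.05
s     <- 3
p.o   <- 0.5
x.obs <- c(5,3,10,12,4)
n     <- length(x.obs)

y.obs <- sum(x.obs)                            # total number of observed failures
y.rr  <- qnbinom(1-alpha,size=n*s,prob=p.o)    # inverse cdf at 1-alpha, under the null

y.obs
y.rr

# E[Y] decreases with p, so this lower-tail test rejects when y.obs > y.rr
y.obs > y.rr
```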

We note that because \(y_{\rm RR}\) is not a function of \(p_a\), we have not only defined the most powerful test, but we have also defined a uniformly most powerful test for all alternative hypotheses \(p_a < p_o\).

What is the \(p\)-value, and what is the power of the test if \(p = 0.3\)?

According to the hypothesis test reference tables (and the description of discreteness corrections given above), the \(p\)-value is \[ p = 1 - F_Y(y_{\rm obs}-1 \vert p_o) \,, \] which in code is

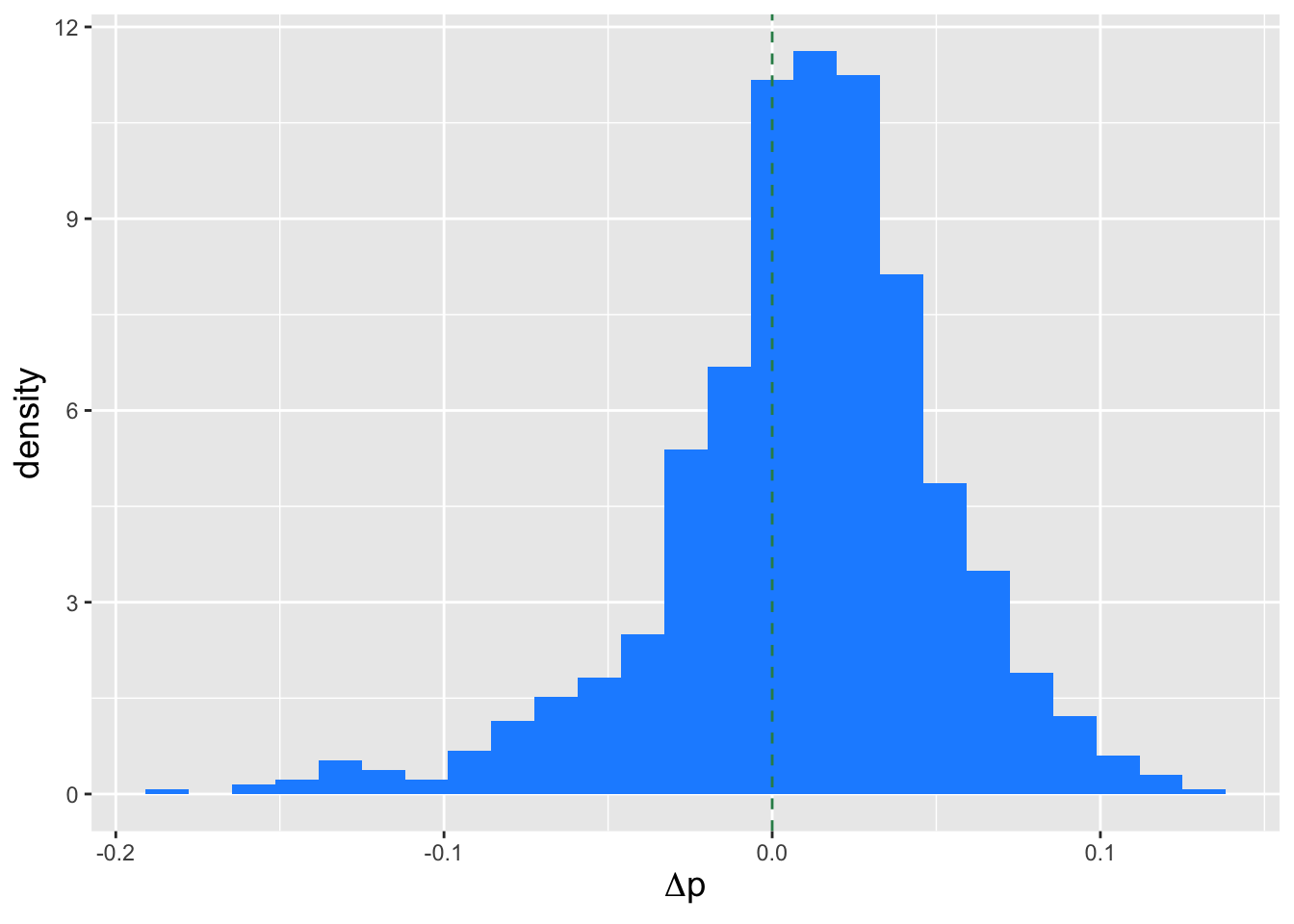

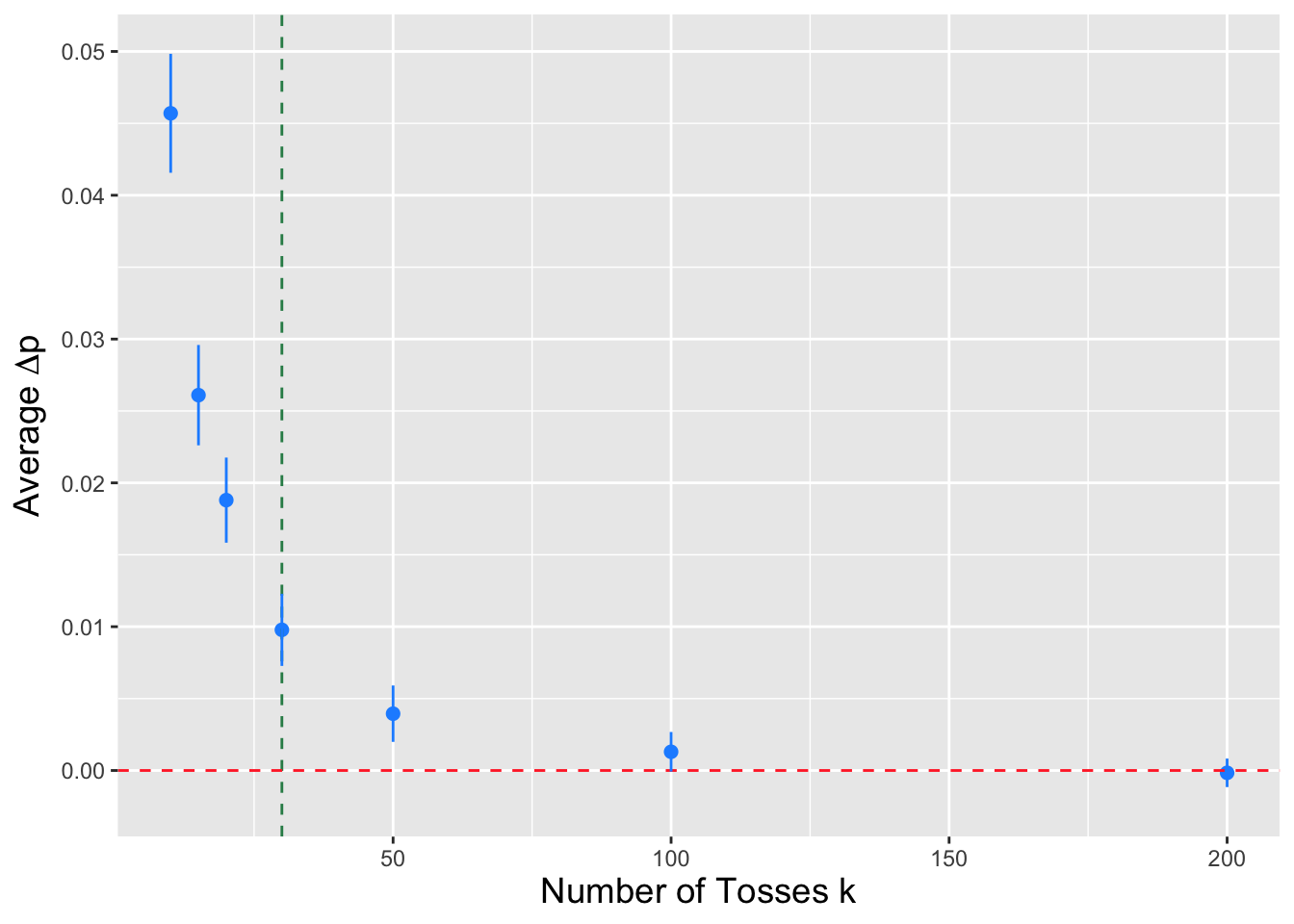

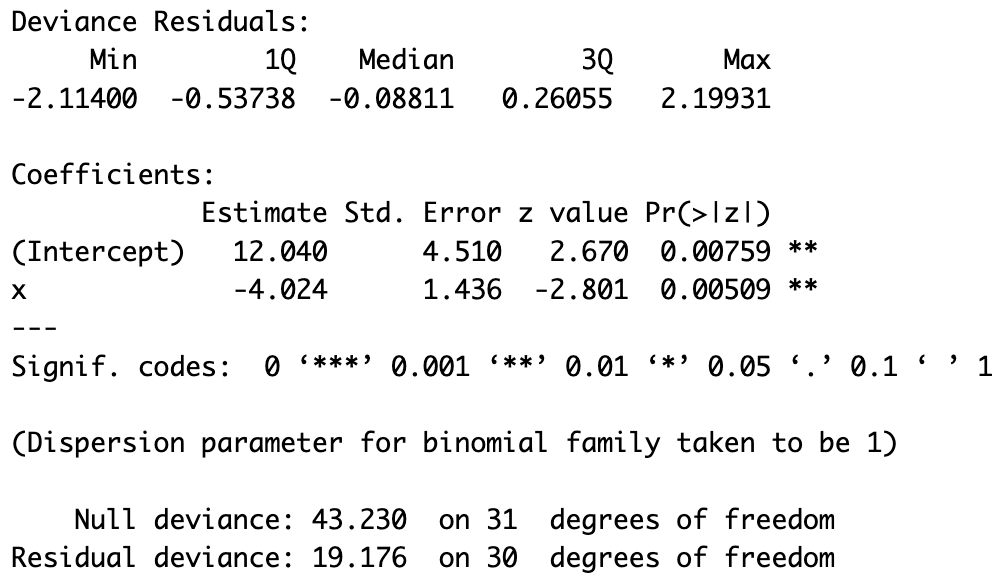

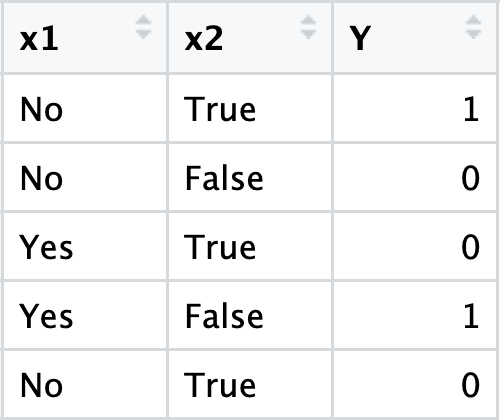

## [1] 0.002757601

The \(p\)-value is 0.0028, which is less than \(\alpha\): we would decide to reject the null hypothesis.
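The \(p\)-value calculation, including the discreteness correction, can be sketched as follows. As before, the variable names are illustrative, and the mapping of the pmf onto R's `pnbinom` with `size` \(= ns\) is an assumption consistent with the definition given at the start of this example.

```r
# p-value for the lower-tail test of H_o : p = 0.5, with the discreteness
# correction y.obs -> y.obs - 1, so that P(Y = y.obs) is included.
alpha <- 0.05
s     <- 3
p.o   <- 0.5
x.obs <- c(5,3,10,12,4)
n     <- length(x.obs)

y.obs <- sum(x.obs)

# 1 - F_Y(y.obs - 1 | p.o) = P(Y >= y.obs) under the null
p.value <- 1 - pnbinom(y.obs-1,size=n*s,prob=p.o)
p.value

# decision at level alpha
p.value < alpha
```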